This is a written follow-up to a talk presented at a recent Strata online event.

A new breed of startup is emerging, built to take advantage of the rising tides of data across a variety of verticals and the maturing ecosystem of tools for its large-scale analysis.

These are data startups, and they are the sumo wrestlers on the startup stage. The weight of data is a source of their competitive advantage. But as with their sumo mentors, size alone is not enough. The most successful data startups must be fast (with data), big (with analytics), and focused (with services).

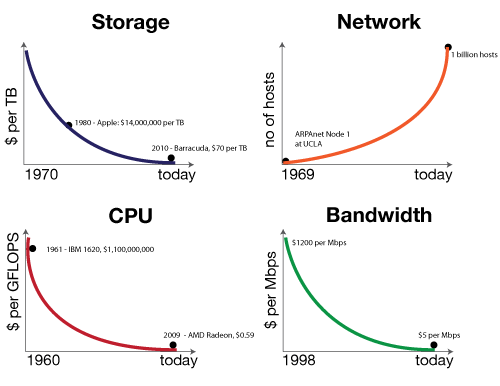

Setting the stage: The attack of the exponentials

The question of why this style of startup is arising today, versus a decade ago, owes to a confluence of forces that I call the Attack of the Exponentials. In short, over the past five decades, the cost of storage, CPU, and bandwidth has been dropping exponentially, while network access has increased exponentially. In 1980, a terabyte of disk storage cost $14 million. Today, it’s at $30 and dropping. Classes of data that were previously economically unviable to store and mine, such as machine-generated log files, now represent prospects for profit.
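To make the storage figures concrete, here is a rough back-of-the-envelope sketch of the halving time they imply. The two prices come from the paragraph above; the 30-year span between 1980 and "today" and the assumption of a constant exponential decline are mine, as is the helper name `halving_time_years`.

```python
import math


def halving_time_years(start_cost: float, end_cost: float, elapsed_years: float) -> float:
    """Years needed for the cost to halve, assuming a constant exponential decline."""
    halvings = math.log2(start_cost / end_cost)  # how many times the cost halved
    return elapsed_years / halvings


COST_1980 = 14_000_000  # dollars per terabyte in 1980 (figure from the text)
COST_TODAY = 30         # dollars per terabyte "today" (figure from the text)
ELAPSED_YEARS = 30      # assumption: "today" is roughly 30 years after 1980

halving = halving_time_years(COST_1980, COST_TODAY, ELAPSED_YEARS)
print(f"Implied halving time: {halving:.2f} years")
```

Under those assumptions, the cost of a terabyte has halved about every year and a half, which is what makes log files and other bulky data worth keeping.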

At the same time, these technological forces are not symmetric: CPU and storage costs have fallen faster than those of network and disk IO. Thus data is heavy; it gravitates toward centers of storage and compute power in proportion to its mass. Migration to the cloud is the manifest destiny for big data, and the cloud is the launching pad for data startups.

Leveraging the big data stack

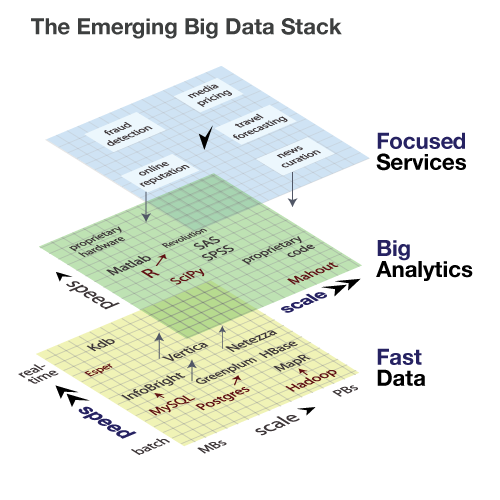

As the foundational layer in the big data stack, the cloud provides the scalable persistence and compute power needed to manufacture data products.

At the middle layer of the big data stack is analytics, where features are extracted from data, and fed into classification and prediction algorithms.

Finally, at the top of the stack are services and applications. This is the level at which consumers experience a data product, whether it be a music recommendation or a traffic route prediction.

Let’s take each of the layers in turn and discuss its competitive axes.

The competitive axes and representative technologies on the big data stack are illustrated here. At the bottom tier of data, free tools are shown in red (MySQL, Postgres, Hadoop), and we see how their commercial adaptations (InfoBright, Greenplum, MapR) compete principally along the axis of speed, offering faster processing and query times. Several of these players are pushing up towards the second tier of the data stack, analytics. At this layer, the primary competitive axis is scale: few offerings can address terabyte-scale data sets, and those that do are typically proprietary. Finally, at the top layer of the big data stack lie the services that touch consumers and businesses. Here, focus within a specific sector, combined with depth that reaches downward into the analytics tier, is the defining competitive advantage.

Fast data

At the base of the big data stack, where data is stored, processed, and queried, the dominant axis of competition was once scale. But as cheaper commodity disks and Hadoop have effectively addressed scalable persistence and processing, the focus of competition has shifted toward speed. The demand for faster disks has led to an explosion of interest in solid-state disk firms, such as Fusion-io, which went public recently. And several startups, most notably MapR, are promising faster versions of Hadoop.

Fusion-io and MapR represent another trend at the data layer: commercial technologies that challenge open source or commodity offerings on an efficiency basis, namely watts or CPU cycles consumed. With energy costs driving between one-third and one-half of data center operating costs, these efficiencies have a direct financial impact.

Finally, just as many large-scale, NoSQL data stores are moving from disk to SSD, others have observed that many traditional, relational databases will soon be entirely in memory. This is particularly true for applications that require repeated, fast access to a full set of data, such as building models from customer-product matrices. This brings us to the second tier of the big data stack, analytics.

Big analytics

At the second tier of the big data stack, analytics is the brains to cloud computing’s brawn. Here, however, speed is less of a challenge; given an addressable data set in memory, most statistical algorithms can yield results in seconds. The challenge is scaling these out to address large datasets, and rewriting algorithms to operate in an online, distributed manner across many machines.
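As a small illustration of what "online, distributed" means in practice, here is a sketch of a running mean and variance that can be updated one value at a time and merged across machines. It uses the well-known Welford update and the pairwise merge attributed to Chan et al.; the class and function names (`RunningStats`, `summarize`) are hypothetical, and plain Python lists stand in for data partitions on separate machines.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class RunningStats:
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        """Online step: fold in one value without storing the data."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def merge(self, other: "RunningStats") -> "RunningStats":
        """Distributed step: combine two partial summaries into one."""
        if other.n == 0:
            return RunningStats(self.n, self.mean, self.m2)
        if self.n == 0:
            return RunningStats(other.n, other.mean, other.m2)
        n = self.n + other.n
        delta = other.mean - self.mean
        mean = self.mean + delta * other.n / n
        m2 = self.m2 + other.m2 + delta * delta * self.n * other.n / n
        return RunningStats(n, mean, m2)

    @property
    def variance(self) -> float:
        """Sample variance of everything seen so far."""
        return self.m2 / (self.n - 1) if self.n > 1 else 0.0


def summarize(values: Iterable[float]) -> RunningStats:
    """Work done independently on each machine over its own partition."""
    stats = RunningStats()
    for v in values:
        stats.update(v)
    return stats


# Three partitions standing in for three machines.
partitions = [[1.0, 2.0, 3.0], [4.0, 5.0], [6.0, 7.0, 8.0, 9.0]]
total = RunningStats()
for part in partitions:
    total = total.merge(summarize(part))
print(total.n, total.mean, total.variance)
```

Only three numbers per partition cross the network, and the merged result matches what a single pass over all the data would give. Many algorithms need a comparable rewrite before they scale out.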

Because data is heavy and algorithms are light, one key strategy is to push code deeper to where the data lives, minimizing network IO. This often requires a tight coupling between the data storage layer and the analytics, and algorithms often need to be rewritten as user-defined functions (UDFs) in a language compatible with the data layer. Greenplum, leveraging its Postgres roots, supports UDFs written in both Java and R. Following Google’s BigTable, HBase is introducing coprocessors in its 0.92 release, which allow Java code to be associated with data tablets and minimize data transfer over the network. Netezza pushes even further into hardware, embedding an array of functions into FPGAs that are physically co-located with the disks of its storage appliances.
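The trade-off can be sketched with a toy model. This is not the API of Greenplum, HBase, or Netezza: `StorageNode`, `scan`, and `run` are hypothetical names, and a count of values returned stands in for network traffic. The sketch computes the same mean twice, once by pulling every row to the client and once by shipping a small function to each node.

```python
from typing import Callable, List, Sequence, Tuple


class StorageNode:
    """Toy stand-in for one machine that holds a slice of the data."""

    def __init__(self, rows: Sequence[float]) -> None:
        self.rows = list(rows)

    def scan(self) -> List[float]:
        """Pull model: every row is sent back to the caller."""
        return list(self.rows)

    def run(self, fn: Callable[[List[float]], Tuple[float, int]]) -> Tuple[float, int]:
        """Push model: the function runs next to the data; only its result returns."""
        return fn(self.rows)


def partial_sum(rows: List[float]) -> Tuple[float, int]:
    """The 'UDF': reduces a node's rows to a (sum, count) pair."""
    return (sum(rows), len(rows))


def mean_by_pulling(nodes: Sequence[StorageNode]) -> Tuple[float, int]:
    moved = 0
    all_rows: List[float] = []
    for node in nodes:
        rows = node.scan()
        moved += len(rows)  # every row crosses the network
        all_rows.extend(rows)
    return (sum(all_rows) / len(all_rows), moved)


def mean_by_pushing(nodes: Sequence[StorageNode]) -> Tuple[float, int]:
    moved = 0
    total, count = 0.0, 0
    for node in nodes:
        s, c = node.run(partial_sum)
        moved += 2  # only a sum and a count cross the network
        total += s
        count += c
    return (total / count, moved)


nodes = [StorageNode([1, 2, 3]), StorageNode([4, 5, 6, 7])]
pulled_mean, pulled_moved = mean_by_pulling(nodes)
pushed_mean, pushed_moved = mean_by_pushing(nodes)
print(pulled_mean, pulled_moved, pushed_mean, pushed_moved)
```

Both approaches return the same answer. In the pull model, traffic grows with the number of rows; in the push model, it grows only with the number of nodes. That gap is why data platforms compete on running code close to the data.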

The field of what’s alternatively called business or predictive analytics is nascent, and while a range of enabling tools and platforms exist (such as R, SPSS, and SAS), most of the algorithms developed are proprietary and vertical-specific. As the ecosystem matures, one may expect to see the rise of firms selling analytical services, such as recommendation engines, that interoperate across data platforms. But in the near term, consultancies like Accenture and McKinsey are positioning themselves to provide big analytics via billable hours.

Outside of consulting, firms with analytical strengths push upward, surfacing focused products or services to achieve success.

Focused services

The top of the big data stack is where data products and services directly touch consumers and businesses. For data startups, these offerings more frequently take the form of a service, offered as an API rather than a bundle of bits.

BillGuard is a great example of a startup offering a focused data service. It monitors customers’ credit card statements for dubious charges, and even leverages the collective behavior of users to improve its fraud predictions.

Several startups are working on algorithms that can crack the content relevance nut, including Flipboard and News.me. Klout delivers a pure data service that uses social media activity to measure online influence. My company, Metamarkets, crunches server logs to provide pricing analytics for publishers.

For data startups, data processes and algorithms define their competitive advantage. Poor predictions — whether of fraud, relevance, influence, or price — will sink a data startup, no matter how well-designed their web UI or mobile application.

Focused data services aren’t limited to startups: LinkedIn’s People You May Know and Foursquare’s Explore feature enhance engagement with their companies’ core products, but only when they correctly suggest people and places.

Democratizing big data

The axes of strategy in the big data stack show analytics to be squarely at the center. Data platform providers are pushing upwards into analytics to differentiate themselves, touting support for fast, distributed code execution close to the data. Traditional analytics players, such as SAS and SAP, are expanding their storage footprints and challenging the need for alternative data platforms as staging areas. Finally, data startups and many established firms are creating services whose success hinges directly on proprietary analytics algorithms.

The emergence of data startups highlights the democratizing consequences of a maturing big data stack. For the first time, companies can successfully build offerings without deep infrastructure know-how and focus at a higher level, developing analytics and services. By all indications, this is a democratic force that promises to unleash a wave of innovation in the coming decade.
