Here are a few of the data stories that caught my eye this week.

Where big data and security collide

Could security be the next killer app for Hadoop? That’s what GigaOm’s Derrick Harris suggests: “The open-source, data-processing tool is already popular for search engines, social-media analysis, targeted marketing and other applications that can benefit from clusters of machines churning through unstructured data — now it’s turning its attention to security data.” Noting the universality of security concerns, Harris suggests that “targeted applications” using Hadoop might be a solid starting point for mainstream businesses to adopt the technology.

Could security be the next killer app for Hadoop? That’s what GigaOm’s Derrick Harris suggests: “The open-source, data-processing tool is already popular for search engines, social-media analysis, targeted marketing and other applications that can benefit from clusters of machines churning through unstructured data — now it’s turning its attention to security data.” Noting the universality of security concerns, Harris suggests that “targeted applications” using Hadoop might be a solid starting point for mainstream businesses to adopt the technology.

Juniper Networks’ Chris Hoff has also analyzed the connections between big data and security in a couple of recent posts on his Rational Survivability blog. Hoff contends that while we’ve had the capabilities to analyze security-related data for some time, that’s traditionally happened with specialized security tools, meaning that insights are “often disconnected from the transaction and value of the asset from which they emanate.”

Hoff continues:

Even when we do start to be able to integrate and correlate event, configuration, vulnerability or logging data, it’s very IT-centric. It’s very INFRASTRUCTURE-centric. It doesn’t really include much value about the actual information in use/transit or the implication of how it’s being consumed or related to.

But as both Harris and Hoff argue, Hadoop might help address this as it can handle all an organization’s unstructured data and can enable security analysis that isn’t “disconnected.” And both Harris and Hoff point to Zettaset as an example of a company that is tackling big data and security analysis by using Hadoop.

Strata Conference New York 2011, being held Sept. 22-23, covers the latest and best tools and technologies for data science — from gathering, cleaning, analyzing, and storing data to communicating data intelligence effectively.

Strata Conference New York 2011, being held Sept. 22-23, covers the latest and best tools and technologies for data science — from gathering, cleaning, analyzing, and storing data to communicating data intelligence effectively.

What’s your most important personal data?

Concerns about data security also occur at the personal level. To that end, The Locker Project, Singly‘s open source project to help people collect and control their personal data, recently surveyed people about the data they see as most important.

The survey asked people to choose from the following: contacts, messages, events, check-ins, links, photos, music, movies, or browser history. The results are in, and no surprise: photos were listed as the most important, with 37% of respondents (67 out of 179) selecting that option. Forty-six people listed their contacts, and 23 said their messages were most important.

Interestingly, browser history, events, and check-ins were rated the lowest. As Singly’s Tom Longson ponders:

Do people not care about where they went? Is this data considered stale to most people, and therefore irrelevant? I personally believe I can create a lot of value from Browser History and Check-ins. For example, what websites are my friends going to that I’m not? Also, what places should I be going that I’m not? These are just a couple of ideas.

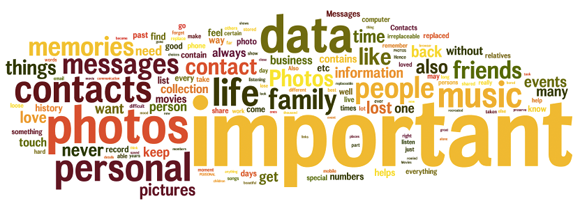

But just as revealing as the ranking of data were the reasons that people gave for why certain types were most important, as you can see in the word cloud created from their responses.

Click to enlarge. See Singly’s associated analysis of this data.

Click to enlarge. See Singly’s associated analysis of this data.

House panel moves forward on data retention law

The U.S. Congress is in recess now, but among the last-minute things it accomplished before vacation was passage by the House Judiciary Committee of “The Protecting Children from Internet Pornographers Act of 2011.” Ostensibly aimed at helping track pedophiles and pornographers online, the bill has raised a number of concerns about Internet data and surveillance. If passed, the law would require, among other things, that Internet companies collect and retain the IP addresses of all users for at least one year.

Representative Zoe Lofgren was one of the opponents of the legislation in committee, trying unsuccessfully to introduce amendments that would curb its data retention requirements. She also tried to have the name of the law changed to the “Keep Every American’s Digital Data for Submission to the Federal Government Without a Warrant Act of 2011.”

In addition to concerns over government surveillance, TechDirt’s Mike Masnick and the Cato Institute’s Julian Sanchez have also pointed to the potential security issues that could arise from lengthy data retention requirements. Sanchez writes:

If I started storing big piles of gold bullion and precious gems in my home, my previously highly secure apartment would suddenly become laughably insecure, without my changing my security measures at all. If a company significantly increases the amount of sensitive or valuable information stored in its systems — because, for example, a government mandate requires them to keep more extensive logs — then the returns to a single successful intrusion (as measured by the amount of data that can be exfiltrated before the breach is detected and sealed) increase as well. The costs of data retention need to be measured not just in terms of terabytes, or man hours spent reconfiguring routers. The cost of detecting and repelling a higher volume of more sophisticated attacks has to be counted as well.

New data from a very old map

And in more pleasant “storing old data” news: the Gough Map, the oldest surviving map of Great Britain, dating back to the 14th century, has now been digitized and made available online.

And in more pleasant “storing old data” news: the Gough Map, the oldest surviving map of Great Britain, dating back to the 14th century, has now been digitized and made available online.

The project to digitize the map, which now resides in Oxford University’s Bodleian Library took 15 months to complete. According to the Bodleian, the project explored the map’s “‘linguistic geographies,’ that is the writing used on the map by the scribes who created it, with the aim of offering a re-interpretation of the Gough Map’s origins, provenance, purpose and creation of which so little is known.”

Among the insights gleaned includes the revelation that the text on the Gough Map is the work of at least two different scribes — one from the 14th century and a later one, from the 15th century, who revised some pieces. Furthermore, it was also discovered that the map was made closer to 1375 than 1360, the data often given to it.

Got data news?

Feel free to email me.

Related: