One of the hardest things about teaching a large class is grading exams and homework assignments. In my teaching days a “large class” was only in the few hundreds (still a challenge for the TAs and instructor). But in the age of MOOCs, classes with a few (hundred) thousand students aren’t unusual.

Researchers at Stanford recently combed through over one million homework submissions from a large MOOC class offered in 2011. Students in the machine-learning course submitted programming code for assignments that consisted of several small programs (the typical submission was about 16 lines of code). While over 120,000 enrolled only about 10,000 students completed all homework assignments (about 25,000 submitted at least one assignment).

The researchers were interested in figuring out ways to ease the burden of grading the large volume of homework submissions. The premise was that by sufficiently organizing the “space of possible solutions”, instructors would provide feedback to a few submissions, and their feedback could then be propagated to the rest.

Domain specific metrics

Organizing the space of homework submissions required a bit of domain1 expertise. The researchers settled on two dimensions: functional variability and coding style (syntactic variability). Unit test results were used as a a proxy for functional variability. In the machine-learning course unit test results were numbers, and programs were considered functionally equal if resulting output vectors were the same. Abstract syntax trees (AST is a tree representation of code structure) and tree edit distance2 were used to measure stylistic similarity of code submissions.

Clustering and Feedback Propagation

Clustering submissions along key metrics is a natural way to reduce the amount of work required. The hope is that homework submissions within the same cluster are similar enough, that feedback for one member can be propagated to the rest of the cluster.

In this particular setting, the space of homework submissions were organized using AST: solutions within a cutoff edit distance of 5 of each other, formed clusters of (syntactically) similar solutions. Cluster assignment is also related to the other metric under consideration – unit test results. It turns out that if two AST’s are about “10 edits” from each other, they are also likely to be functionally similar (unit test results are similar):

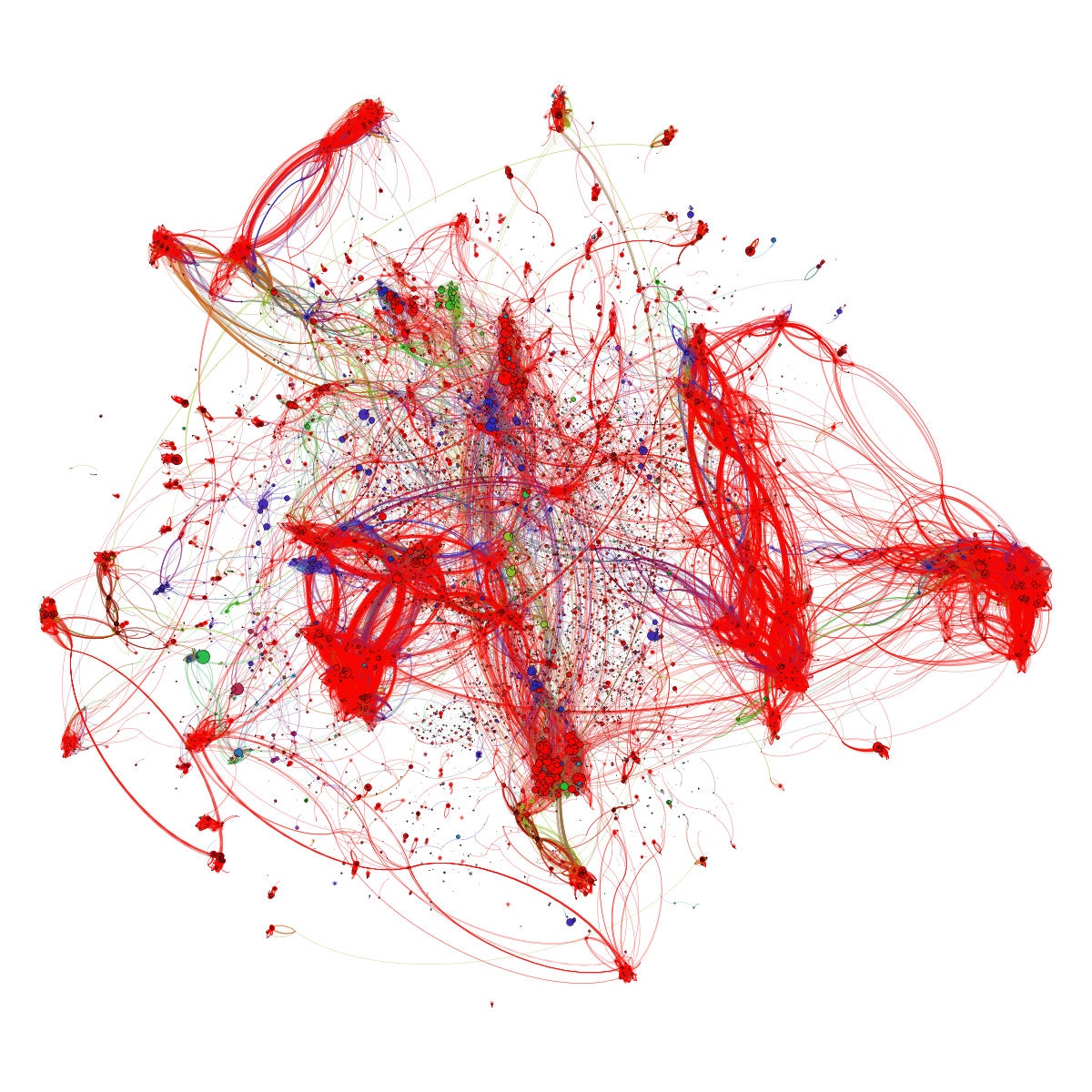

The above figure is the landscape of ~40,000 student submissions to the same programming assignment on Coursera’s Machine Learning course. Nodes represent submissions and edges are drawn between syntactically similar submissions. Colors correspond to performance on a battery of unit tests (with red submissions passing all unit tests). In particular, clusters of similarly colored nodes correspond to multiple similar implementations that behaved in the same way (under unit tests).

While this approach has yet to be used for grading, some instructors have used it to highlight common mistakes: clusters can also be used to identify common errors found in homework submissions. For a recent machine-learning course, feedback to students homework submissions, were accompanied by lists3 containing common errors (generated through clustering).

It’s great to see a machine-learning course employ … machine-learning! While this assessment method may not work in all domains, it will in subjects where metrics for organizing solutions can be found.

Related posts:

(0) Thanks to Jon Huang for walking me through the Code Webs project.

(1) No surprise – the task is “to grade” homework assignments for a specific course.

(2) Tree edit distance is a measure of similarity of two tree structures, that’s based on cost functions and edit operations. Once they settled on this metric, the Stanford researchers still needed to compute it at scale: “Though it is computationally expensive to calculate the similarity between all pairs of solutions in a massive class, the task is feasible given the dynamic programming edit distance algorithm presented by Shasha et al. While the algorithm is quartic in the worst case, it is quadratic in practice for student submission. By exploiting their algorithm and using a computing cluster, we are able to match submissions at MOOC scales.”

(3) Lists containing common errors have been well received.

O’Reilly Strata Conference — Strata brings together the leading minds in data science and big data — decision makers and practitioners driving the future of their businesses and technologies. Get the skills, tools, and strategies you need to make data work.

O’Reilly Strata Conference — Strata brings together the leading minds in data science and big data — decision makers and practitioners driving the future of their businesses and technologies. Get the skills, tools, and strategies you need to make data work.

Strata + Hadoop World: October 28-30 | New York, NY

Strata in London: November 15-17 | London, England

Strata in Santa Clara: February 11-13 | Santa Clara, CA