In the first two posts in this series, I examined the connection between information architecture and user interface design, and then looked closely at the opportunities and constraints inherent in information architecture as we’ve learned to practice it on the web. Here, I outline strategies and tactics that may help us think through these constraints and that may in turn help us lay the groundwork for an information architecture practice tailored and responsive to our increasingly connected physical environments.

The challenge at hand

NYU Media, Culture, and Communication professor Alexander Galloway examines the cultural and political impact of the Internet by tracing its history back to its postwar origins. Built on a distributed information model, “the Internet can survive [nuclear] attacks not because it is stronger than the opposition, but precisely because it is weaker. The Internet has a different diagram than a nuclear attack does; it is in a different shape.” For Galloway, the global distributed network trumped the most powerful weapon built to date not by being more powerful, but by usurping its assumptions about where power lies.

This “differently shaped” foundation is what drives many of the design challenges I’ve raised concerning the Internet of Things, the Industrial Internet and our otherwise connected physical environments. It is also what creates such depth of possibility. In order to accommodate this shape, we will need to take a wider look at the emergent shape of the world, and the way we interact with it. This means reaching out into other disciplines and branches of knowledge (e.g. in the humanities and physical sciences) for inspiration and to seek out new ways to create and communicate meaning.

The discipline I’ve leaned on most heavily in these last few posts is linguistics. From these explorations, a few tactics for information architecture for connected environments emerge: leveraging multiple modalities, practicing soft assembly, and embracing ambiguity.

Leverage multiple modalities

In my previous post, I examined the nature of writing as it relates to information architecture for the web. As a mode of communication – and of meaning making – writing is incredibly powerful, but that power does come with limitations. Language’s linearity is one of these limitations; its symbolic modality is another.

In linguistics, a sign’s “modality” refers to the nature of the relationship between a signifier and a signified, between the name of a thing and the thing itself. In Ferdinand de Saussure’s classic example (from the Course in General Linguistics), the thing “tree” is associated with the word “tree” only by arbitrary convention. This symbolic modality, though by far the most common modality in language, isn’t the only way we make sense of the world. Philosopher Charles Sanders Peirce identified two additional modes that offer alternatives to the arbitrary sign of spoken language: the iconic and the indexical modes.

Indexical modes link signifier and signified by concept association. Smoke signifies fire, thunder signifies a storm, footprints signify a past presence. The signifier “points” to the signified (yes, the reference is related to our index finger). Iconic modes, on the other hand, link signifier and signified by resemblance or imitation of salient features. A circle with two dots and lines in the right places signifies the human face to humans – our perceptual apparatus is tuned to recognize this pattern as a face (a pre-programmed disposition which easily becomes a source of amusement when comparing the power outlets of various regions).

Before you write off this scrutiny of modes as esoteric navel-gazing – or juvenile visual punning – let’s take an example many of us come into contact with daily: the Apple MacBook trackpad. Prior to the OS X Lion operating system, moving your finger “down” the trackpad (i.e. from the space bar toward the edge of the computer body) caused a page on screen to scroll down – which is to say, the elements you perceived on the screen moved up (so you could see what had been concealed “below” the bottom edge of the screen).

The root of this spatial metaphor is enlightening: if you think back a few years to the scroll wheel mouse (or perhaps you’re still using one?), you can see where the metaphor originates. When you scroll the wheel toward you, it is as if the little wheel is in contact with a sheet of paper sitting underneath the mouse. You move the wheel toward you, the wheel moves the paper up. It is an easy mechanical model that we can understand based on our embodied relationship to physical things.

This model was translated as-is to the first trackpads – until another kinetic model replaced it: the touchscreen. On a touchscreen, you’re no longer mediating movement with a little wheel; you’re touching the content itself and moving it around directly. Once this model became sufficiently widespread, it became readily accessible to users of other devices – such as the trackpad – and, eventually, became the default trackpad setting for Apple devices.
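
The difference between the two models comes down to a single sign flip, which a minimal sketch can make concrete. Everything here is an illustrative assumption rather than how any operating system actually implements scrolling: the names `ScrollModel` and `content_delta` are hypothetical, and the sign convention (positive means "toward the user," or down the screen) is chosen only for this example.

```python
from enum import Enum


class ScrollModel(Enum):
    """Two mental models for mapping finger movement to content movement."""
    WHEEL = "wheel"              # finger turns a wheel that rolls over the page
    TOUCHSCREEN = "touchscreen"  # finger drags the page itself


def content_delta(finger_delta: float, model: ScrollModel) -> float:
    """Return how far on-screen content moves for a given finger movement.

    Assumed convention: positive values mean movement toward the user
    (down the trackpad, down the screen); negative values mean away.
    """
    if model is ScrollModel.TOUCHSCREEN:
        # Direct manipulation: content follows the finger.
        return finger_delta
    # Wheel metaphor: content moves opposite to the finger.
    return -finger_delta


# Finger moves 10 units toward the user under each model.
wheel_result = content_delta(10, ScrollModel.WHEEL)
touch_result = content_delta(10, ScrollModel.TOUCHSCREEN)
```

Under the wheel model the content moves up as the finger moves down; under the touchscreen model the content follows the finger. Neither mapping is more "correct" than the other. Each is legible only through the physical model it borrows from.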

Left: Danish electrical plugs, on Wikimedia Commons. Right: US electrical plugs, photo by Andy Fitzgerald.

Here we can see different modes at play. The trackpad isn’t strictly symbolic, nor is it iconic. Its relationship to the action it accomplishes is inferred by our embodied understanding of the physical world. This is signification in the indexical mode.

This thought exercise isn’t purely academic: by basing these kinds of interactions on mechanical models that we understand in a physical and embodied way (we know in our bodies what it is like to touch and move a thing with our fingers), we ground them in an experience that is more deeply rooted in our understanding of the physical world than an arbitrary symbolic association. In the case of iconic signification, for example in recognizing faces in power outlets (and even dispositions – compare, for instance, the plug shapes of North America and Denmark), our meaning making is even more deeply rooted in our innate perceptual apparatus.

Practice soft assembly

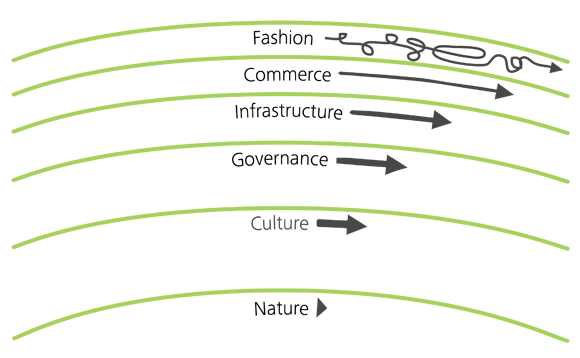

Attention to modalities is important because the more deeply a pattern or behavior is rooted in embodied experience, the more durable it is – and the more reliably it can be leveraged in design. Stewart Brand’s concept of “pace layers” helps to explain why this is the case. Brand argues in How Buildings Learn that the structures that make up our experienced environment don’t all change at the same rate. Nature is very slow to change; culture changes more quickly. Commerce changes more quickly than the layers below it, and technology changes faster than all of these. Fashion, relative to these other layers, changes most quickly of all.

When we discuss meaning making, our pace layer model doesn’t describe rate of change; it describes depth of embodiment. Remembering the “ctrl-z to undo” function is a learned symbolic (hence arbitrary) association. Touching an icon on a touchscreen (or, indexically, on a trackpad) and sliding it up is an associative embodied action. If we get these actions right, we’ve tapped into a level of meaning making that is rooted in one of the slowest of the physical pace layers: the fundamental way in which we perceive our natural world.

This has obvious advantages for usability. Intuitive interfaces are instantly usable because they capitalize on the operational knowledge we already have. When an interface is completely new, in order to be intuitive it must “borrow” knowledge from another sphere of experience (what Donald Norman in The Psychology of Everyday Things refers to as “knowledge in the world”). The touchscreen interface popularized by the iPhone is a ready example of this. More recently, Nest Protect’s “wave to hush” interaction provides an example that builds on learned physical interactions (waving smoke away from a smoke detector to try to shut it up) in an entirely new but instantly comprehensible way.

An additional and often overlooked advantage of tapping into deep layers of meaning making is that by leveraging more embodied associations, we’re able to design for systems that fit together loosely and in a natural way. By “natural” here, I mean associations that don’t need to be overwritten by an arbitrary, symbolic association in order to signify; associations that are rooted in our experience of the world and in our innate perceptual abilities. Our models become more stable and, ironically, more fluid at once: remapping one’s use of the trackpad is as simple as swapping out one easily accessible mental model (the wheel metaphor) for another (the touchscreen metaphor).

This loose coupling allows for structural alternatives to rigid (and often brittle) complex top-down organizational approaches. In Beyond the Brain, University of Lethbridge psychology professor Louise Barrett uses this concept of “soft assembly” to explain how in both animals and robots “a whole variety of local control factors effectively exploit specific local (often temporary) conditions, along with the intrinsic dynamics of an animal’s body, to come up with effective behavior ‘on the fly.’” Barrett describes how soft assembly accounts for complex behavior in simple organisms (her examples include ants and pre-microprocessor robots), then extends those examples to show how complex instances of human and animal perception can likewise be explained by taking into account the fundamental constitutive elements of perception.

For those of us tasked with designing the architectures and interaction models of networked physical spaces, searching hard for the most fundamental level at which an association is understood (in whatever mode it is most basically communicated), and then articulating that association in a way that exploits the intrinsic dynamics of its environment, allows us to build physical and information structures that don’t have to be held together by force, convention, or rote learning, but which fit together by the nature of their core structures.

Embrace ambiguity

Alexander Galloway claims that the Internet is a different shape than war. I have been making the argument that the Internet of Things is a different shape than textuality. The systems that comprise our increasingly connected physical environments are, for the moment, often broader than we can understand fluidly. Embracing ambiguity – embracing the possibility of not understanding exactly how the pieces fit together, but trusting them to come together because they are rooted in deep layers of perception and understanding – opens up the possibility of designing systems that surpass our expectations of them. “Soft assembling” those systems based on cognitively and perceptually deep patterns and insight ensures that our design decisions aren’t just guesses or luck. In a word, we set the stage for emergence.

Embracing ambiguity is hard. Especially if you’re doing work for someone else – and even more so if that someone else is not a fan of ambiguity (and this includes just about everyone on the client side of the design relationship). By all means, be specific whenever possible. But when you’re designing for a range of contexts, users, and uses – and especially when those design solutions are in an emerging space – specificity can artificially limit a design.

In practical terms, embracing ambiguity means searching for solutions beyond those we can easily identify with language. It means digging for the modes of meaning making that are buried in our physical interactions with the built and the natural environment. This allows us the possibility of designing for systems which fit together with themselves (even though we can’t see all the parts) and with us (even though there’s much about our own behavior we still don’t understand), potentially reshaping the way we perceive and act in our world as a result.

Architecting connected physical environments requires that we think differently about how people think and about how they understand the world we live in. Textuality is a tool – and a powerful tool – but it represents only one way of making sense of information environments. We can bring other approaches to designing for these environments by thinking beyond text and beyond language. We accomplish this by leveraging our embodied experience (and embodied understanding of the world) in our design process: a combination of considering modalities, practicing soft assembly, and embracing ambiguity will help us move beyond the limits of language and think through ways to architect physical environments that make this new context enriching and empowering.

As we’re quickly discovering, the nature of connected physical environments imbricates the digital and the analog in ways that were unimaginable a decade ago. We’re at a revolutionary information crossroads, one where our symbolic and physical worlds are coming together in an unprecedented way. Our temptation thus far has been to drive ahead with technology and to try to fit all the pieces together with the tried and true methods of literacy and engineering. Accepting that the shape of this new world is not the same as what we have known up until now does not mean we have to give up attempts to shape it to our common good. It does, however, mean that we will have to rethink our approach to “control” in light of the deep structures that form the basis of embodied human experience.
