Alex Howard

As digital disruption comes to Africa, investing in data journalism takes on new importance

Justin Arenstein is building the capacity of African media to practice data-driven journalism.

This interview is part of our ongoing look at the people, tools and techniques driving data journalism.

I first met Justin Arenstein (@justinarenstein) in Chişinău, Moldova, where the media entrepreneur and investigative journalist was working as a trainer at a “data boot camp” for journalism students. The long-haired, bearded South African instantly makes an impression with his intensity, good humor and focus on creating work that gives citizens actionable information.

Whenever we’ve spoken about open data and open government, Arenstein has been a fierce advocate for data-driven journalism that not only makes sense of the world for readers and viewers, but also provides them with tools to become more engaged in changing the conditions they learn about in the work.

He’s relentlessly focused on how open data can be made useful to ordinary citizens, from Africa to Eastern Europe to South America. For instance, in November, he highlighted how data journalism boosted voter registration in Kenya through a simple website built with modern web-based tools and technologies.

For the last 18 months, Arenstein has been working as a Knight International Fellow embedded with the African Media Initiative (AMI) as a director for digital innovation. The AMI is a group of the 800 largest media companies in Africa. In that role, Arenstein has been creating an innovation program for the AMI, building more digital capacity in countries that are as much in need of effective accountability from the Fourth Estate as any in the world. Digital disruption hasn’t yet played itself out in African media because of a number of factors, Arenstein explained, but he estimates that it will arrive within five years.

“Media wants to be ready for this,” he said, “to try and avoid as much of the business disintegration as possible. The program is designed to help them grapple with and potentially leapfrog coming digital disruption.”

In the following interview, Arenstein discusses the African media ecosystem, the role of Hacks/Hackers in Africa, and expanding the capacity of data journalism.

U.S. Senate to consider long overdue reforms on electronic privacy

The silver lining in the role of cloud-based email in the CIA Director's resignation is a renewed focus on digital privacy.

In 2010, electronic privacy needed digital due process. In 2012, it’s worth defending your vanishing rights online.

This week, there’s an important issue before Washington that affects everyone who sends email, stores files in Dropbox or sends private messages on social media. In January, O’Reilly Media went dark in opposition to anti-piracy bills. Personally, I believe our right to digital due process before government can access our private electronic communications is just as important.

Why? Here’s the context for my interest. The silver lining in the way former CIA Director David Petraeus’ affair was discovered may be its effect on the national debate around email and electronic privacy, and our rights in a surveillance state. The courts and Congress have failed to fully address the constitutionality of warrantless wiretapping of cellphones and warrantless tracking of the location of “persons of interest.” Phones themselves, however, are a red herring. What’s at stake is the Fourth Amendment in the 21st century, as it applies to the personal user data held by telecommunications and technology firms, which government is requesting without digital due process.

On Thursday, the Senate Judiciary Committee will consider an update to the Electronic Communications Privacy Act (ECPA), the landmark 1986 legislation that governs the protections citizens have when they communicate using the Internet or cellphones. (It’s the small item at the bottom of the committee’s meeting page.)

UPDATE: Senator Leahy’s manager’s amendment to ECPA passed, but Politico’s Tony Romm reports that the full Congress is unlikely to pass ECPA reform in this session.

Investigating data journalism

Scraping together the best tools, techniques and tactics of the data journalism trade.

Great journalism has always been based on adding context, clarity and compelling storytelling to facts. While the tools have improved, the art is the same: explaining the who, what, where, when and why behind the story. The explosion of data, however, provides new opportunities to think about reporting, analysis and publishing stories.

As you may know, there’s already a Data Journalism Handbook to help journalists get started. (I contributed some commentary to it.) Over the next month, I’m going to be investigating the best data journalism tools currently in use and the data-driven business models that are working for news startups. We’ll then publish a report that shares those insights and combines them with our profiles of data journalists.

Why dig deeper? Getting to the heart of what’s hype and what’s actually new and noteworthy is worth doing. I’d like to know, for instance, whether tutorials specifically designed for journalists can be useful, as Joe Brockmeier suggested at ReadWrite. On a broader scale, how many data journalists are working today? How many will be needed? What are the primary tools they rely upon now? What will they need in 2013? Who are the leaders or primary drivers in the area? What are the most notable projects? What organizations are embracing data journalism, and why?

This isn’t a new interest for me, but it’s one I’d like to ground in more research. When I was offered an opportunity to give a talk at the second International Open Government Data Conference at the World Bank this July, I chose to talk about open data journalism and invited practitioners on stage to share what they do. If you watch the talk and the ensuing discussion in the video of the session, you’ll pick up great insight from the work of the Sunlight Foundation, the experience of Homicide Watch and why the World Bank is focused on open data journalism in developing countries.

3 big ideas for big data in the public sector

Predictive analytics, code sharing and distributed intelligence could improve criminal justice, cities and response to pandemics.

If you’re going to try to apply the lessons of “Moneyball” to New York City, you’ll need to get good data, earn the support of political leaders and build a team of data scientists. That’s precisely what Mike Flowers has done in the Big Apple, and his team has helped to save lives and taxpayer dollars. At the Strata + Hadoop World conference held in New York in October, Flowers, the director of analytics for the Office of Policy and Strategic Planning in the Office of the Mayor of New York City, gave a keynote talk about how predictive data analytics have made city government more efficient and productive.

While the story that Flowers told is a compelling one, the role of big data in the public sector was in evidence in several other sessions at the conference. Here are three more ways that big data is relevant to the public sector that stood out from my trip to New York City.

An innovation agenda to help people win the race against the machines

Policy recommendations to get the engines of democracy firing on all cylinders.

If the country is going to have a serious conversation about innovation, unemployment and job creation, we must talk about our race against the machines. For centuries, we’ve been automating people out of jobs. Today’s combination of big data, automation and artificial intelligence, however, looks like something new, from self-driving cars to e-discovery software to “robojournalism” to financial advisors to medical diagnostics. Last year, venture capitalist Marc Andreessen wrote that “software is eating the world.”

Computers and distributed systems are now demonstrating skills in the real world that we once thought would always be the domain of human beings. “That’s just not the case any more,” said MIT research professor Andrew McAfee, in an interview earlier this year at the Strata Conference in Santa Clara, Calif.:

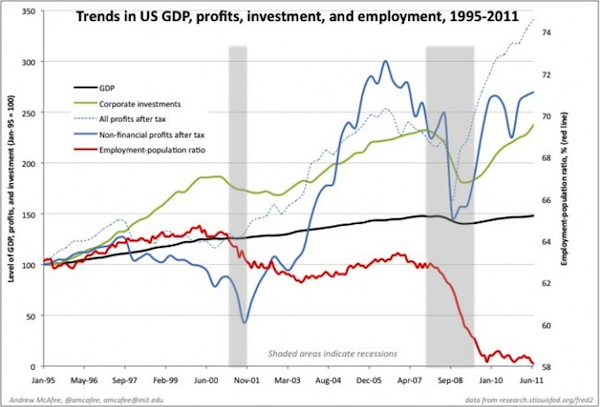

McAfee and his research partner, MIT economics professor Erik Brynjolfsson, remain fundamentally optimistic about the effect of the digital revolution on the world economy. But the drivers of joblessness that they explore in their book, Race Against The Machine, deserved more discussion in this year’s political campaign. Given a tepid, largely flat recovery in the U.S. labor market, the Obama administration, now granted a second term, should pull some new policy levers.

What could, or should, the new administration do? On Tuesday, I had the pleasure of speaking on a panel at the Center for Technology Innovation at the Brookings Institution about what a “First 100 Days Innovation Agenda” might look like for the new administration. (Full disclosure: earlier this year, I was paid to moderate a workshop that discussed this issue, and I contributed to the paper on building an innovation economy that was published this week.) The event was livestreamed and is available on demand.

Below are recommendations from the paper and from professors McAfee and Brynjolfsson, followed by the suggestions I made during the forum, drawing from my conversations with people around the United States on this topic over the past two years.

In the 2012 election, big data-driven analysis and campaigns were the big winners

Data science played a decisive role in the 2012 election, from the campaigns to the coverage.

On Tuesday night, President Barack Obama was elected to a second term in office. In the world of technology and political punditry, the big winner is Nate Silver, the New York Times blogger behind FiveThirtyEight. (Break out your dictionaries: a psephologist is a national figure.)

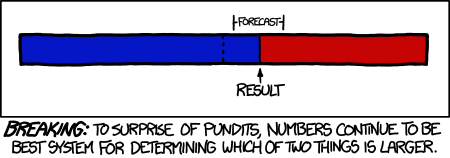

After he correctly called all 50 states, Silver is being celebrated as the “king of the quants” by CNET and the “nerdy Chuck Norris” by Wired. The combined success of statistical models from Silver, TPM PollTracker, HuffPost Pollster, the RealClearPolitics Average and the Princeton Election Consortium makes traditional “horse race journalism,” which uses insider information from the campaign trail to explain what’s really going on, look a bit, well, antiquated. With the rise of political data science, the Guardian even went so far as to say that big data may sound the death knell for punditry.

This election season should serve, in general, as a wake-up call for data-illiterate journalists. It was certainly a triumph of logic over punditry. At this point, it’s fair to “predict” that Silver’s reputation and the role of data analysis will endure long after 2012.

“As of this writing, the only thing that’s ‘razor-thin’ or ‘too close to call’ is the gap between the consensus poll forecast and the result” — Randall Munroe

The data campaign

The other big tech story to emerge from the electoral fray, however, is how the campaigns themselves used technology. What social media was to 2008, data-driven campaigning was to 2012. In the wake of this election, people who understand math, programming and data science will be in even higher demand, as their skills become a strategic advantage in campaigns, from getting out the vote to targeting and persuading voters.

For political scientists and campaign staff, the story of the quants and data crunchers who helped President Obama win will be pored over and analyzed for years to come. For those wondering how the first big data election played out, Sarah Lai Stirland’s analysis of how Obama’s digital infrastructure helped him win re-election is a must-read, as is Nick Judd’s breakdown of former Massachusetts governor Mitt Romney’s digital campaign. The Obama campaign found voters in battleground states that their opponents apparently didn’t know existed. The exit polls suggest that finding and turning out the winning coalition of young people, minorities and women was critical — and data-driven campaigning clearly played a role.

For added insight on the role of data in this campaign, watch O’Reilly Media’s special online conference on big data and elections, from earlier this year. (It’s still quite relevant.)

For more resources and analysis of the growing role of big data in elections and politics, read on.

Charging up: Networking resources and recovery after Hurricane Sandy

In the wake of a devastating storm, here's how you can volunteer to help those affected.

Even though the direct danger from Hurricane Sandy has passed, lower Manhattan and many parts of Connecticut and New Jersey remain a disaster zone, with millions of people still without power, reduced access to food and gas, and widespread damage from flooding. As of yesterday, according to reports from the Wall Street Journal, thousands of residents remain in high-rise buildings with no water, power or heat.

E-government services are in heavy demand, from registering for disaster aid to finding resources, like those offered by the Office of the New York City Public Advocate. People who need to find shelter can use the Red Cross shelter app. FEMA has set up a dedicated landing page for Hurricane Sandy and a direct means to apply for disaster assistance:

If you’ve been affected by #Sandy apply for assistance online at disasterassistance.gov or call 1-800-621-FEMA(3362) #CT #NY #NJ

— FEMA (@fema) November 2, 2012

Public officials have embraced social media during the disaster as never before, sharing information about where to find help.

No power and diminished wireless capacity, however, mean that the Internet is not accessible in many homes. In the post below, learn more about what you can do on the ground to help and how you can contribute online.

NYC’s PLAN to alert citizens to danger during Hurricane Sandy

A mobile alert system put messages where and when they were needed: residents' palms.

Starting at around 8:36 PM ET last night, as Hurricane Sandy began to flood the streets of lower Manhattan, many New Yorkers began to receive an unexpected message: a text alert on their mobile phones that strongly urged them to seek shelter. It showed up on iPhones:

This Emergency Alert just popped up on my phone. Ten seconds later, the TV went out. Here we go. #sandy #ny1sandy twitter.com/mbchp/status/2…

— Mike Beauchamp (@mbchp) October 30, 2012

…and on Android devices:

Emergency alert on my phone. instagr.am/p/RYvlmJxJec/

— Heidi N. Moore (@moorehn) October 30, 2012

While the message was clear enough, the way that these messages ended up on the screens may not have been clear to recipients or observers. And still other New Yorkers were left wondering why emergency alerts weren’t on their phones.

Here’s the explanation: the emergency alerts that went out last night came from New York’s Personal Localized Alerting Network, the “PLAN” the Big Apple launched in late 2011.

NYC chief digital officer Rachel Haot confirmed that the messages New Yorkers received last night were the result of a public-private partnership between the Federal Communications Commission, the Federal Emergency Management Agency, the New York City Office of Emergency Management (OEM), the CTIA and wireless carriers.

While the alerts may look quite similar to text messages, they are delivered over a parallel channel, which lets them get through even when text-message traffic is congested. NYC’s PLAN is the local version of the Commercial Mobile Alert System (CMAS) that has been rolling out nationwide over the last year.

“This new technology could make a tremendous difference during disasters like the recent tornadoes in Alabama where minutes – or even seconds – of extra warning could make the difference between life and death,” said FCC chairman Julius Genachowski, speaking last May in New York City. “And we saw the difference alerting systems can make in Japan, where they have an earthquake early warning system that issued alerts that saved lives.”

NYC was the first city to have it up and running last December, and less than a year later, the alerts showed up where and when they mattered.

Tracking the data storm around Hurricane Sandy

When natural disasters loom, public open government data feeds become critical infrastructure.

Just over fourteen months ago, social, mapping and mobile data told the story of Hurricane Irene. As a larger, more unusual late October storm churns its way up the East Coast, the people in its path are once again acting as sensors and media, creating crisis data as this “Frankenstorm” moves over them.

As citizens look for hurricane information online, government websites are in high demand. In late 2012, media, government, the private sector and citizens all play an important role in sharing information about what’s happening and providing help to one another.

In that context, it’s key to understand that government weather data, gathered and shared from satellites high above the Earth, is what a huge number of infomediaries use to forecast, predict and instruct people about what to expect and what to do. In perhaps the most impressive mashup of social and government data now online, an interactive Google Crisis Map for Hurricane Sandy predicts the course of the “Frankenstorm” in real time, and a NYC-specific version is also available.

If you’re looking for a great example of public data for public good, maps like these and Weather Underground’s interactive map are canonical examples of what’s possible.

What I learned about #debates, social media and being a pundit on Al Jazeera English

Why I'll be turning off the Net and tuning in to the final presidential debate.

Earlier this month, when I was asked by Al Jazeera English if I’d like to go on live television to analyze the online side of the presidential debates, I didn’t immediately accept. I’d be facing a live international audience at a moment of intense political interest, without a great wealth of on-air training. That said, I felt honored to be asked. I’ve been following the network’s steady evolution over the past two decades, from its early beginnings during the first Gulf War to its current position as one of the best sources of live coverage and hard news from the Middle East. When Tahrir Square was at the height of its ferment during the Arab Spring, Al Jazeera was livestreaming it online to the rest of the world.

For months now, I’ve been showing a slide in my presentations that features Al Jazeera’s “The Stream,” which since its inception has been a notable combination of social media, online video and broadcast journalism.

So, by and large, the choice was clear: say “yes,” and then figure out how to do a good job.