John Russell

A million rows isn’t cool. You know what’s cool? A billion rows.

Changing your frame of reference when starting with SQL on Hadoop.

Editor’s note: John Russell will be one of the teachers of the tutorial Getting Started with Interactive SQL-On-Hadoop at Strata + Hadoop World in San Jose. Visit the Strata + Hadoop World website for more information on the program.

If you’re just getting started doing analytic work with SQL on Hadoop, a table with a million rows might seem like a good starting point for experimentation. Isn’t that a lot of data? While you can exercise the features of a traditional database with a million rows, for Hadoop it’s not nearly enough. Think billions of rows instead.

Let’s look at the ways a million-row table falls short. Understanding the data volumes involved with big data can help you avoid going down unproductive pathways based on misleading assumptions.

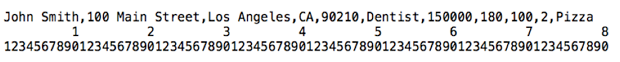

With a million-row table, every byte in each row represents a megabyte of total data volume. Let’s say your table represents people and has fields for name, address, occupation, salary, height, weight, number of children, and favorite food. Here’s what a sample field might look like, with a scale underneath to illustrate length:

This particular record takes up 78 characters, including the comma separators. A back-of-the-envelope calculation suggests that, if this is an average row, we’ll end up with about 78 megabytes of data in the table. (And don’t recycle that envelope just yet — doing analytics with Hadoop, you’ll do a lot of rough estimates like this to sanity-check your expectations about performance and scalability.) Read more…