- Ashley Madison’s Fembot Con (Gizmodo) — As documents from company e-mails now reveal, 80% of first purchases on Ashley Madison were a result of a man trying to contact a bot, or reading a message from one.

- Terrapin — Pinterest’s low-latency NoSQL replacement for HBase. See engineering blog post.

- Why Futurism Has a Cultural Blindspot (Nautilus) — As the psychologist George Loewenstein and colleagues have argued, in a phenomenon they termed “projection bias,” people “tend to exaggerate the degree to which their future tastes will resemble their current tastes.”

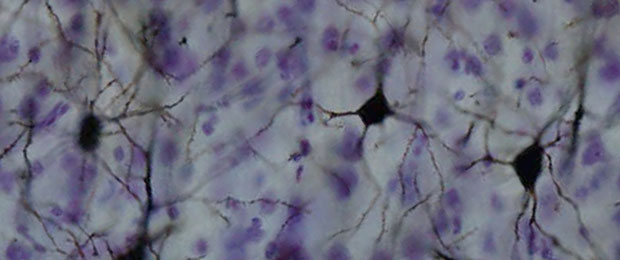

- Mind-Controlled Prosthetic Arm (Quartz) — The robotic arm is connected by wires that link up to the wearer’s motor cortex — the part of the brain that controls muscle movement — and sensory cortex, which identifies tactile sensations when you touch things. The wires from the motor cortex allow the wearer to control the motion of the robot arm, and pressure sensors in the arm that connect back into the sensory cortex give the wearer the sensation that they are touching something.

"Big Data" entries

Big data is changing the face of fashion

How the fashion industry is embracing algorithms, natural language processing, and visual search.

Download Fashioning Data: A 2015 Update, our updated free report exploring data innovations from the fashion industry.

Fashion is an industry that struggles for respect — despite its enormous size globally, it is often viewed as frivolous or unnecessary.

And it’s true: fashion can be spectacularly silly and wildly extraneous. But somewhere between the glitzy, million-dollar runway shows and the ever-shifting hemlines, a very big business can be found. One industry profile of the global textiles, apparel, and luxury goods market reported that fashion had total revenues of $3.05 trillion in 2011 and is projected to reach $3.75 trillion in revenues in 2016.
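The two revenue figures imply a modest growth rate. Treating 2011 to 2016 as five compounding years (an assumption about how the profile's projection was built), the implied compound annual growth rate works out to roughly 4.2 percent:

$$
\text{CAGR} = \left(\frac{3.75}{3.05}\right)^{1/5} - 1 \approx (1.2295)^{0.2} - 1 \approx 0.042
$$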

Solutions for a unique business problem

The majority of clothing purchases are made not out of necessity, but out of a desire for self-expression and identity — two remarkably difficult things to quantify and define. Yet, established brands and startups throughout the industry are finding clever ways to use big data to turn fashion into “bits and bytes,” as much as threads and buttons.

In the newly updated O’Reilly report Fashioning Data: A 2015 Update, Data Innovations from the Fashion Industry, we explore applications of big data that carry lessons for industries of all types. Topics range from predictive algorithms to visual search (capturing structured data from photographs) to natural language processing. Drawing on specific examples from companies with complex lifecycles as well as new startups, the report reveals how different companies are merging human input with machine learning. Read more…

Three best practices for building successful data pipelines

Reproducibility, consistency, and productionizability let data scientists focus on the science.

Building a good data pipeline can be technically tricky. As a data scientist who has worked at Foursquare and Google, I can honestly say that one of our biggest headaches was locking down our Extract, Transform, and Load (ETL) process.


At The Data Incubator, our team has trained more than 100 talented Ph.D. data science fellows who are now data scientists at a wide range of companies, including Capital One, the New York Times, AIG, and Palantir. We commonly hear from Data Incubator alumni and hiring managers that implementing their own ETL pipelines is also one of their biggest challenges.

Drawing on their experiences and my own, I’ve identified three key areas that are often overlooked in data pipelines. They come down to making your analysis:

- Reproducible

- Consistent

- Productionizable

While these areas alone cannot guarantee good data science, getting these three technical aspects of your data pipeline right helps ensure that your data and research results are both reliable and useful to an organization. Read more…
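The three properties can be made concrete with a small sketch. It is an illustration under stated assumptions, not the author's method: the record schema, the field names, and the functions `validate`, `transform`, `fingerprint`, and `run_pipeline` are all hypothetical. Validating against a fixed schema stands in for consistency, a seeded private random generator applied to sorted input stands in for reproducibility, and keeping each step a plain function with explicit parameters is one way to let the same code run from a notebook or a scheduled production job.

```python
import hashlib
import json
import random

# Hypothetical schema: every record must carry exactly these fields and types.
EXPECTED_SCHEMA = {"user_id": int, "amount": float}


def validate(records):
    """Consistency: reject records whose fields or types drift from the schema."""
    for rec in records:
        if set(rec) != set(EXPECTED_SCHEMA):
            raise ValueError(f"unexpected fields: {sorted(rec)}")
        for field, typ in EXPECTED_SCHEMA.items():
            if not isinstance(rec[field], typ):
                raise TypeError(f"{field} should be {typ.__name__}")
    return records


def transform(records, sample_size, seed):
    """Reproducibility: fix the input order, then sample with a seeded private RNG."""
    rng = random.Random(seed)
    ordered = sorted(records, key=lambda r: r["user_id"])
    return rng.sample(ordered, min(sample_size, len(ordered)))


def fingerprint(records):
    """Hash a canonical JSON encoding so two runs can be compared cheaply."""
    blob = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def run_pipeline(raw, sample_size=2, seed=42):
    """Productionizable: one entry point with explicit parameters, no hidden state."""
    clean = validate(raw)
    out = transform(clean, sample_size, seed)
    return out, fingerprint(out)
```

Comparing the fingerprints of two runs is a cheap way to confirm that a rerun on the same input, even one delivered in a different order, really produced the same output.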

Four short links: 15 September 2015

Bot Bucks, Hadoop Database, Futurism Biases, and Tactile Prosthetics

Four short links: 11 September 2015

Wishful CS, Music Big Data, Better Queues, and Data as Liability

- Computer Science Courses that Don’t Exist, But Should (James Hague) — CSCI 3300: Classical Software Studies. Discuss and dissect historically significant products, including VisiCalc, AppleWorks, Robot Odyssey, Zork, and MacPaint. Emphases are on user interface and creativity fostered by hardware limitations.

- Music Science: How Data and Digital Content Are Changing Music — O’Reilly research report on big data and the music industry. Researchers estimate that it takes five seconds to decide if we don’t like a song, but 25 to conclude that we like it.

- The Curse of the First-In First-Out Queue Discipline (PDF) — the research paper behind the “more efficient to serve the last person who joined the queue” newspaper stories going around.

- Data is Not an Asset, It Is a Liability — regardless of the boilerplate in your privacy policy, none of your users have given informed consent to being tracked. Every tracker and beacon script on your website increases the privacy cost they pay for transacting with you, chipping away at the trust in the relationship.

From search to distributed computing to large-scale information extraction

The O'Reilly Data Show Podcast: Mike Cafarella on the early days of Hadoop/HBase and progress in structured data extraction.

Subscribe to the O’Reilly Data Show Podcast to explore the opportunities and techniques driving big data and data science.

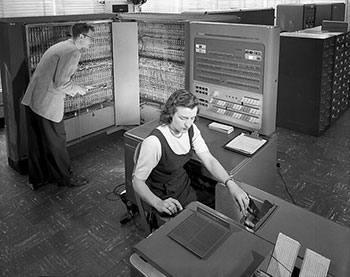

February 2016 marks the 10th anniversary of Hadoop, at a time when many IT organizations actively use Hadoop and/or one of the open source big data projects that originated after it and, in some cases, depend on it.


During the latest episode of the O’Reilly Data Show Podcast, I had an extended conversation with Mike Cafarella, assistant professor of computer science at the University of Michigan. Along with Strata + Hadoop World program chair Doug Cutting, Cafarella is the co-founder of both Hadoop and Nutch. In addition, Cafarella was the first contributor to HBase.

We talked about the origins of Nutch, Hadoop (HDFS, MapReduce), HBase, and his decision to pursue an academic career and step away from these projects. Cafarella’s pioneering contributions to open source search and distributed systems fit neatly with his work in information extraction. We discussed a new startup he recently co-founded, ClearCutAnalytics, to commercialize a highly regarded academic project for structured data extraction (full disclosure: I’m an advisor to ClearCutAnalytics). As I noted in a previous post, information extraction (from a variety of data types and sources) is an exciting area that will lead to the discovery of new features (i.e., variables) that may end up improving many existing machine learning systems. Read more…

The music science trifecta

Digital content, the Internet, and data science have changed the music industry.

Download our new free report “Music Science: How Data and Digital Content are Changing Music,” by Alistair Croll, to learn more about music, data, and music science.

Today’s music industry is the product of three things: digital content, the Internet, and data science. This trifecta has altered how we find, consume, and share music. How we got here makes for an interesting history lesson, and a cautionary tale for incumbents that wait too long to embrace data.

When music labels first began releasing music on compact disc in the early 1980s, it was a windfall for them. Publishers raked in the money as music fans upgraded their entire collections to the new format. However, those companies failed to see the threat to which they were exposing themselves.

Until that point, piracy hadn’t been a concern because copies just weren’t as good as the originals. To make a mixtape using an audio cassette recorder, a fan had to hunch over the radio for hours, finger poised atop the record button — and then copy the tracks stolen from the airwaves onto a new cassette for that special someone. So, the labels didn’t think to build protection into the CD music format. Some companies, such as Sony, controlled both the devices and the music labels, giving them a false belief that they could limit the spread of content in that format.

One reason piracy seemed so far-fetched was that nobody thought of computers as music devices. Apple Computer even promised Apple Records that it would never enter the music industry — and when it finally did, it launched a protracted legal battle that even led coders in Cupertino to label one of the Mac sound effects “Sosumi” (pronounced “so sue me”) as a shot across Apple Records’ legal bow. Read more…

Four short links: 8 September 2015

Serverless Microservers, Data Privacy, NAS Security, and Mobile Advertising

- Microservices Without the Servers (Amazon) — By “serverless,” we mean no explicit infrastructure required, as in: no servers, no deployments onto servers, no installed software of any kind. We’ll use only managed cloud services and a laptop. The diagram below illustrates the high-level components and their connections: a Lambda function as the compute (“backend”) and a mobile app that connects directly to it, plus Amazon API Gateway to provide an HTTP endpoint for a static Amazon S3-hosted website.

- Privacy vs Data Science — claims Apple is having trouble recruiting top-class machine learning talent because of the strict privacy-driven limits on data retention (Siri data: 6 months, Maps: 15 minutes). As a consequence, Apple’s smartphones attempt to crunch a great deal of user data locally rather than in the cloud.

- NAS Backdoors — firmware in some Seagate NAS drives is very vulnerable. It’s unclear whether the backdoors were added by Seagate or came with third-party bundled software. Coming soon to lightbulbs, doors, thermostats, and all your favorite inanimate objects. (via BetaNews)

- Most Consumers Wouldn’t Pay Publishers What It Would Take to Replace Mobile Ad Income — they didn’t talk to this consumer.
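To make the “Lambda function as the compute” piece of the serverless architecture in the first link concrete, here is a minimal Python handler. It assumes API Gateway's Lambda proxy integration, in which the request arrives as an event dictionary with a `queryStringParameters` field and the function returns a dictionary with `statusCode`, `headers`, and a string `body`. The Amazon walkthrough may wire things differently, and the greeting behavior and the `name` parameter are purely hypothetical.

```python
import json


def lambda_handler(event, context):
    """Hypothetical backend: return a JSON greeting for an optional ?name= parameter.

    Assumes API Gateway Lambda proxy integration event and response shapes.
    """
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the function holds no state and touches no server you manage, it can be exercised locally by calling it with a hand-built event, for example `lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)`.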

Showcasing the real-time processing revival

Tools and learning resources for building intelligent, real-time products.

Register for Strata + Hadoop World NYC, which will take place September 29 to October 1, 2015.

A few months ago, I noted the resurgence in interest in large-scale stream-processing tools and real-time applications. Interest remains strong, and if anything, I’ve noticed growth in the number of companies wanting to understand how they can leverage the growing number of tools and learning resources to build intelligent, real-time products.

This is something we’ve observed using many metrics, including product sales, the number of submissions to our conferences, and the traffic to Radar and newsletter articles.

As we looked at putting together the program for Strata + Hadoop World NYC, we were excited to see a large number of compelling proposals on these topics. To that end, I’m pleased to highlight a strong collection of sessions on real-time processing and applications coming up at the event. Read more…

Bridging the divide: Business users and machine learning experts

The O'Reilly Data Show Podcast: Alice Zheng on feature representations, model evaluation, and machine learning models.

Subscribe to the O’Reilly Data Show Podcast to explore the opportunities and techniques driving big data and data science.

As tools for advanced analytics become more accessible, data scientists’ roles will evolve. Most media stories emphasize a need for expertise in algorithms and quantitative techniques (machine learning, statistics, probability), and yet the reality is that expertise in advanced algorithms is just one aspect of industrial data science.


During the latest episode of the O’Reilly Data Show podcast, I sat down with Alice Zheng, one of Strata + Hadoop World’s most popular speakers. She has a gift for explaining complex topics to a broad audience, through presentations and in writing. We talked about her background, techniques for evaluating machine learning models, how much math data scientists need to know, and the art of interacting with business users.

Making machine learning accessible

People who work at getting analytics adopted and deployed learn early on the importance of working with domain/business experts. As excited as I am about the growing number of tools that open up analytics to business users, the interplay between data experts (data scientists, data engineers) and domain experts remains important. In fact, human-in-the-loop systems are being used in many critical data pipelines. Zheng recounts her experience working with business analysts:

It’s not enough to tell someone, “This is done by boosted decision trees, and that’s the best classification algorithm, so just trust me, it works.” As a builder of these applications, you need to understand what the algorithm is doing in order to make it better. As a user who ultimately consumes the results, it can be really frustrating to not understand how they were produced. When we worked with analysts in Windows or in Bing, we were analyzing computer system logs. That’s very difficult for a human being to understand. We definitely had to work with the experts who understood the semantics of the logs in order to make progress. They had to understand what the machine learning algorithms were doing in order to provide useful feedback. Read more…

Data-driven neuroscience

The O'Reilly Radar Podcast: Bradley Voytek on data's role in neuroscience, the brain scanner, and zombie brains in STEM.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s Radar Podcast, O’Reilly’s Mac Slocum chats with Bradley Voytek, an assistant professor of cognitive science and neuroscience at UC San Diego. Voytek talks about using data-driven approaches in his neuroscience work, the brain scanner project, and applying cognitive neuroscience to the zombie brain.

Here are a few snippets from their chat:

In the neurosciences, we’ve got something like three million peer reviewed publications to go through. When I was working on my Ph.D., I was very interested, in particular, in two brain regions. I wanted to know how these two brain regions connect, what are the inputs to them and where do they output to. In my naivety as a Ph.D. student, I had assumed there would be some sort of nice 3D visualization, where I could click on a brain region and see all of its inputs and outputs. Such a thing did not exist — still doesn’t, really. So instead, I ended up spending three or four months of my Ph.D. combing through papers written in the 1970s … and I kept thinking to myself, this is ridiculous, and this just stewed in the back of my mind for a really long time.

Sitting at home [with my wife], I said, I think I’ve figured out how to address this problem I’m working on, which is basically very simple text mining. Let’s just scrape the text of these three million papers, or at least the titles and abstracts, and see what words co-occur frequently together. It was very rudimentary text mining, with the idea that if words co-occur frequently … this might give us an index of how related things are, and she challenged me to a code-off.
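The “very rudimentary text mining” Voytek describes can be sketched in a few lines: for each abstract, find which terms from a vocabulary appear, and count every pair that appears together. This is a hedged illustration, not the code from the code-off; the vocabulary, the sample abstracts, and the `cooccurrence` function are hypothetical, and the simple tokenizer only matches single-word terms.

```python
import re
from collections import Counter
from itertools import combinations


def cooccurrence(abstracts, vocabulary):
    """Count how many abstracts mention each pair of vocabulary terms together."""
    vocab = {term.lower() for term in vocabulary}
    pairs = Counter()
    for text in abstracts:
        # Lowercase and split on anything that is not a letter (single words only).
        words = set(re.findall(r"[a-z]+", text.lower()))
        present = sorted(vocab & words)  # sorted so each pair has one canonical key
        for a, b in combinations(present, 2):
            pairs[(a, b)] += 1
    return pairs


abstracts = [
    "Hippocampus lesions impair memory.",
    "Memory and the striatum.",
    "The hippocampus supports memory consolidation.",
]
counts = cooccurrence(abstracts, ["hippocampus", "memory", "striatum"])
print(counts.most_common(2))
```

Raw counts favor terms that are common everywhere; a more useful index of relatedness would likely normalize each pair count by how often each term appears on its own.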