- CT Scanning and 3D Printing for Paleo (Scientific American) — using CT scanners to identify bones still in rock, then using 3D printers to recreate them. (via BoingBoing)

- Growing the Use of Drones in Agriculture (Forbes) — According to Sue Rosenstock, 3D Robotics spokesperson, a third of their customers consist of hobbyists, another third of enterprise users, and a third use their drones as consumer tools. “Over time, we expect that to change as we make more enterprise-focused products, such as mapping applications,” she explains. (via Chris Anderson)

- Serving 1M Load-Balanced Requests/Second (Google Cloud Platform blog) — 7m from empty project to serving 1M requests/second. I remember when 1 request/second was considered insanely busy. (via Forbes)

- Boil Up — behind the scenes for the design and coding of a real-time simulation for a museum’s science exhibit. (via Courtney Johnston)

"cloud" entries

How did we end up with a centralized Internet for the NSA to mine?

The Internet is naturally decentralized, but it's distorted by business considerations.

I’m sure it was a Wired editor, and not the author Steven Levy, who assigned the title “How the NSA Almost Killed the Internet” to yesterday’s fine article about the pressures on large social networking sites. Whoever chose the title, it’s justifiably grandiose because to many people, yes, companies such as Facebook and Google constitute what they know as the Internet. (The article also discusses threats to divide the Internet infrastructure into national segments, which I’ll touch on later.)

So my question today is: How did we get such industry concentration? Why is a network famously based on distributed processing, routing, and peer connections characterized now by a few choke points that the NSA can skim at its leisure?

Read more…

Security firms must retool as clients move to the cloud

The risk of disintermediation meets a promise of collaboration.

These should be flush times for firms selling security solutions, such as Symantec, McAfee, Trend Micro, and RSA. Front-page news about cyber attacks provides free advertising, and security capabilities swell with new techniques such as security analysis (permit me a plug here for our book Network Security Through Data Analysis). But according to Jane Wright, senior analyst covering security at Technology Business Research, security vendors are faced with an existential threat as clients run their applications in the cloud and rely on their cloud service providers for their security controls.

Read more…

Four short links: 27 November 2013

3D Fossils, Changing Drone Uses, High Scalability, and Sim Redux

Four short links: 29 October 2013

Digital Citizenship, Berg Cloud, Data Warehouse, and The Spying Iron

- Mozilla Web Literacy Standard — things you should be able to do if you’re to be trusted to be on the web unsupervised. (via BoingBoing)

- Berg Cloud Platform — hardware (shield), local network, and cloud glue. Caution: magic ahead!

- Shark — a large-scale data warehouse system for Spark designed to be compatible with Apache Hive. It can execute Hive QL queries up to 100 times faster than Hive without any modification to the existing data or queries. Shark supports Hive’s query language, metastore, serialization formats, and user-defined functions, providing seamless integration with existing Hive deployments and a familiar, more powerful option for new ones. (via Strata)

- The Malware of Things — a technician opening up an iron included in a batch of Chinese imports to find a “spy chip” with what he called “a little microphone”. Its correspondent said the hidden devices were mostly being used to spread viruses, by connecting to any computer within a 200m (656ft) radius which were using unprotected Wi-Fi networks.

Four short links: 12 September 2013

PaaS Vendors, Educational MMO, Changing Culture, Data Mythologies

- Amazon Compute Numbers (ReadWrite) — AWS offers five times the utilized compute capacity of its other 14 top competitors combined. (via Matt Asay)

- MIT Educational MMO — The initial phase will cover topics in biology, algebra, geometry, probability, and statistics, providing students with a collaborative, social experience in a systems-based game world where they can explore how the world works and discover important scientific concepts. (via KQED)

- Changing Norms (Atul Gawande) — neither penalties nor incentives achieve what we’re really after: a system and a culture where X is what people do, day in and day out, even when no one is watching. “You must” rewards mere compliance. Getting to “X is what we do” means establishing X as the norm.

- The Mythologies of Big Data (YouTube) — Kate Crawford at UC Berkeley iSchool. The six myths: ‘Big data are new’, ‘Big data is objective’, ‘Big data don’t discriminate’, ‘Big data makes cities smart’, ‘Big data is anonymous’, ‘You can opt out of big data’. (via Sam Kinsley)

Four short links: 28 August 2013

Cloud Orchestration, Cultural Heritage, Student Hackers, and Visual JavaScript

- Juju — Canonical’s cloud orchestration software, intended to be a peer of chef and puppet. (via svrn)

- Cultural Heritage Symbols — workshopped icons to indicate interactives, big data, makerspaces, etc. (via Courtney Johnston)

- Quinn Norton: Students as Hackers (EdTalks) — if you really want to understand the future, don’t look at how people are looking at technology, look at how they are misusing technology.

- noflo.js — visual flow controls for JavaScript.

Tesla Model S REST API Authentication Flaws

As many of you know, APIs matter to me. I have lightbulbs that have APIs. Two months ago, I bought a car that has an API: The Tesla Model S.

For the most part, people use the Tesla REST API via the iPhone and Android mobile apps. The apps enable you to do any of the following:

- Check on the state of battery charge

- Muck with the climate control

- Muck with the panoramic sunroof

- Identify where the hell your car is and what it’s doing

- Honk the horn

- Open the charge port

- Change a variety of car configuration settings

- More stuff of a similar nature

For the purposes of this article, it’s important to note that there’s nothing in the API that (can? should?) result in an accident if someone malicious were to gain access. Having said that, there is enough here to do some economic damage both in terms of excess electrical usage and forcing excess wear on batteries.

Will Developers Move to Sputnik?

The past, present, and future of Dell's project

Barton George (@barton808) is the Director of Development Programs at Dell, and the lead on Project Sputnik—Dell’s Ubuntu-based developer laptop (and its accompanying software). He sat down with me at OSCON to talk about what’s happened in the past year since OSCON 2012, and why he thinks Sputnik has a real chance at attracting developers.

Key highlights include:

- The developers that make up Sputnik’s ideal audience [Discussed at 1:00]

- The top three reasons you should try Sputnik [Discussed at 2:46]

- What Barton hopes to be talking about in 2014 [Discussed at 4:36]

- The key to building a community is documentation [Discussed at 5:20]

You can view the full interview here:

The demise of Google Reader: Stability as a service

How can we commit to Google's platform when its services flicker in and out of existence?

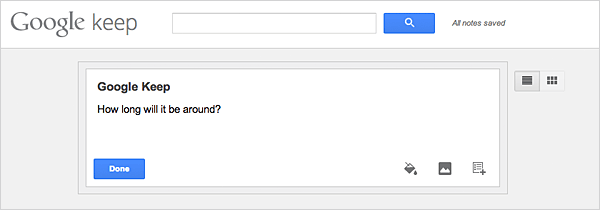

Om Malik’s brief post on the demise of Google Reader raises a good point: If we can’t trust Google to keep successful applications around, why should we bother trying to use their new applications, such as Google Keep?

Given the timing, the name is ironic. I’d definitely like an application similar to Evernote, but with search that actually worked well; I trust Google on search. But why should I use Keep if the chances are that Google is going to drop it a year or two from now?

In the larger scheme of things, Keep is small potatoes. Google is injuring themselves in ways that are potentially much more serious than the success or failure of one app. Google is working on the most ambitious re-envisioning of computing since the beginning of the PC era: moving absolutely everything to the cloud. Minimal local storage; local disk drives, whether solid state or rust-based, are the problem, not the solution. Projects like Google Fiber show that they’re interested in seeing that people have enough bandwidth so that they can get at their cloud storage fast enough so that they don’t notice that it isn’t local.

It’s a breath-taking vision, on many levels: I should be able to have access to all of my work, regardless of the device I’m using or where it’s located. A mobile phone shouldn’t be any different from a desktop. I may not want to write software on a mobile phone (I can’t imagine coding on those tiny touch keyboards), but I should be able to if I want to. And I should definitely be able to take a laptop into the hills and work transparently over a 4G network. Read more…

LISA mixes the ancient and modern: report from USENIX system administration conference

System administrators try to maintain reliability and other virtues while adopting cost-cutting innovations

I came to LISA, the classic USENIX conference, to find out this year who was using such advanced techniques as cloud computing, continuous integration, non-relational databases, and IPv6. I found lots of evidence of those technologies in action, but also had the bracing experience of getting stuck in a talk with dozens of Solaris fans.

Such is the confluence of old and new at LISA. I also heard of the continued relevance of magnetic tape–its storage costs are orders of magnitude below those of disks–and of new developments on NFS. Think of NFS as a protocol, not a filesystem: it can now connect many different filesystems, including the favorites of modern distributed system users.

LISA, and the USENIX organization that valiantly unveils it each year, are communities at least as resilient as the systems that their adherents spend their lives keeping humming. Familiar speakers return each year. Members crowd a conference room in the evening to pepper the staff with questions about organizational issues. Attendees exchange their t-shirts for tuxes to attend a three-hour reception aboard a boat on the San Diego harbor, which this time was experiencing unseasonably brisk weather. (Full disclosure: I skipped the reception and wrote this article instead.) Let no one claim that computer administrators are anti-social.

Again in the spirit of full disclosure, let me admit that I perform several key operations on a Solaris system. When it goes away (which someday it will), I’ll have to alter some workflows.

The continued resilience of LISA

Conferences, like books, have a hard go of it in the age of instant online information. I wasn’t around in the days when people would attend conferences to exchange magnetic tapes with their free software, but I remember the days when companies would plan their releases to occur on the first day of a conference and would make major announcements there. The tradition of using conferences to propel technical innovation is not dead; for instance, OpenStack was announced at an O’Reilly Open Source convention.

But as pointed out by Thomas Limoncelli, an O’Reilly author (Time Management for System Administrators) and a very popular LISA speaker, the Internet has altered the equation for product announcements in two profound ways. First of all, companies and open source projects can achieve notoriety in other ways without leveraging conferences. Second, and more subtly, the philosophy of “release early, release often” launches new features multiple times a year and reduces the impact of major versions. The conferences need a different justification.

Limoncelli says that LISA has survived by getting known as the place you can get training that you can get nowhere else. “You can learn about a tool from the person who created the tool,” he says. Indeed, at the BOFs it was impressive to hear the creator of a major open source tool reveal his plans for a major overhaul that would permit plugin modules. It was sobering though to hear him complain about a lack of funds to do the job, and discuss with the audience some options for getting financial support.

LISA is not only a conference for the recognized stars of computing, but a place to show off students who can create a complete user administration interface in their spare time, or design a generalized extension of common Unix tools (grep, diff, and so forth) that work on structured blocks of text instead of individual lines.
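To make the idea of block-oriented tools concrete, here is a minimal Python sketch of a grep that returns whole blocks rather than single lines. It is not the student's tool, whose design is not described here: the blank-line block delimiter, the function names `split_blocks` and `block_grep`, and the sample data are all assumptions made for illustration.

```python
import re
from typing import List


def split_blocks(text: str) -> List[str]:
    """Split text into blocks separated by one or more blank lines (assumed delimiter)."""
    blocks = re.split(r"\n\s*\n", text.strip())
    return [b for b in blocks if b.strip()]


def block_grep(pattern: str, text: str) -> List[str]:
    """Return every whole block in which the pattern matches at least once."""
    regex = re.compile(pattern)
    return [block for block in split_blocks(text) if regex.search(block)]


# Hypothetical sample input: three host stanzas separated by blank lines.
SAMPLE = """\
host alpha
  ip 10.0.0.1
  role web

host beta
  ip 10.0.0.2
  role db

host gamma
  ip 10.0.0.3
  role web
"""

# A line-oriented grep would print only the "role web" lines;
# here each match brings its entire stanza along.
matches = block_grep(r"role web", SAMPLE)
for block in matches:
    print(block)
    print("--")
```

A real tool would presumably let the user define what counts as a block (indentation, braces, record markers); the blank-line rule is just the simplest choice for a sketch.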

Another long-time attendee told me that companies don’t expect anyone here to whip out a checkbook in the exhibition hall, but they still come. They have a valuable chance at LISA to talk to people who don’t have direct purchasing authority but possess the technical expertise to explain to their bosses the importance of new products. LISA is also a place where people can delve as deep as they please into technical discussions of products.

I noticed good attendance at vendor-sponsored Birds-of-a-Feather sessions, even those lacking beer. For instance, two Ceph staff signed up for a BOF at 10 in the evening, and were surprised to see over 30 attendees. It was in my mind a perfect BOF. The audience talked more than the speakers, and the speakers asked questions as well as delivering answers.

But many BOFs didn’t fit the casual format I used to know. Often, the leader turned up with a full set of slides and took up a full hour going through a list of new features. There were still audience comments, but no more than at a conference session.

Memorable keynotes

One undeniable highlight of LISA was the keynote by Internet pioneer Vint Cerf. After years in Washington, DC, Cerf took visible pleasure in geeking out with people who could understand the technical implications of the movements he likes to track. His talk ranged from the depth of his wine cellar (which he is gradually outfitting with sensors for quality and security) to interplanetary travel.

The early part of his talk danced over general topics that I think were already adequately understood by his audience, such as the value of DNSSEC. But he often raised useful issues for further consideration, such as who will manage the billions of devices that will be attached to the Internet over the next few years. It can be useful to delegate read access and even write access (to change device state) to a third party when the device owner is unavailable. In trying to imagine a model for sets of devices, Cerf suggested the familiar Internet concept of an autonomous system, which obviously has scaled well and allowed us to distinguish routers running different protocols.

The smart grid (for electricity) is another concern of Cerf’s. While he acknowledged known issues of security and privacy, he suggested that the biggest problem will be the classic problem of coordinated distributed systems. In an environment where individual homes come and go off the grid, adding energy to it along with removing energy, it will be hard to predict what people need and produce just the right amount at any time. One strategy involves microgrids: letting neighborhoods manage their own energy needs to avoid letting failures cascade through a large geographic area.

Cerf did not omit to warn us of the current stumbling efforts in the UN to institute more governance for the Internet. He acknowledged that abuse of the Internet is a problem, but said the ITU needs an “excuse to continue” as radio, TV, etc. migrate to the Internet and the ITU’s standards see decreasing relevance.

Cerf also touted the Digital Vellum project for the preservation of data and software. He suggested that we need a legal framework that would require software developers to provide enough information for people to continue getting access to their own documents as old formats and software are replaced. “If we don’t do this,” he warned, “our 22nd-century descendants won’t know much about us.”

Talking about OpenFlow and Software Defined Networking, he found its most exciting opportunity is to let us use content to direct network traffic in addition to, or instead of, addresses.

Another fine keynote was delivered by Matt Blaze on a project he and colleagues conducted to assess the security of the P25 mobile systems used everywhere by security forces, including local police and fire departments, soldiers in the field, FBI and CIA staff conducting surveillance, and executive bodyguards. Ironically, there are so many problems with these communication systems that the talk was disappointing.

I should in no way diminish the intelligence and care invested by these researchers from the University of Pennsylvania. It’s just that the history of P25 makes security lapses seem inevitable. Because it was old, and was designed to accommodate devices that were even older, it failed to implement basic technologies such as asymmetric encryption that we now take for granted. Furthermore, most of the users of these devices are more concerned with getting messages to their intended destinations (so that personnel can respond to an emergency) than with preventing potential enemies from gaining access. Putting all this together, instead of saying “What impressive research,” we tend to say, “What else would you expect?”

Random insights

Attendees certainly had their choice of virtualization and cloud solutions at the conference. A very basic introduction to OpenStack was offered, along with another by developers of CloudStack. Although the latter is older and more settled, it is losing the battle of mindshare. One developer explained that CloudStack has a smaller scope than OpenStack, because CloudStack is focused on high-computing environments. However, he claimed, CloudStack works on really huge deployments where he hasn’t seen other successful solutions. Yet another open source virtualization platform presented was Google’s Ganeti.

I also attended talks and had chats with developers working on the latest generation of data stores: massive distributed file systems like Hadoop’s HDFS, and high-performance tools such as HBase and Impala, for accessing the data it stores. There seems to be an accordion effect in data stores: developers start with simple flat or key-value structures. Then they find the need over time–depending on their particular applications–for more hierarchy or delimited data, and either make their data stores more heavyweight or jerry-rig the structure through conventions such as defining fields for certain purposes. Finally we’re back at something mimicking the features of a relational database, and someone rebels and starts another bare-bones project.
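The middle of that accordion, where structure is jerry-rigged through conventions, can be sketched in a few lines of Python. This is an illustration only, not code from any project mentioned at the conference: the `user:<id>:<field>` key convention and the helper names `put_field`, `get_record`, and `find_by_field` are assumptions invented for the example.

```python
from typing import Dict, List

# A bare key-value store: a flat mapping from string keys to string values.
store: Dict[str, str] = {}


def put_field(record_id: str, field: str, value: str) -> None:
    """Emulate a row with columns by encoding record and field into one key."""
    store[f"user:{record_id}:{field}"] = value


def get_record(record_id: str) -> Dict[str, str]:
    """Rebuild a record by scanning for keys that share the agreed-upon prefix."""
    prefix = f"user:{record_id}:"
    return {k[len(prefix):]: v for k, v in store.items() if k.startswith(prefix)}


def find_by_field(field: str, value: str) -> List[str]:
    """A hand-rolled 'WHERE field = value': a full scan, with no index to help."""
    suffix = f":{field}"
    return sorted(
        k.split(":")[1] for k, v in store.items() if k.endswith(suffix) and v == value
    )


put_field("1", "name", "Ada")
put_field("1", "city", "London")
put_field("2", "name", "Grace")
put_field("2", "city", "London")

record = get_record("1")
londoners = find_by_field("city", "London")
print(record)
print(londoners)
```

Each helper reimplements, by naming convention alone, something a relational database provides natively (rows, columns, selection), which is roughly how a bare-bones store drifts back toward one.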

One such developer told me he hoped his project never turns into a behemoth like CORBA or (lamentably) what WS-* specifications seem to have wrought.

CORBA is universally recognized as dead–perhaps stillborn, because I never heard of major systems deployed in production. In fact, I never knew of an implementation that caught up with the constant new layers of complexity thrown on by the standards committee.

In contrast, WS-* specifications teeter on the edge of acceptability, as a number of organizations swear by them.

I pointed out to my colleague that most modern cloud or PC systems are unlikely to suffer from the weight of CORBA or WS-*, because the latter two systems were created for environments without trust. They were meant to tie together organizations with conflicting goals, and were designed by consortia of large vendors jockeying for market share. For both of these reasons, they have to negotiate all sorts of parameters and add many assurances to every communication.

Recently we’ve seen an increase of interest in functional programming. It occurred to me this week that many aspects of functional programming go nicely with virtualization and the cloud. When you write code with no side effects and no global state, you can recover more easily when instances of your servers disappear. It’s fascinating to see how technologies coming from many different places push each other forward–and sometimes hold each other back.
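One way to picture the link between side-effect-free code and disappearing servers is a pure state-transition function replayed over a durable log. The Python sketch below is a toy under stated assumptions, not a description of any particular system: the `Event` type, the account-balance domain, and the names `apply_event` and `replay` are all hypothetical.

```python
from functools import reduce
from typing import List, NamedTuple, Tuple


class Event(NamedTuple):
    account: str
    amount: int


# State is an immutable tuple of (account, balance) pairs, sorted by account.
State = Tuple[Tuple[str, int], ...]


def apply_event(state: State, event: Event) -> State:
    """Pure transition: returns a new state and never mutates its inputs."""
    balances = dict(state)
    balances[event.account] = balances.get(event.account, 0) + event.amount
    return tuple(sorted(balances.items()))


def replay(log: List[Event]) -> State:
    """Rebuild state from scratch by folding the transition over the log."""
    return reduce(apply_event, log, ())


# The log stands in for durable storage that outlives any single server.
log = [Event("alice", 50), Event("bob", 20), Event("alice", -10)]

# "Server A" processes the first two events and then disappears.
state_a = replay(log[:2])

# "Server B" starts with nothing but the log and rebuilds the full state.
state_b = replay(log)

# Resuming from A's last known state gives the same answer as B's full replay.
resumed = apply_event(state_a, log[2])
print(state_b)
print(resumed == state_b)
```

Because `apply_event` depends only on its arguments, it does not matter which instance runs it or how many times the log is replayed; nothing is lost when an instance goes away except work that can be redone.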