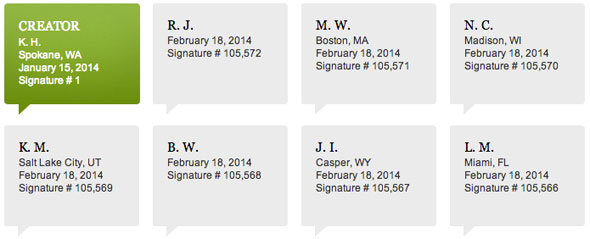

Screen shot of signatures from a “Common Carrier” petition to the White House.

It was the million comments filed at the FCC that dragged me out of the silence I’ve maintained for several years on the slippery controversy known as “network neutrality.” The issue even came up during President Obama’s address to the recent U.S.-Africa Business Forum.

Most people who latch on to the term “network neutrality” (which was never favored by the experts I’ve worked with over the years to promote competitive Internet service) don’t know the history that brought the Internet to its current state. Without this background, proposed policy changes will be ineffective. So, I’ll try to fill in some pieces that help explain the complex cans of worms opened by the idea of network neutrality.

From dial-up to fiber

Throughout the 1980s and 1990s, the chief concerns of Internet policy makers were, first, to bring basic network access to masses of people, and second, to achieve enough bandwidth for dazzling advances such as Voice over IP (occasionally we even managed interactive video). Bandwidth constraints were a constant concern, and many advances in compression and protocols made the most of limited access. Many applications, such as FTP and rsync, also included restart options so that a file transfer could pick up where it left off when the dial-up line failed and then came back up.

Because there were always more ways to use the network than the available bandwidth allowed (in other words, networks were oversubscribed), we all understood that network administrators at every level, from the local campus to the Tier 1 provider, would throttle traffic to make the network fair to all users. After all, what is the purpose of TCP's windowing and congestion control, which lie at the center of the main protocol that carries traffic on the Internet, if not to share limited capacity among competing senders?
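To make the idea of throttling concrete, here is a minimal sketch of a token bucket, one widely used way to cap a flow's average rate while still allowing short bursts. It is only an illustration: the article does not say which mechanism any particular administrator used, and the class name, parameters, and numbers are all hypothetical.

```python
class TokenBucket:
    """Toy traffic shaper: a flow may send only while it holds enough tokens.

    Hypothetical illustration; rates and sizes are invented for the example.
    """

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float) -> None:
        self.rate = rate_bytes_per_s      # long-term average rate allowed
        self.capacity = burst_bytes       # largest burst allowed at once
        self.tokens = burst_bytes         # start with a full bucket
        self.last = 0.0                   # time of the previous check, in seconds

    def allow(self, packet_bytes: int, now: float) -> bool:
        """Return True if a packet of this size may be sent at time `now`."""
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never above the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False                      # caller would queue or drop the packet


bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
first = bucket.allow(1500, now=0.0)    # full bucket, so the packet goes out
second = bucket.allow(1500, now=0.5)   # only 500 bytes refilled so far
third = bucket.allow(1500, now=1.5)    # a further 1000 bytes refilled
```

Time is passed in explicitly so the example is deterministic; a real shaper would read a clock and would usually queue the refused packet rather than simply report it.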

There’s nothing new about complaints from Internet services about uneven access. When Tier 1 Internet providers stopped peering and started charging smaller providers for access, the outcry was long and loud (I wrote about it in December 2005) — but the Internet survived.

The hope that more competition would lead to more bandwidth at reasonable prices drove the Telecommunications Act of 1996. And in places with robust telecom competition — economically advanced countries in Europe and East Asia — people get much faster speeds than U.S. residents, at decent prices, with few worries about discrimination against content providers. A long history of obstruction and legal maneuvering has kept the U.S. down to one high-speed networking carrier in most markets.

Many people got complacent when they obtained always-on, high-speed connections. But new network capabilities always lead to more user demand, just as widening highways tends to lead to more congestion. Soon the peer-to-peer file sharing phenomenon pumped up the energy of Internet policy discussions again.

Moral outrage gets injected into Internet policy debates

I remember a sense of pessimism setting in among Internet public policy advocates around the year 2000, as the cable and telephone companies carved up the Internet market and effectively excluded most of their competitors. But thanks to the competition between these two industries, at least, most Americans had always-on connections, even if many of them were ADSL. And that led to the next set of Internet infrastructure wars.

A lot of the new always-on Internet users, who had been making cassette tapes for friends and sharing music offline, decided they wanted music on their computers and started using the Internet to exchange files. The growth of peer-to-peer services can be attributed partly to the desire to evade copyright holders, but it had solid technical motivations as well.

Millions of downloads would put a great strain on servers. (Nobody outside Google knows how much it invests in YouTube servers, but the cost must be huge.) When a hot new item hits the Internet, the only way to efficiently disseminate it is to make each user share some upload bandwidth along with download bandwidth. Peer-to-peer systems like BitTorrent use modestly more total bandwidth than central servers distributing the same content, but they allow small Internet users to provide popular material without being overwhelmed by downloads.
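A back-of-the-envelope sketch shows why the trade-off can favor peer-to-peer for a small publisher. The model and every number in it are assumptions chosen purely for illustration (the file size, the download count, the fraction of data re-uploaded by peers, and the protocol overhead); none of them come from measurements.

```python
def server_upload_gb(file_gb: float, downloads: int,
                     peer_share_ratio: float = 0.0,
                     overhead: float = 0.0) -> float:
    """Estimate how much data the original publisher must upload.

    peer_share_ratio: fraction of all delivered data that peers upload
                      to one another (0.0 means a purely central server).
    overhead:         extra traffic added by the peer-to-peer protocol,
                      as a fraction of the payload.
    Both parameters are hypothetical knobs, not measured values.
    """
    total = file_gb * downloads * (1 + overhead)       # all bytes delivered
    from_peers = file_gb * downloads * peer_share_ratio
    # The publisher must always seed at least one full copy.
    return max(total - from_peers, file_gb)


central = server_upload_gb(1.0, 1_000_000)
swarm = server_upload_gb(1.0, 1_000_000, peer_share_ratio=0.95, overhead=0.05)
print(f"central server: {central:,.0f} GB; with peers: {swarm:,.0f} GB")
```

Under these invented figures the network as a whole carries about 5% more traffic, but the publisher's own share falls by roughly a factor of ten, which is the point the paragraph above makes.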

And peer-to-peer has substantial non-infringing uses, generally ignored by vigilante bandwidth throttlers. On old networks, software developers had to follow a complicated procedure requiring technical mastery in order to work on free software. A developer fixing a bug or adding an enhancement would isolate the lines of code he or she changed in a file. Other developers would download that tiny file, update their versions of the source code using the patch program, recompile, and reinstall. Peer-to-peer services made it convenient to just download a whole new binary and compare checksums to make sure it had not become corrupted.
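The checksum comparison mentioned above can be sketched in a few lines. This is a minimal example that assumes the project publishes a SHA-256 digest next to the download; the article does not say which hash any project used, and the function names are hypothetical.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so a large binary need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_intact(path: str, published_hex: str) -> bool:
    """Compare the local file's digest with the one the project published."""
    return sha256_of(path) == published_hex.strip().lower()
```

A downloader would call `is_intact("release.bin", digest_from_project_site)` and discard the file on a mismatch; both the file name and the variable here are placeholders.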

I don’t have to go over the agonizing history of the sudden worldwide mania for peer-to-peer file sharing or the alarmed reactions of music and movie studios. Suffice it to say that traffic shaping, which had always been key to fair network service, got buffeted from all directions by an unpropitious moral zeal. Some network neutrality advocates distrust all application-specific discrimination, and turgid debates arise over what is “legitimate.” Many in the network neutrality movement were even angry at the FCC in 2010 when it created different rules for mobile and fixed networks, even though the bandwidth constraints on mobile networks are obvious.

Still, given that the music and movie industries have started to respond to consumer demand and offer legal streaming, peer-to-peer file sharing may no longer be a significant percentage of traffic that carriers have to worry about. Instead of Napster, they argue over Netflix. But the histrionic appeals that characterized both sides of the peer-to-peer debates — those who defended peer-to-peer, and those who deplored it — continued into the network neutrality controversy.

All other things being unequal

Many advocates who’ve latched onto the network neutrality idea suggest that up to now we’ve enjoyed some kind of golden age where anyone in a garage can compete with Internet giants. Things aren’t so simple.

Prices have always been different for different Internet users because some places have fewer competitors, some places are more difficult to string wires through, and so on. Different service is also available for cold cash, so large users have routinely leased their own lines over the years or paid extra for routing over faster lines. Software traffic shaping is a simple technological extension, similar to the use of virtual circuits and software-defined networking technologies elsewhere, to discriminate among customers willing to pay.

Michael Powell, as chair of the FCC, famously cited different levels of access when he was asked about the digital divide and pointed out that he was on the wrong side of a Mercedes divide. It might not have been smart for an FCC chair to compare the information highway — which was already clearly becoming an economic, educational, and democratic necessity — with a luxury consumer item, but his essential point was correct.

Network access is only one of many resources innovators need in any case. Suppose I cooked up a service that could out-Google Google — one that could instantly deliver the exact information for which people were searching in a privacy-preserving manner — but dang it, I just lacked 18 million CPUs to run it on. Where’s the neutrality?

The fact is that innovators — particularly in developing regions of the world, where more and more innovation is likely to arise — have always, always suffered from resource constraints that don’t bedevil large incumbents. The key trick of innovation is to handle resource constraints and turn them into positives, as the early Internet users I mentioned at the beginning of this article did. I don’t see why network speed and cost should face policy decisions any different from physical resources, access to technical and marketing expertise, geographical location, and other distinctions between people.

One proposed fix: common carrier status

Net neutrality advocates have also seized on what they consider a simple fix: applying the legal regime known as Title II (because of its place in the law) so that Internet carriers are regulated like letter carriers or railroads. One attraction of this solution is that it requires action only by the FCC, not by Congress.

As I have pointed out, the evolution of the current regime followed a historical logic that would be hard to unravel. If 99% of those one million comments (plus even more that are certain to roll in before the deadline) ask the FCC to reverse itself, it may do so. Congress could well reverse the reversal. But Title II contains booby traps as well: because networks can’t treat all packets the same way, mandating them to do so will lead to endless technical arguments.

Freedom? Or just economics?

Telephone and cable company managers are not blind to what’s going on. They have seen their services reduced to a commodity, while on the one side content producers continue to charge high fees and on the other side a handful of prominent Internet companies make headlines over stunning deals. (Of course, few companies can be WhatsApp.) The telephone companies spend days and nights plotting how to bring some of that luscious revenue into their own coffers.

Their answer, of course, is through oligopoly market power rather than through real innovation. That’s why I focused this article on competition back at the start. The telephone companies want a direct line into those who build successful businesses on top of telco services, and they want those companies to cough up a higher share of the revenues. It’s the same strategy pursued by Walmart when it forces down prices among its suppliers or by Amazon.com when it demands a higher share of publisher profits.

Tim O’Reilly, with whom I discussed this article, points out that the public is unable to determine what fair compensation is for the carriers because we aren’t privy to the actual costs of providing service. And those costs are not just the costs for providing Internet infrastructure.

O’Reilly asks, “What are the costs, for example, of providing Netflix versus television? Which one is driving subscriber growth and ARPU? What is the real cost of the incremental infrastructure investment needed to provide high bandwidth services versus the prior cost of paying for content? How does this show up in the returns the telcos and cable companies are already getting?”

While we are not privy to these cost numbers, he indicates that a quick look at the public financial reports of Comcast and Netflix provides some useful insight. If you look at the annual view of the data, you’ll see that in the four years from 2010 through 2013, Netflix’s revenues doubled (and with them, presumably, their demands on the network doubled as well, perhaps even more, as a much larger proportion of Netflix revenue is likely driven by streaming today than it was in 2010). In that period, Netflix’s pre-tax income dropped from about 12% of revenue to about 4%. In the same time period, Comcast’s revenue also nearly doubled (partly as a result of acquisition), and their profit margin rose from 16% to 17%. It’s pretty clear that the rise of Netflix streaming has not had a negative impact on Comcast profits!

In short, is being a commodity provider really such a bad deal? Do Internet infrastructure providers really need to squeeze Internet content companies in order to fund their investments? While this level of analysis is at best indicative, and should not be applied naively to policy decisions, it suggests a productive avenue for further research.

O’Reilly also points out that customers are paying the carriers to provide Internet service, and to them that service consists of access to sites like Facebook, YouTube, and, yes, Netflix. When the carriers ask the content providers to pay them as well, not only are they double-dipping, but they are conveniently forgetting to mention that in the “good old days,” cable companies actually paid for the content they delivered to customers. Aren’t they actually getting a free ride when people spend their Internet time watching YouTube or Netflix instead?

So, at bottom, the moral rallying cry behind network neutrality offers a muddled way to discuss an economic battle between big companies as to how they will allocate profits between themselves.

Taking the middle road

Some net neutrality experts make no secret of their desire to drive the telephone companies and cable companies out of business or reduce them to regulated utilities. The problem with abandoning infrastructure to the status of a given is that innovation in wires and cell towers is by no means finished. I looked at the economic aspects of telecom infrastructure in my article “Who will upgrade the telecom foundation of the Internet?”

We still have recourse to preserve the open Internet without network neutrality:

- The FCC and FTC can still intervene in anti-competitive or discriminatory behavior — especially during mergers, but also in the daily course of activity. The FCC showed its willingness to play cop when Comcast was caught throttling BitTorrent traffic.

- The government can sponsor initiatives to overcome the digital divide. Foremost among these are municipal networks, which have worked well in many communities (and are under constant political attack by private carriers). There is certainly no reason that Internet service needs to be provided by the purveyors of voice or video applications, just because those companies had lines strung first. On the other hand, Google can’t fund universal access. And even municipalities can’t create all the long-haul lines that connect them, so they are not a complete solution.

- Innovators could be trained and encouraged in economically underdeveloped areas where adaptive solutions to low bandwidth and unreliable connections are crucial. We will all be better off as clever ways are found to use our resources more efficiently. For instance, we can’t expect the wireless spectrum to support all the uses we want to make of it if we’re wasteful.

I feel that network neutrality as a (vague) concept took hold of an important ongoing technical, social, and economic discussion and rechanneled it along ineffective lines. As this article has shown, technology as well as popular usage has continuously changed at the content or application level, and there are many forms of centralization I’d worry about before traffic shaping.

Furthermore, we should not give up on hopes for more competition in infrastructure. If that competition arrives, we could all have the equivalent of a Mercedes on the information highway.

Coax photo on home page by Michael Mol, used under a Creative Commons license.