FEATURED STORY

Big data's big ideas

From cognitive augmentation to artificial intelligence, here's a look at the major forces shaping the data world.

Using Apache Spark to predict attack vectors among billions of users and trillions of events

The O’Reilly Data Show podcast: Fang Yu on data science in security, unsupervised learning, and Apache Spark.

Subscribe to the O’Reilly Data Show Podcast to explore the opportunities and techniques driving big data and data science: Stitcher, TuneIn, iTunes, SoundCloud, RSS.

In this episode of the O’Reilly Data Show, I spoke with Fang Yu, co-founder and CTO of DataVisor. We discussed her days as a researcher at Microsoft, the application of data science and distributed computing to security, and hiring and training data scientists and engineers for the security domain.

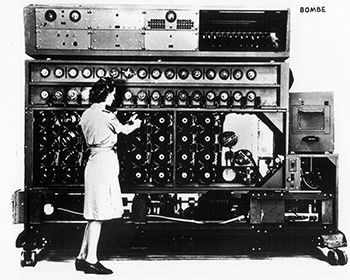

DataVisor is a startup that uses data science and big data to detect fraud and malicious users across many different application domains in the U.S. and China. Founded by security researchers from Microsoft, the startup has developed large-scale unsupervised algorithms on top of Apache Spark to (as Yu notes in our chat) “predict attack vectors early among billions of users and trillions of events.”

Several years ago, I found myself immersed in the security space. At that time, tools that employed machine learning and big data were still rare. More recently, with the rise of tools like Apache Spark and Apache Kafka, I’m coming across many more security professionals who incorporate large-scale machine learning and distributed systems into their software platforms and consulting practices.

Building a business that combines human experts and data science

The O’Reilly Data Show podcast: Eric Colson on algorithms, human computation, and building data science teams.

In this episode of the O’Reilly Data Show, I spoke with Eric Colson, chief algorithms officer at Stitch Fix and former VP of data science and engineering at Netflix. We talked about building and deploying mission-critical, human-in-the-loop systems for consumer Internet companies. Knowing that many companies are grappling with incorporating data science, I also asked Colson to share his experiences building, managing, and nurturing large data science teams at both Netflix and Stitch Fix.

Augmented systems: “Active learning,” “human-in-the-loop,” and “human computation”

We use the term ‘human computation’ at Stitch Fix. We have a team dedicated to human computation. It’s a little bit coarse to say it that way because we do have more than 2,000 stylists, and these are very much human beings that are very passionate about fashion styling. What we can do is, we can abstract their talent into—you can think of it like an API; there’s certain tasks that only a human can do or we’re going to fail if we try this with machines, so we almost have programmatic access to human talent. We are allowed to route certain tasks to them, things that we could never get done with machines. … We have some of our own proprietary software that blends together two resources: machine learning and expert human judgment. The way I talk about it is, we have an algorithm that’s distributed across the resources. It’s a single algorithm, but it does some of the work through machine resources, and other parts of the work get done through humans.
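To make the "single algorithm distributed across machine and human resources" idea concrete, here is a minimal sketch in Python. It is not Stitch Fix's proprietary software. The `HybridRouter` class, the confidence threshold, and the `toy_machine` scorer are all hypothetical names invented for illustration. Routing on a confidence score is an assumption of this sketch, not something Colson describes.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class HybridRouter:
    """Routes each task to a machine scorer or to a human work queue."""
    # Hypothetical machine step: returns (answer, confidence in [0, 1]).
    machine: Callable[[str], Tuple[str, float]]
    # Assumed cut-off below which the task goes to a person.
    threshold: float = 0.8
    human_queue: List[str] = field(default_factory=list)

    def handle(self, task: str) -> Tuple[str, str]:
        answer, confidence = self.machine(task)
        if confidence >= self.threshold:
            return ("machine", answer)
        # Low confidence: defer to expert human judgment.
        self.human_queue.append(task)
        return ("human", "pending")


def toy_machine(task: str) -> Tuple[str, float]:
    # Stand-in scorer: confident only on tasks it has a lookup entry for.
    known = {"size": ("M", 0.95), "color": ("navy", 0.9)}
    return known.get(task, ("unknown", 0.1))


router = HybridRouter(machine=toy_machine)
results = [router.handle(t) for t in ["size", "style note", "color"]]
```

In this toy run, the two tasks the scorer recognizes are answered by the machine step, and the free-form "style note" task lands in `router.human_queue` for a person to pick up. Callers see one interface regardless of which resource did the work.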

Is 2016 the year you let robots manage your money?

The O’Reilly Data Show podcast: Vasant Dhar on the race to build “big data machines” in financial investing.

In this episode of the O’Reilly Data Show, I sat down with Vasant Dhar, a professor at the Stern School of Business and Center for Data Science at NYU, founder of SCT Capital Management, and editor-in-chief of the Big Data Journal (full disclosure: I’m a member of the editorial board). We talked about the early days of AI and data mining, and recent applications of data science to financial investing and other domains.

Dhar’s first steps in applying machine learning to finance

I joke with people, I say, ‘When I first started looking at finance, the only thing I knew was that prices go up and down.’ It was only when I actually went to Morgan Stanley and took time off from academia that I learned about finance and financial markets. … What I really did in that initial experiment is I took all the trades, I appended them with information about the state of the market at the time, and then I cranked it through a genetic algorithm and a tree induction algorithm. … When I took it to the meeting, it generated a lot of really interesting discussion. … Of course, it took several months before we actually finally found the reasons for why I was observing what I was observing.
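The experiment Dhar describes has two steps: append the state of the market to each trade, then run the result through an induction algorithm. The Python sketch below illustrates that shape with made-up numbers. It is not Dhar's code or data. The volatility feature, the trade records, and the `best_split` helper are assumptions for illustration. It shows only the first split a tree induction algorithm would choose and leaves out the genetic algorithm entirely.

```python
from typing import Dict, List, Tuple

# Toy, made-up data: (day, profit) per trade and a volatility reading per day.
trades: List[Tuple[int, float]] = [
    (1, 1.2), (2, -0.5), (3, 0.8), (4, -1.1), (5, -0.3), (6, 0.9),
]
market_state: Dict[int, float] = {
    1: 0.10, 2: 0.35, 3: 0.12, 4: 0.40, 5: 0.30, 6: 0.15,
}

# Step 1: append the market state at trade time to each trade,
# and label the trade by whether it made money.
rows = [(market_state[day], profit > 0) for day, profit in trades]


def gini(labels: List[bool]) -> float:
    """Gini impurity of a list of boolean labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(rows: List[Tuple[float, bool]]) -> Tuple[float, float]:
    """Return (threshold, weighted impurity) of the best single split."""
    values = sorted({v for v, _ in rows})
    best = (values[0], float("inf"))
    # Candidate thresholds are midpoints between adjacent feature values.
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [y for v, y in rows if v <= threshold]
        right = [y for v, y in rows if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
        if score < best[1]:
            best = (threshold, score)
    return best


# Step 2: find the market condition that best separates winners from losers.
threshold, impurity = best_split(rows)
```

On this toy data the chosen threshold falls between the volatility readings 0.15 and 0.30 and separates profitable from unprofitable trades cleanly. A full tree induction algorithm would apply the same search recursively to each side of the split.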

Investing in big data technologies

The O’Reilly Data Show podcast: A fireside chat with Ben Horowitz, plus Reynold Xin on the rise of Apache Spark in China.

In this special holiday episode of the O’Reilly Data Show, I look back at two conversations I had earlier this year at the Spark Summit in San Francisco. The first segment is an on-stage fireside chat with Ben Horowitz, co-founder of Andreessen Horowitz and author of The Hard Thing About Hard Things.

In the second segment, Reynold Xin, one of the architects of Apache Spark, explains the rise of Apache Spark in China.

Related resources:

- State of Spark, and where it is going in 2016: a Strata + Hadoop World San Jose presentation by Apache Spark architects Reynold Xin and Patrick Wendell.

- Dates for Spark Summit 2016 conferences are now available.

- Learning Spark

- Real-time data applications

Building a scalable platform for streaming updates and analytics

The O’Reilly Data Show podcast: Evan Chan on the early days of Spark+Cassandra, FiloDB, and cloud computing.

In this episode of the O’Reilly Data Show, I sit down with Evan Chan, distinguished engineer at Tuplejump. We talk about the early days of Spark (particularly his contributions to Spark/Cassandra integration), his interesting new open source project (FiloDB), and recent trends in cloud computing.

Bringing Apache Spark & Apache Cassandra together

Datastax credits me with inspiring them to bring Spark into Cassandra … I think they’re very generous about that. I think I was one of the first folks to talk about the possibility of bringing Cassandra and Spark together. The vision that I saw was that Cassandra was really good for real-time updates, but what if we’re able to do more analytical queries on it? Then you could combine, basically, a platform that is really good for real-time updates with analytics.