In my old age, at least by the standards of the computing industry, I’m getting increasingly irritated by smart young things who preach today’s big thing, or tomorrow’s next big thing, as the best and only solution to my computing problems.

Those who fail to learn from history are doomed to repeat it, and the smart young things need to pay more attention, because the trends underlying today’s computing should be evident to anyone with a sufficiently good grasp of computing history.

Depending on the state of technology, the computer industry oscillates between thin- and thick-client architectures. Either the bulk of our compute power and storage is hidden away in racks of (sometimes distant) servers, or it is spread out across a mass of distributed systems closer to home. This year’s reinvention of the mainframe is called cloud computing. While I’m a big supporter of cloud architectures, at least at the moment, I’ll be interested to see those preaching it as the final solution to all our problems proved wrong, yet again, when computing power once more catches up with demand and you can fit today’s data center inside a box not much bigger than a cell phone.

Think that just couldn’t happen? You should think again, because it already has. The iPad 2 beats most supercomputers from the early ’90s in raw compute power, and it would have made the worldwide TOP500 list of supercomputers well into 1994. There’s no reason to suspect that, at least for now, this trend won’t continue.

Yesterday’s next big thing

Yesterday’s “next big thing” was the World Wide Web. I still vividly remember standing in a draughty computing lab, almost 20 years ago now, looking over the shoulder of someone who had just downloaded the first public build of NCSA Mosaic via some torturous method. I shook my head and said, “It’ll never catch on. Why would you want images?” That shows what I know. Although, to be fair, I was a lot younger back then. I was failing to grasp history because I was neither well read enough nor old enough to have seen it all before. And since I still don’t claim to be either well read or old enough this time around, perhaps you should take everything I’m saying with a pinch of salt. That’s the thing with the next big thing: it’s always open to interpretation.

The next big thing?

The machines we grew up with are yesterday’s news. They’re quickly being replaced by consumption devices, with most of the rest of day-to-day computing moving into the environment and becoming embedded in people’s lives. This will almost certainly happen without people noticing.

While it’s pretty obvious that mobile is the current “next” big thing, it’s arguable whether mobile itself has already peaked. The sleek lines of the iPhone in your pocket are already almost as dated as the beige tower that used to sit next to the CRT on your desk.

Technology has not quite caught up to the overall vision, and neither have we: we’ve been trying to reinvent the desktop computer in a smaller form factor. That’s why the mobile platforms we see today are just stepping stones.

Most people just want gadgets that work, and that do the things they want them to do. People never really wanted computers. They wanted what computers could do for them. The general-purpose machines we think of today as “computers” will naturally dissipate out into the environment as our technology gets better.

The next, next big thing

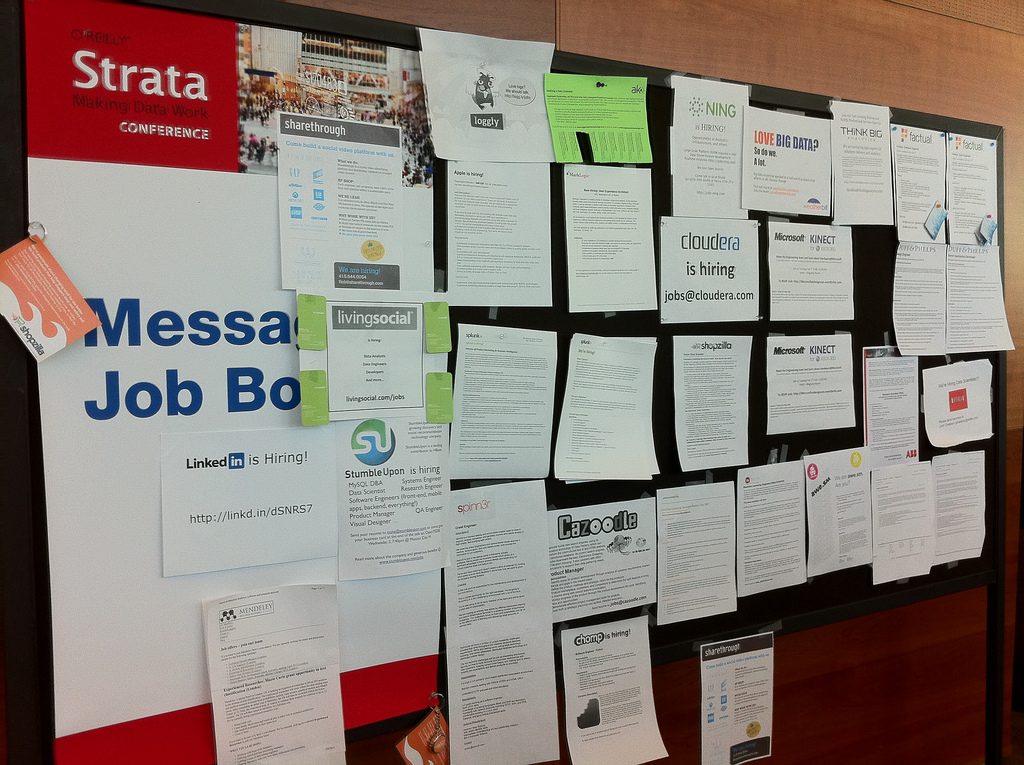

To those preaching cloud computing and web applications as the next big thing: they’ve already had their day and the web as we know it is a dead man walking. Looking at the job board at O’Reilly’s Strata conference earlier in the year, the next big thing is obvious. It’s data. Heck, it’s not even the next big thing anymore. It’s pulling into the station, and to data scientists, the web and its architecture is just a commodity. Bought and sold in bulk.

The overflowing job board at February’s Strata conference.

As for the next, next big thing? Ubiquitous computing is the thing after the next big thing, and almost inevitably the thirst for more data will drive it. But then eventually, inevitably, the data will become secondary: a commodity. Yesterday the hot hire was a developer; today, with the arrival of Big Data, it’s a mathematician. Tomorrow it could well be a hardware hacker.

Count on it. History goes in cycles and only the names change.
