Which Language Should You Learn First?

If everybody should learn to code, what's the right tool for learning?

What’s the best programming language for a beginner to start with?

It seems like a simple question, and one that lots of aspiring developers ask themselves, but it’s actually somewhat loaded. Are you asking because you want to get a job as a developer? Because you want to get in on the mobile app craze? Because you’ve been tasked with improving your company’s web offerings, even though you’re not a developer? Or just out of personal interest, for the fun of it?

How I failed

Six lessons learned.

This post originally appeared on LinkedIn.

When you start out as an entrepreneur, it’s just you and your idea, or you and your co-founders and your idea. Then you add customers, and they shape and mold you and that idea until you achieve the fabled “product-market fit.” If you are lucky and diligent, you achieve that fit more than once, reinventing yourself with multiple products and multiple customer segments.

But if you are to succeed in building an enduring company, it has to be about far more than that: it has to be about the team and the institution you create together. As a management team, you aren’t just working for the company; you have to work on the company, shaping it, tuning it, setting the rules that it will live by. And it’s way too easy to give that latter work short shrift.

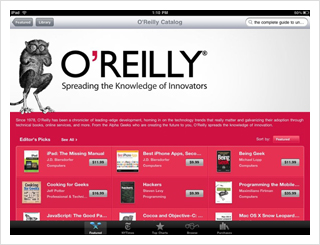

At O’Reilly Media, we’ve built a successful business and have had a big impact on our industry, but looking back at our history, it’s also clear to me how often we’ve failed, and what kept me, my employees, and our company from achieving our full potential. Some of these were failures of vision, some of them were failures of nerve, but most of them were failures in building and cultivating the company culture. Read more…

Automation Myths

The NSA Can't Replace 90% of Its System Administrators

In the aftermath of Edward Snowden’s revelations about the NSA’s domestic surveillance activities, the NSA recently announced that it plans to get rid of 90% of its system administrators via software automation in order to “improve security.” So far, I’ve mostly seen this piece of news reported and commented on straightforwardly. But it simply doesn’t add up. Either the NSA has such a monumental (yet not necessarily surprising) level of bureaucratic bloat that it could feasibly cut that many staff regardless of automation, or it is simply going to be less effective once it has reduced its staff. I talked with a few people who are intimately familiar with the kind of software typically used to automate traditional sysadmin tasks (Puppet and Chef). Typically, these products are used to let an existing group of operations people do much more, not to do the same amount of work with significantly fewer people. The magical thinking that the NSA can put in place automation sufficient to do away with 90% of its system administration staff betrays some fundamental misunderstandings about automation. I’ll tackle the two biggest ones here.

1. Automation replaces people. Automation is about gaining leverage: it’s about handing computers the tasks they can handle in order to free up human brainpower. As James Turnbull, former VP of Business Development for PuppetLabs, said to me, “You still need smart people to think about and solve hard problems.” (Whether you agree with the types of problems the NSA is trying to solve is a completely different thing, of course.) In reality, the NSA should have been working on automation regardless of the Snowden affair. It has a massive, complex infrastructure. Deploying a new data center, for example, is a huge undertaking; it’s not something you can automate.

Or as Seth Vargo, who works for OpsCode–the creators of configuration management automation software Chef–puts it, “There’s still decisions to be made. And the machines are going to fail.” Sascha Bates (also with OpsCode) chimed in to point out that “This presumes that system administrators only manage servers.” It’s a naive view. Are the DBAs going away, too? Network administrators? As I mentioned earlier, the NSA has a massive, complicated infrastructure that will always require people to manage it. That plus all the stuff that isn’t (theoretically) being automated will now fall on the remaining 10% who don’t get laid off. And that remaining 10% will still have access to the same information.

2. Automation increases security. Automation increases consistency, which can contribute to security. Prior to automating something, you might have a wide variety of people doing the same thing in varying ways, and hence with varying outcomes. From a security standpoint, automation provides infrastructure security and makes it auditable. But it doesn’t really increase data/information security (e.g., this file can/cannot live on that server); those decisions are human tasks requiring human judgment. And that’s just the kind of information Snowden got his hands on. This is another example of a government agency overreacting to a low-probability event after the fact. Getting rid of 90% of their sysadmins is the IT equivalent of still requiring airline passengers to take off their shoes and cram their tiny shampoo bottles into plastic baggies; it’s security theater.

There are a few upsides, depending on your perspective on this whole situation. First, if your company is in the market for system administrators, you might want to train your recruiters on D.C. in the near future. Additionally, odds are the NSA is going to be less effective than it is right now. Perhaps, like the CIA, they are also courting Amazon Web Services (AWS) to help run their own private cloud, but again, as Sascha said, managing servers is only a small piece of the system administrator picture.

If you care about or are interested in automation, operations, and security, please join us at Velocity New York on October 14-16. Dr. Nancy Leveson will be delivering a fantastic keynote on security and complex systems.

What is probabilistic programming?

Probabilistic languages can free developers from the complexities of high-performance probabilistic inference.

Probabilistic programming languages are in the spotlight, thanks to the announcement of a new DARPA program to support fundamental research on them. But what is probabilistic programming? What can we expect from this research? Will this effort pay off? How long will it take?

A probabilistic programming language is a high-level language that makes it easy for a developer to define probability models and then “solve” these models automatically. These languages incorporate random events as primitives, and their runtime environment handles inference. Defining a model becomes a matter of programming, with a clean separation between modeling and inference. This can vastly reduce the time and effort associated with implementing new models and understanding data. Just as high-level programming languages transformed developer productivity by abstracting away the details of the processor and memory architecture, probabilistic languages promise to free the developer from the complexities of high-performance probabilistic inference. Read more…
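To make the separation between modeling and inference concrete, here is a minimal sketch in plain Python. It is not a real probabilistic language or any particular research system: the names flip, model, and infer are hypothetical, the coin example is invented for illustration, and the inference routine is simple rejection sampling standing in for the far more sophisticated engines such languages aim to provide.

```python
import random


def flip(p, rng):
    """Random primitive: return True with probability p."""
    return rng.random() < p


def model(rng):
    """Generative model: choose a coin at random, then flip it three times."""
    coin_is_biased = flip(0.5, rng)              # prior: biased or fair, 50/50
    p_heads = 0.9 if coin_is_biased else 0.5
    flips = [flip(p_heads, rng) for _ in range(3)]
    return coin_is_biased, flips


def infer(model, condition, query, n=20000, seed=0):
    """Generic rejection sampling: estimate P(query | condition).

    Knows nothing about coins; it only runs the model, keeps the runs
    consistent with the observation, and averages the query over them.
    """
    rng = random.Random(seed)
    accepted = 0
    hits = 0
    for _ in range(n):
        trace = model(rng)
        if condition(trace):
            accepted += 1
            hits += bool(query(trace))
    return hits / accepted if accepted else float("nan")


# Observation: all three flips came up heads. Question: is the coin biased?
posterior = infer(
    model,
    condition=lambda trace: all(trace[1]),
    query=lambda trace: trace[0],
)

if __name__ == "__main__":
    print(f"Estimated P(biased | three heads) = {posterior:.3f}")
```

By Bayes’ rule the exact answer for this toy model is 0.729 / 0.854, roughly 0.85, and the estimate should land close to it. The point of the sketch is that model contains only the description of the random process, while infer could be swapped for a better algorithm without touching the model.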

Python data tools just keep getting better

A variety of tools are making data science tasks easy to do in Python

Here are a few observations inspired by conversations I had during the just-concluded PyData conference.

The Python data community is well-organized:

Besides conferences (PyData, SciPy, EuroSciPy), there is a new non-profit (NumFOCUS) dedicated to supporting scientific computing and data analytics projects. The supported projects are currently all Python-based, but in principle NumFOCUS is an entity that can be used to support related efforts from other communities.

It’s getting easier to use the Python data stack:

There are tools that facilitate the dissemination and sharing of code and programming environments. IPython notebooks allow Python code and markup in the same document. Notebooks are used to record and share complex workflows and are used heavily for (conference) tutorials. As the data stack grows, one of the major pain points is getting all the packages to work properly together (version compatibility is a common issue). In particular, setting up environments where all the pieces work together can be a pain. There are now a few solutions that address this issue: Anaconda and cloud-based Wakari from Continuum Analytics, and cloud computing platform PiCloud.
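When tracking down a version-compatibility problem, a useful first step is simply recording which versions are installed. The sketch below is one way to do that, assuming a recent Python with the standard library module importlib.metadata (which the post itself does not mention); the helper name installed_versions and the package list are illustrative choices, not part of any of the tools named above.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Illustrative list; substitute the packages your own project depends on.
    stack = ["numpy", "scipy", "pandas", "matplotlib", "ipython"]
    for name, version in installed_versions(stack).items():
        print(f"{name:12s} {version or 'not installed'}")
```

Saving this report alongside a notebook gives collaborators a record of the environment the code was last known to work in.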

There are many more visualization tools to choose from:

The 2D plotting tool matplotlib is the first tool enthusiasts turn to, but as I learned at the conference, there are a number of other options available. Continuum Analytics recently introduced companion packages Bokeh and Bokeh.js that simplify the creation of static and interactive visualizations using Python. In particular, Bokeh is a Python counterpart to R’s ggplot (it even has an interface that mimics ggplot). With Nodebox, programmers use Python code to create sketches and interactive visualizations that are similar to those produced by Processing. Read more…

The future of programming

Unraveling what programming will need for the next 10 years.

Programming is changing. The PC era is coming to an end, and software developers now work with an explosion of devices, job functions, and problems that need different approaches from those of the single-machine era. In our age of exploding data, the ability to do some kind of programming is increasingly important to every job, and programming is no longer the sole preserve of an engineering priesthood.

Over the course of the next few months, I’m looking to chart the ways in which programming is evolving, and the factors that are affecting it. This article captures a few of those forces, and I welcome comment and collaboration on how you think things are changing. Where am I headed with this line of inquiry? The goal is to be able to describe the essential skills that programmers need for the coming decade, the places they should focus their learning, and how to differentiate between short-term trends and long-term shifts. Read more…