- Eve, Version 0 (Chris Grainger) — Version 0 contains a database, compiler, query runtime, data editor, and query editor. Basically, it’s a database with an IDE. You can add data either manually or by importing a CSV, and then you can create queries over that data using our visual query editor.

- BOOM: Berkeley Orders Of Magnitude — an effort to explore implementing Cloud software using disorderly, data-centric languages.

- Eigenstyle — clever analysis and reconstruction of images through principal component analysis. And here are “prettiest ugly dresses,” those that I classified as dislikes, that the program predicted I would really like.

- Turing Digital Archive — many of Turing’s letters, talks, photographs, and unpublished papers, as well as memoirs and obituaries written about him. It contains images of the original documents that are held in the Turing collection at King’s College, Cambridge. (Timely as Jason Scott works to save a manual archive: [1], [2], [3])
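Eigenstyle's own code isn't reproduced here. As a minimal sketch of the underlying idea (project images onto a few principal components, then reconstruct them from those coordinates), assuming each image has already been flattened into an equal-length row of pixel values, PCA can be computed from NumPy's SVD. The random array is a hypothetical stand-in for real dress images.

```python
import numpy as np

def fit_pca(images: np.ndarray, n_components: int):
    """Fit PCA to flattened images (one image per row)."""
    mean = images.mean(axis=0)
    centered = images - mean
    # Rows of vt are the principal axes ("eigen-images"), ordered by variance explained.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]

def project(images, mean, components):
    """Coordinates of each image in the reduced component space."""
    return (images - mean) @ components.T

def reconstruct(coords, mean, components):
    """Approximate the original images from their component coordinates."""
    return coords @ components + mean

# Hypothetical data: 20 random 8x8 "images", flattened to 64 values each.
rng = np.random.default_rng(0)
images = rng.random((20, 64))
mean, comps = fit_pca(images, n_components=5)
coords = project(images, mean, comps)
approx = reconstruct(coords, mean, comps)
print(coords.shape, approx.shape)  # (20, 5) (20, 64)
```

Keeping only a handful of components gives a compact description of each image; a classifier (such as one predicting likes and dislikes) could then be trained on `coords` instead of raw pixels.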

"machine learning" entries

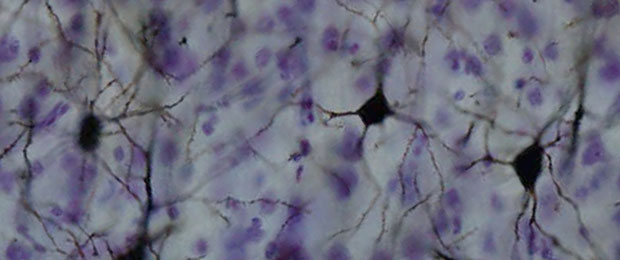

Data-driven neuroscience

The O'Reilly Radar Podcast: Bradley Voytek on data's role in neuroscience, the brain scanner, and zombie brains in STEM.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s Radar Podcast, O’Reilly’s Mac Slocum chats with Bradley Voytek, an assistant professor of cognitive science and neuroscience at UC San Diego. Voytek talks about using data-driven approaches in his neuroscience work, the brain scanner project, and applying cognitive neuroscience to the zombie brain.

Here are a few snippets from their chat:

In the neurosciences, we’ve got something like three million peer reviewed publications to go through. When I was working on my Ph.D., I was very interested, in particular, in two brain regions. I wanted to know how these two brain regions connect, what are the inputs to them and where do they output to. In my naivety as a Ph.D. student, I had assumed there would be some sort of nice 3D visualization, where I could click on a brain region and see all of its inputs and outputs. Such a thing did not exist — still doesn’t, really. So instead, I ended up spending three or four months of my Ph.D. combing through papers written in the 1970s … and I kept thinking to myself, this is ridiculous, and this just stewed in the back of my mind for a really long time.

Sitting at home [with my wife], I said, I think I’ve figured out how to address this problem I’m working on, which is basically very simple text mining. Let’s just scrape the text of these three million papers, or at least the titles and abstracts, and see what words co-occur frequently together. It was very rudimentary text mining, with the idea that if words co-occur frequently … this might give us an index of how related things are, and she challenged me to a code-off.
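The co-occurrence idea Voytek describes can be sketched in a few lines. This is not his code: it assumes a hypothetical list of titles and a hand-picked vocabulary of brain-related terms, and simply counts how many titles mention each pair of terms.

```python
import re
from collections import Counter
from itertools import combinations

def cooccurrence_counts(texts, vocabulary):
    """Count, for each pair of vocabulary terms, how many texts mention both."""
    vocab = {term.lower() for term in vocabulary}
    pair_counts = Counter()
    for text in texts:
        # Crude tokenization: lowercase alphabetic runs only.
        words = set(re.findall(r"[a-z]+", text.lower()))
        present = sorted(vocab & words)  # sorted so each pair has one canonical order
        for pair in combinations(present, 2):
            pair_counts[pair] += 1
    return pair_counts

# Hypothetical titles standing in for the millions of real abstracts.
titles = [
    "Hippocampus and prefrontal cortex interactions during memory",
    "Prefrontal cortex lesions impair working memory",
    "Hippocampus lesions impair spatial memory",
    "Striatum and reward learning",
]
vocab = ["hippocampus", "prefrontal", "memory", "striatum", "reward"]
counts = cooccurrence_counts(titles, vocab)
print(counts[("hippocampus", "memory")])   # 2
print(counts[("prefrontal", "striatum")])  # 0
```

Raw counts favor terms that appear everywhere, so a real analysis would likely normalize each pair count by how often the individual terms occur.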

Unsupervised learning, attention, and other mysteries

How to almost necessarily succeed: An interview with Google research scientist Ilya Sutskever.

Get notified when our free report “Future of Machine Intelligence: Perspectives from Leading Practitioners” is available for download. The following interview is one of many that will be included in the report.

Ilya Sutskever is a research scientist at Google and the author of numerous publications on neural networks and related topics. Sutskever is a co-founder of DNNresearch and was named Canada’s first Google Fellow.

Key Takeaways:

- Humans solve perception problems very quickly even though our neurons are relatively slow; moderately deep and large neural networks have enabled machines to succeed in a similar fashion.

- Unsupervised learning is still a mystery, but a full understanding of that domain has the potential to fundamentally transform the field of machine learning.

- Attention models represent a promising direction for powerful learning algorithms that require ever less data to be successful on harder problems.

David Beyer: Let’s start with your background. What was the evolution of your interest in machine learning, and how did you zero in on your Ph.D. work?

Ilya Sutskever: I started my Ph.D. just before deep learning became a thing. I was working on a number of different projects, mostly centered around neural networks. My understanding of the field crystallized when collaborating with James Martens on the Hessian-free optimizer. At the time, greedy layer-wise training (training one layer at a time) was extremely popular. Working on the Hessian-free optimizer helped me understand that if you just train a very large and deep neural network on a lot of data, you will almost necessarily succeed.

A “bottom-up” approach to data unification

How machine learning plus expert sourcing can unify customer data at scale.

Watch the free webcast Integrating Customer Data at Scale to learn how Toyota Motor Europe was able to unify its customer data at scale.

Enterprises that gain a unified view of their customer data can improve their business and open up new opportunities with their users. Capturing customer data, however, can be difficult, as most systems rely on traditional “top-down” approaches to standardizing data. In a recent O’Reilly webcast, Integrating Customer Data at Scale, Tamr field engineer Alan Wagner hosts a Q&A session with Matt Stevens, the general manager at Toyota Motor Europe, to demonstrate how a leading enterprise uses a third-generation system like Tamr to simplify the process of unifying customer data.

In the webcast, Stevens explains how Toyota Motor Europe has gained a 360-degree view of its customers through the Tamr Data Unification Platform, which takes a machine learning and expert-sourcing “human guided workflow” approach to data unification. Wagner provides a demo of the Tamr platform, applied within a Salesforce application, to demonstrate the ability to capture and unify customer data.
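The webcast summary doesn't describe how Tamr's matching works, so the following is only a hypothetical sketch of a "human guided" matching loop in general. It assumes customer records are dicts with `name`, `email`, and `city` fields, uses string similarity from Python's `difflib` as a stand-in for a learned model, and picks arbitrary thresholds: confident pairs merge automatically, while the uncertain middle band is queued for expert review.

```python
from difflib import SequenceMatcher
from itertools import combinations

def similarity(a: dict, b: dict, fields=("name", "email", "city")) -> float:
    """Average case-insensitive string similarity over the chosen fields (0.0 to 1.0)."""
    scores = [
        SequenceMatcher(None, a[f].lower(), b[f].lower()).ratio() for f in fields
    ]
    return sum(scores) / len(scores)

def triage_pairs(records, auto_match=0.9, needs_review=0.6):
    """Split record pairs into automatic matches and an expert review queue."""
    matches, review = [], []
    for (i, a), (j, b) in combinations(enumerate(records), 2):
        score = similarity(a, b)
        if score >= auto_match:
            matches.append((i, j, score))
        elif score >= needs_review:
            review.append((i, j, score))  # a human expert decides these
    return matches, review

# Hypothetical customer records from two source systems.
records = [
    {"name": "Anna Schmidt", "email": "anna.schmidt@example.com", "city": "Brussels"},
    {"name": "anna schmidt", "email": "Anna.Schmidt@example.com", "city": "brussels"},
    {"name": "A. Schmidt", "email": "a.schmidt@example.org", "city": "Brussels"},
    {"name": "Marco Rossi", "email": "marco.rossi@example.com", "city": "Milan"},
]
matches, review = triage_pairs(records)
print(matches)
print(review)
```

Comparing every pair is quadratic in the number of records; systems working at enterprise scale typically add a blocking step so that only plausible candidate pairs are scored.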

Four short links: 18 August 2015

Chris Grainger Ships, Disorderly Data-Centric Languages, PCA for Fun and Fashion, and Know Thy History

We make the software, you make the robots

An interview with Andreas Mueller, on scikit-learn and usable machine learning software.

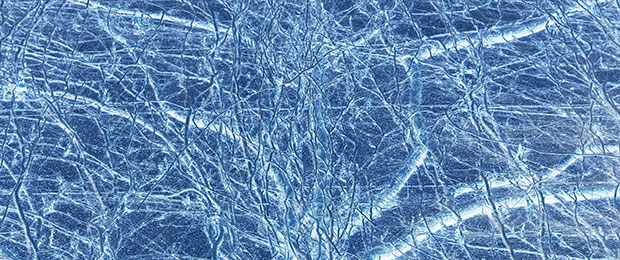

Superpixels example from Andreas Mueller’s thesis paper (PDF), used with permission.

Mueller wears many hats at work. He is one of the key maintainers of the popular Python machine learning library scikit-learn. Holding a doctorate in computer vision from the University of Bonn in Germany, he currently works on open science at New York University’s Center for Data Science. He speaks at conferences around the world and has a fanbase of 5,000+ followers on Twitter and about as many reputation points on Stack Overflow. In other words, this man has got mad street cred. He started out doing pure math in academia, and has now achieved software developer cult idol status.

Four short links: 4 August 2015

Data-Flow Graphing, Realtime Predictions, Robot Hotel, and Open-Source RE

- Data-flow Graphing in Python (Matt Keeter) — not shared because data-flow graphing is the sexy new hot topic that’s gonna set the world on fire (though, I bet that’d make Matt’s day), but because there are entire categories of engineering and operations migraines that are caused by not knowing where your data came from or goes to, when, how, and why. Remember Wirth’s “algorithms + data structures = programs”? Data flows seem like a different slice of “programs.” Perhaps “data flow + typos = programs”?

- Machine Learning for Sports and Real-time Predictions (Robohub) — podcast interview for your commute. Real time is gold.

- Japan’s Robot Hotel is Serious Business (Engadget) — hotel was architected to suit robots: “For the porter robots, we designed the hotel to include wide paths.” Two paths slope around the hotel lobby: one inches up to the second floor, while another follows a gentle decline to guide first-floor guests (slowly, but with their baggage) all the way to their room. Makes sense: at Solid, I spoke to a chap working on robots for existing hotels, and there’s an entire engineering challenge in navigating an elevator that you wouldn’t believe.

- bokken — an open source GUI to help with reverse engineering code.
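To make the "where did this data come from" point concrete, here is a minimal data-flow sketch. It is not Matt Keeter's code; it assumes a hypothetical graph in which each node is a plain function whose inputs are named upstream nodes, evaluates the graph in dependency order with the standard library's `graphlib` (Python 3.9+), and records each value's full lineage as it goes.

```python
from graphlib import TopologicalSorter

def run_dataflow(nodes, deps):
    """Evaluate a data-flow graph and record where each value came from.

    nodes: name -> function taking upstream values as positional arguments
    deps:  name -> list of upstream node names (order matches function arguments)
    """
    values, lineage = {}, {}
    # static_order() yields every node after all of its predecessors.
    for name in TopologicalSorter(deps).static_order():
        inputs = deps.get(name, [])
        values[name] = nodes[name](*(values[d] for d in inputs))
        # Lineage = direct inputs plus everything upstream of them.
        lineage[name] = set(inputs).union(*(lineage[d] for d in inputs))
    return values, lineage

# Hypothetical pipeline: clean some raw numbers, then compute their mean.
nodes = {
    "raw": lambda: [3, 1, 4, 1, 5],
    "cleaned": lambda xs: [x for x in xs if x > 1],
    "total": lambda xs: sum(xs),
    "count": lambda xs: len(xs),
    "mean": lambda t, n: t / n,
}
deps = {
    "cleaned": ["raw"],
    "total": ["cleaned"],
    "count": ["cleaned"],
    "mean": ["total", "count"],
}
values, lineage = run_dataflow(nodes, deps)
print(values["mean"])           # 4.0
print(sorted(lineage["mean"]))  # ['cleaned', 'count', 'raw', 'total']
```

Because the graph is explicit data rather than implicit call order, questions like "which inputs does `mean` depend on?" become a lookup instead of an archaeology project.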

Four short links: 31 July 2015

Robot Swarms, Google Datacenters, VR Ecosystem, and DeepDream Visualised

- Buzz: An Extensible Programming Language for Self-Organizing Heterogeneous Robot Swarms (arXiv) — Swarm-based primitives allow for the dynamic management of robot teams, and for sharing information globally across the swarm. Self-organization stems from the completely decentralized mechanisms upon which the Buzz run-time platform is based. The language can be extended to add new primitives (thus supporting heterogeneous robot swarms), and its run-time platform is designed to be laid on top of other frameworks, such as Robot Operating System.

- Jupiter Rising: A Decade of Clos Topologies and Centralized Control in Google’s Datacenter Network (PDF) — Our datacenter networks run at dozens of sites across the planet, scaling in capacity by 100x over 10 years to more than 1Pbps of bisection bandwidth. Wow, their Wi-Fi must be AMAZING!

- Nokia’s VR Ambitions Could Restore Its Tech Lustre (Bloomberg) — the VR ecosystem map is super-interesting.

- Visualising GoogleNet Classes — fascinating to see squirrel monkeys and basset hounds emerge from nothing. It’s so tempting to say, “this is what the machine sees in its mind when it thinks of basset hounds,” even though Boring Brain says, “that’s bollocks and you know it!”

Four short links: 30 July 2015

Catalogue Data, Git for Data, Computer-Generated Handwriting, and Consumer Robots

- A Sort of Joy — MoMA’s catalogue was released under a CC license, and has even been used to create new art. The performance is probably NSFW without headphones, but it’s hilarious. Which I never thought I’d say about a derivative work of a museum catalogue. (via Courtney Johnston)

- dat goes beta — the “git for data” goes beta. (via Nelson Minar)

- Computer Generated Handwriting — play with it here. (via Evil Mad Scientist Labs)

- Japanese Telcos vie for Consumer Robot-as-a-Service Business (Robohub) — NTT says Sota will be deployed in seniors’ homes as early as next March, and can be connected to medical devices to help monitor health conditions. This plays well with Japanese policy to develop and promote technological solutions to its aging population crisis.

Understanding neural function and virtual reality

The O'Reilly Data Show Podcast: Poppy Crum explains that what matters is efficiency in identifying and emphasizing relevant data.

Like many data scientists, I’m excited about advances in large-scale machine learning, particularly recent success stories in computer vision and speech recognition. But I’m also cognizant of the fact that press coverage tends to inflate what current systems can do, and their similarities to how the brain works.

During the latest episode of the O’Reilly Data Show Podcast, I had a chance to speak with Poppy Crum, a neuroscientist who gave a well-received keynote at Strata + Hadoop World in San Jose. She leads a research group at Dolby Labs and teaches a popular course at Stanford on Neuroplasticity in Musical Gaming. I wanted to get her take on AI and virtual reality systems, and hear about her experience building a team of researchers from diverse disciplines.

Understanding neural function

While it can sometimes be nice to mimic nature, in the case of the brain, machine learning researchers recognize that understanding and identifying the essential neural processes is much more critical. A related example cited by machine learning researchers is flight: wing flapping and feathers aren’t critical, but an understanding of physics and aerodynamics is essential.

Crum and other neuroscience researchers express the same sentiment. She points out that a more meaningful goal should be to “extract and integrate relevant neural processing strategies when applicable, but also identify where there may be opportunities to be more efficient.”

The goal in technology shouldn’t be to build algorithms that mimic neural function. Rather, it’s to understand neural function. … The brain is basically, in many cases, a Rube Goldberg machine. We’ve got this limited set of evolutionary building blocks that we are able to use to get to a sort of very complex end state. We need to be able to extract when that’s relevant and integrate relevant neural processing strategies when it’s applicable. We also want to be able to identify that there are opportunities to be more efficient and more relevant. I think of it as table manners. You have to know all the rules before you can break them. That’s the big difference between being really cool or being a complete heathen. The same thing kind of exists in this area. How we get to the end state, we may be able to compromise, but we absolutely need to be thinking about what matters in neural function for perception. From my world, where we can’t compromise is on the output. I really feel like we need a lot more work in this area.

Four short links: 27 July 2015

Google’s Borg, Georgia v. Malamud, SLAM-aware system, and SmartGPA

- Large-scale Cluster Management at Google with Borg — Google’s Borg system is a cluster manager that runs hundreds of thousands of jobs, from many thousands of different applications, across a number of clusters, each with up to tens of thousands of machines. […] We present a summary of the Borg system architecture and features, important design decisions, a quantitative analysis of some of its policy decisions, and a qualitative examination of lessons learned from a decade of operational experience with it.

- Georgia Sues Carl Malamud (Techdirt) — for copyright infringement… for publishing an official annotated copy of the state's laws. […] the state points directly to the annotated version as the official laws of the state.

- Monocular SLAM Supported Object Recognition (PDF) — a monocular SLAM-aware object recognition system that is able to achieve considerably stronger recognition performance, as compared to classical object recognition systems that function on a frame-by-frame basis. (via Improving Object Recognition for Robots)

- SmartGPA: How Smartphones Can Assess and Predict Academic Performance of College Students (PDF) — We show that there are a number of important behavioral factors automatically inferred from smartphones that significantly correlate with term and cumulative GPA, including time series analysis of activity, conversational interaction, mobility, class attendance, studying, and partying.