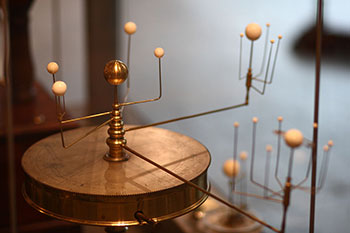

An orrery, a runnable model of the solar system that allows us to make predictions. Photo: Wikimedia Commons.

In my last post, we saw that AI means a lot of things to a lot of people. These dueling definitions each have a deep history — ok fine, baggage — that has massed and layered over time. While they’re all legitimate, they share a common weakness: each one can apply perfectly well to a system that is not particularly intelligent. As just one example, the chatbot that was recently touted as having passed the Turing test is certainly an interlocutor (of sorts), but it was widely criticized as not containing any significant intelligence.

Let’s ask a different question instead: What criteria must any system meet in order to achieve intelligence — whether an animal, a smart robot, a big-data cruncher, or something else entirely?

To answer this question, I want to explore a hypothesis that I’ve heard attributed to the cognitive scientist Josh Tenenbaum (who was a member of my thesis committee). He has not, to my knowledge, unpacked this deceptively simple idea in detail (though see his excellent and accessible paper How to Grow a Mind: Statistics, Structure, and Abstraction), and he would doubtless describe it quite differently from my attempt here. Any foolishness which follows is therefore most certainly my own, and I beg forgiveness in advance.

I’ll phrase it this way:

Intelligence, whether natural or synthetic, derives from a model of the world in which the system operates. Greater intelligence arises from richer, more powerful, “runnable” models that are capable of more accurate and contingent predictions about the environment.

What do I mean by a model? After all, people who work with data are always talking about the “predictive models” that are generated by today’s machine learning and data science techniques. While these models do technically meet my definition, it turns out that the methods in wide use capture very little of what is knowable and important about the world. We can do much better, though, and the key prediction of this hypothesis is that systems will gain intelligence proportionate to how well the models on which they rely incorporate additional aspects of the environment: physics, the behaviors of other intelligent agents, the rewards that are likely to follow from various actions, and so on. And the most successful systems will be those whose models are “runnable,” able to reason about and simulate the consequences of actions without actually taking them.
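To make “runnable” a bit more concrete, here is a minimal sketch of an agent that simulates the consequences of its actions before taking any of them. Everything in it is a made-up illustration rather than anyone’s actual method: the one-dimensional world, the reward values, and the names `model_step`, `predicted_reward`, `simulate`, and `best_plan` are all assumptions of mine.

```python
from itertools import product

# Hypothetical toy world: positions 0..6 on a line.
# A small snack sits at position 1 and a large meal at position 5.
ACTIONS = (-1, 0, 1)          # step left, stay put, step right
REWARDS = {1: 1.0, 5: 10.0}   # assumed payoffs, chosen for illustration


def model_step(pos, action):
    """The agent's internal model: predict the next position."""
    return min(max(pos + action, 0), 6)


def predicted_reward(pos):
    """The agent's internal model: predict the payoff of being at pos."""
    return REWARDS.get(pos, 0.0)


def simulate(pos, plan):
    """Run a sequence of actions inside the model, without acting."""
    total = 0.0
    for action in plan:
        pos = model_step(pos, action)
        total += predicted_reward(pos)
    return total


def best_plan(pos, horizon):
    """Imagine every plan of the given length and keep the best one."""
    return max(product(ACTIONS, repeat=horizon),
               key=lambda plan: simulate(pos, plan))


if __name__ == "__main__":
    print(best_plan(2, horizon=1))  # looks one step ahead
    print(best_plan(2, horizon=3))  # looks three steps ahead
```

Starting from position 2, the one-step planner heads for the nearby snack, while the three-step planner walks right toward the larger meal. The only difference between them is how far the model is run forward, which is the sense in which a more contingent prediction can buy better behavior.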

Let’s look at a few examples.

- Single-celled organisms use a behavior called chemotaxis to swim toward food and away from toxins; they do this by detecting the relevant chemical concentration gradients in their liquid environment. The organism is thus acting on a model of the world, one that, while devastatingly simple, usually serves it well.

- Mammalian brains have a region known as the hippocampus that contains cells that fire when the animal is in a particular place; the neighboring entorhinal cortex contains cells that fire at regular intervals on a hexagonal grid. While we don’t yet understand all of the details, these cells form part of a system that models the physical world, doubtless to aid in important tasks like finding food and avoiding danger, which is not so different from the single-celled organisms above.

- While humans also have a hippocampus, which probably performs some of these same functions, we also have overgrown neocortexes that model many other aspects of our world, including, crucially, our social environment: we need to be able to predict how others will act in response to various situations.
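The first of these examples is simple enough to sketch in a few lines. What follows is a deliberately crude, deterministic caricature of gradient following, not a faithful account of how real microbes sense their surroundings; the concentration formula, the step size, and the names `concentration`, `chemotaxis_step`, and `swim` are all assumptions made for illustration.

```python
def concentration(x, source=10.0):
    """Assumed nutrient profile: highest at the source, falling off with distance."""
    return 1.0 / (1.0 + (x - source) ** 2)


def chemotaxis_step(x, step=1.0):
    """Move one step in whichever direction smells better, or stay put."""
    here = concentration(x)
    left = concentration(x - step)
    right = concentration(x + step)
    if right > here and right >= left:
        return x + step
    if left > here:
        return x - step
    return x  # neither neighbor is better: a local peak


def swim(x, steps):
    """Repeat the local rule; the organism never plans ahead."""
    for _ in range(steps):
        x = chemotaxis_step(x)
    return x


if __name__ == "__main__":
    print(swim(0.0, steps=20))   # starts to the left of the food
    print(swim(15.0, steps=20))  # starts to the right of the food
```

The entire “world model” here is a comparison of nearby concentrations. That is enough to reach the food in this toy setting, and it also shows how little such a model can represent: nothing about obstacles, other agents, or anything more than one step away.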

The scientists who study these and many other examples have solidly established that naturally occurring intelligences rely on internal models. The question, then, is whether artificial intelligences must rely on the same principles. In other words, what exactly did we mean when we said that intelligence “derives from” internal models? Just how strong is the causal link between a system having a rich world model and its ability to possess and display intelligence? Is it an absolute dependency, meaning that a sophisticated model is a necessary condition for intelligence? Are good models merely very helpful in achieving intelligence, and therefore likely to be present in the intelligences that we build or grow? Or is a model-based approach but one path among many in achieving intelligence? I have my hunches — I lean toward the stronger formulations — but I think these need to be considered open questions at this point.

The next thing to note about this conception of intelligence is that, bucking a long-running trend in AI and related fields, it is not a behaviorist measure. Rather than evaluating a system based on its actions alone, we are deliberately piercing the veil in order to make claims about what is happening on the inside. This is at odds with the most famous machine intelligence assessment, the Turing test; it also contrasts with another commonly referenced measure of general intelligence, “an agent’s ability to achieve goals in a wide range of environments”.

Of course, the reason for a naturally evolving organism to spend significant resources on a nervous system that can build and maintain a sophisticated world model is to generate actions that promote reproductive success: big brains are energy hogs, and they need to pay rent. So, it’s not that behavior doesn’t matter, but rather that the strictly behavioral lens might be counterproductive if we want to learn how to build generally intelligent systems. A focus on the input-output characteristics of a system might suffice when its goals are relatively narrow, such as medical diagnoses, question answering, and image classification (though each of these domains could benefit from more sophisticated models). But this black-box approach is necessarily descriptive, rather than normative: it describes a desired endpoint, without suggesting how this result should be achieved. This devotion to surface traits leads us to adopt methods that do not scale to harder problems.

Finally, what does this notion of intelligence say about the current state of the art in machine intelligence as well as likely avenues for further progress? I’m planning to explore this more in future posts, but note for now that today’s most popular and successful machine learning and predictive analytics methods — deep neural networks, random forests, logistic regression, Bayesian classifiers — all produce models that are remarkably impoverished in their ability to represent real-world phenomena.

In response to these shortcomings, there are several active research programs attempting to bring richer models to bear, including but not limited to probabilistic programming and representation learning. By now, you won’t be surprised that I think such approaches represent our best hope at building intelligent systems that can truly be said to understand the world they live in.