"O’Reilly Radar Podcast" entries

Risto Miikkulainen on evolutionary computation and making robots think for themselves

The O'Reilly Radar Podcast: Evolutionary computation, its applications in deep learning, and how it's inspired by biology.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come: Stitcher, TuneIn, iTunes, SoundCloud, RSS

In this week’s episode, David Beyer, principal at Amplify Partners, co-founder of Chart.io, and part of the founding team at Patients Know Best, chats with Risto Miikkulainen, professor of computer science and neuroscience at the University of Texas at Austin. They discuss evolutionary computation, its applications in deep learning, and how it’s inspired by biology.

Finding optimal solutions

We talk about evolutionary computation as a way of solving problems, discovering solutions that are optimal or as good as possible. In complex domains, like simulated multi-legged robots walking in challenging conditions such as a slippery slope or a field with obstacles, there are probably many different solutions that will work. If you run the evolution multiple times, you will probably discover some different solutions. There are also many paths to constructing the same solution. You have a population, and you have solution components discovered here and there, so there are many different ways for evolution to run and discover roughly the same kind of walk, where you may be using three legs to move forward and one to push you up the slope if it’s a slippery slope.

You do (relatively) reliably discover the same solutions, but if you run it multiple times, you will also discover others. This is a new, or at least recent, direction in evolutionary computation. The standard formulation is that you run a single run of evolution and try to get the optimum in the end; everything in the population supports finding that optimum.
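The idea that repeated runs of evolution can land on different, equally good solutions can be seen even in a toy genetic algorithm. This is a minimal sketch for illustration only, not Miikkulainen's method or a neuroevolution system: the bit-string genome, the two-optimum fitness function, and parameters such as `pop_size` and `mutation_rate` are all assumptions chosen for brevity.

```python
import random

def fitness(genome):
    # Toy landscape with two equally good optima: all ones or all zeros.
    ones = sum(genome)
    return max(ones, len(genome) - ones)

def evolve(seed, length=20, pop_size=30, generations=60, mutation_rate=0.02):
    """Run one seeded GA and return its best genome (a list of 0/1 bits)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Elitism: the current best individual survives unchanged.
        next_gen = [max(population, key=fitness)[:]]
        while len(next_gen) < pop_size:
            # Tournament selection: best of 3 random individuals, twice.
            p1 = max(rng.sample(population, 3), key=fitness)
            p2 = max(rng.sample(population, 3), key=fitness)
            # One-point crossover followed by bit-flip mutation.
            cut = rng.randint(1, length - 1)
            child = p1[:cut] + p2[cut:]
            child = [1 - bit if rng.random() < mutation_rate else bit
                     for bit in child]
            next_gen.append(child)
        population = next_gen
    return max(population, key=fitness)

if __name__ == "__main__":
    # Independent runs (different seeds) may settle on different optima.
    for seed in range(5):
        best = evolve(seed)
        print(seed, fitness(best), "".join(map(str, best)))
```

Because each run starts from a different random population, some seeds tend to drift toward the all-ones solution and others toward all-zeros, a small-scale echo of the "run it multiple times, discover others" point above.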

Eric McNulty on real-time disaster response and leadership beyond control

The O'Reilly Radar Podcast: FEMA's Innovation Team and practicing leadership as if it's an Olympic sport.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

O’Reilly’s Jenn Webb chats with Eric McNulty, a consultant, writer, speaker, and catalyst for positive leadership. McNulty talks about real-time disaster response, the connections between disaster response and organizational leadership, and how today’s leaders can achieve order beyond control and influence beyond authority. McNulty will talk more about instituting effective leadership at the Cultivate leadership training at Strata + Hadoop World in San Jose in March.

Here are a few highlights:

Right after Hurricane Sandy, I was here in New York and New Jersey, and FEMA deployed their first-ever Innovation Team, which meant they were trying to innovate in the midst of disaster response. … They were coming together and building mesh networks in some cases. They were crowdsourcing evaluation of photographs. They would get the Civil Air Patrol to fly over the affected areas and photograph them. They’d upload that, and people anywhere in the country could look at it and give it a basic evaluation of severe, moderate, or mild damage. They were able to get situational awareness very quickly, in a way they never would have been able to otherwise. … They were able to innovate in real time in the field and see what worked and what didn’t; the then deputy administrator, who is now a colleague of mine at Harvard, said it fundamentally changed the way FEMA operates.

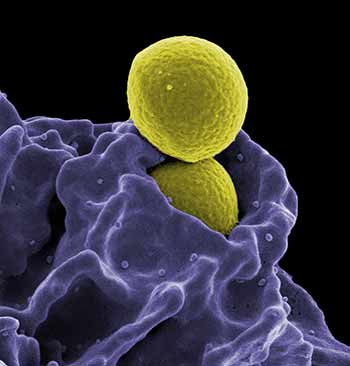

Mark Burgess on a CS narrative, orders of magnitude, and approaching biological scale

The O'Reilly Radar Podcast: "In Search of Certainty," Promise Theory, and scaling the computational net.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s Radar Podcast episode, Aneel Lakhani, director of marketing at SignalFx, chats with Mark Burgess, professor emeritus of network and system administration, founder and former CTO of CFEngine, and now an independent technologist and researcher. They talk about the new edition of Burgess’ book, In Search of Certainty; Promise Theory and how promises are a kind of service model; and ways of applying promise-oriented thinking to networks.

Here are a few highlights from their chat:

We tend to separate our narrative about computer science from the narrative of physics and biology and these other sciences. Many of the ideas (of course, all of the ideas) that computers are based on originate in these other sciences. I felt it was important to weave computer science into that historical narrative and write the kind of book that I loved to read when I was a teenager: a popular science book explaining ideas, popularizing some of those ideas, and weaving a story around them to hopefully create a wider understanding.

I think one of the things that struck me as I was writing [In Search of Certainty] is that it all goes back to scales. This is very much a physicist’s point of view. When you measure the world, when you observe the world, when you characterize it even, you need a sense of something to measure it by. … I started the book explaining how scales affect the way we describe systems in physics. By scale, I mean the order of magnitude. … The descriptions of systems are often qualitatively different at these different scales. … Part of my work over the years has been trying to find out how we could invent the measuring scale for semantics. This is how so-called Promise Theory came about. I think this notion of scale and how we apply it to systems is hugely important.

You’re always trying to find the balance between the forces of destruction and the forces of repair.

Katie Dill on heading up experience design at Airbnb

The O'Reilly Radar Podcast: A triforce company structure, the power of storyboards, and designing business strategy.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this special holiday episode of the Radar Podcast, we’re featuring a cross-over of the O’Reilly Design Podcast, which you can find on iTunes, Stitcher, TuneIn, or SoundCloud. O’Reilly’s Mary Treseler hosts the Design Podcast, and in this episode, she chats with Airbnb’s head of experience design, Katie Dill, about the values that drive design at Airbnb, the triforce structure of the company, and the process of journey mapping their users’ experience.

Here are a few snippets from their conversation:

That triforce of product management, engineering, and design, working together from point zero on the process of what problems we are trying to solve, and how we might solve that, and why we might solve it, and what the road map should be in getting there, is a process that is facilitated through design thinking. It’s a process that includes all those voices in a way that we think gets us to some solutions that are a little bit more creative than we otherwise would have gotten to, but also thoughtfully considered in terms of the technology and the business impact.

Patrick Wendell on Spark’s roadmap, Spark R API, and deep learning on the horizon

The O'Reilly Radar Podcast: A special holiday cross-over of the O'Reilly Data Show Podcast.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this special holiday episode of the Radar Podcast, we’re featuring a cross-over of the O’Reilly Data Show Podcast, which you can find on iTunes, Stitcher, TuneIn, or SoundCloud. O’Reilly’s Ben Lorica hosts that podcast, and in this episode, he chats with Apache Spark release manager and Databricks co-founder Patrick Wendell about the roadmap of Spark and where it’s headed, and interesting applications he’s seeing in the growing Spark ecosystem.

Here are some highlights from their chat:

We were really trying to solve research problems, so we were trying to work with the early users of Spark, getting feedback on what issues it had and what types of problems they were trying to solve with Spark, and then use that to influence the roadmap. It was definitely a more informal process, but from the very beginning, we were expressly user driven in the way we thought about building Spark, which is quite different than a lot of other open source projects. … From the beginning, we were focused on empowering other people and building platforms for other developers.

One of the early users was Conviva, a company that does analytics for real-time video distribution. They were a very early user of Spark, they continue to use it today, and a lot of their feedback was incorporated into our roadmap, especially around the types of APIs they wanted to have that would make data processing really simple for them, and of course, performance was a big issue for them very early on because in the business of optimizing real-time video streams, you want to be able to react really quickly when conditions change. … Early on, things like latency and performance were pretty important.

Leah Busque and Dan Teran on the future of work

The O'Reilly Radar Podcast: Service networking, employees vs contractors, and turning the world into a luxury hotel.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s Radar Podcast episode, O’Reilly’s Mac Slocum delves into the economy with two speakers from our recent Next:Economy conference. First, Slocum talks with Leah Busque, founder of TaskRabbit, about service networking, TaskRabbit’s goals, and issues facing the peer economy. In the second segment, Slocum talks with Dan Teran, co-founder of Managed by Q, about the on-demand economy and the future of work.

Here are a few highlights from Busque:

As a technologist myself, I became really passionate about how we mash up social and location technologies to connect real people, in the real world, to get real things done. I’d say in the last two years, it’s become real time, and that’s really where the idea of service networking was born.

It’s certainly our job to create a platform where demand is generated so that our tasker community, our suppliers, can find work, but I think even more than that, it is about building a platform and tools for our taskers to build out their own businesses.

Dave Zwieback on learning reviews and humans keeping pace with complex systems

The O'Reilly Radar Podcast: Learning from both failure and success to make our systems more resilient.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s Radar Podcast episode, I chat with Dave Zwieback, head of engineering at Next Big Sound and CTO of Lotus Outreach. Zwieback is the author of a new book, Beyond Blame: Learning from Failure and Success, that outlines an approach to make postmortems not only blameless, but to turn them into a productive learning process. We talk about his book, the framework for conducting a “learning review,” and how humans can keep pace with the growing complexity of the systems we’re building.

When you add scale to anything, it becomes sort of its own problem. Meaning, let’s say you have a single computer, right? The mean time to failure of the hard drive or the computer is actually fairly lengthy. When you have 10,000 of them or 10 million of them, you’re having tens if not hundreds of failures every single day. That certainly changes how you go about designing systems. Again, whenever I say systems, I also mean organizations. To me, they’re not really separate.
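The arithmetic behind Zwieback's scale point is linear: if each unit fails independently at a constant rate of 1/MTTF, a fleet's expected daily failures are units × 24 / MTTF. The sketch below assumes that simple exponential failure model, and the 1,000,000-hour MTTF is a hypothetical figure, not one from the episode. Under those assumptions, a single drive goes about 114 years between failures on average, while 10 million of them produce about 240 failures a day.

```python
HOURS_PER_DAY = 24

def expected_failures_per_day(units, mttf_hours):
    """Expected failures per day across a fleet of independent units,
    assuming each fails at a constant rate of 1 / mttf_hours."""
    if mttf_hours <= 0:
        raise ValueError("mttf_hours must be positive")
    return units * HOURS_PER_DAY / mttf_hours

if __name__ == "__main__":
    mttf = 1_000_000  # hypothetical per-drive MTTF in hours (~114 years)
    for fleet in (1, 10_000, 10_000_000):
        rate = expected_failures_per_day(fleet, mttf)
        print(f"{fleet:>12,} units: {rate:.4g} expected failures/day")
```

Real drive failure rates are not constant over a drive's life, so this is a back-of-the-envelope estimate; its value is showing that a rare per-unit event becomes a daily certainty at fleet scale.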

I spent a bunch of my time in fairly large-scale organizations, and I’ve witnessed and been part of a significant number of outages or issues. I’ve seen how dysfunctional organizations dealing with failure can be. By the way, when we mention failure, it’s important for us not to forget about success. All the things that we find in the default ways that people and organizations deal with failure, we find in the default ways that they deal with success. They’re just mirror images of each other.

We can learn from both failures and success. If we’re only learning from failures, which is what the current practice of postmortem is focused on, then we’re missing … the other 99% of the time when they’re not failing. The practice of learning reviews allows for learning from both failures and successes.

Jeff Jonas on context computing, irresistible surveillance, and hunting asteroids with Space Time Boxes

The O'Reilly Radar Podcast: Context-aware computing, privacy by design, and predicting asteroid collisions.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s Radar Podcast episode, I sit down with Jeff Jonas, an IBM fellow and chief scientist of context computing, Ironman triathlete, and contributing author to the new book, Privacy in the Modern Age: The Search for Solutions. Jonas talks about applications of context-aware computing, his new G2 software, and asteroid hunting with astronomers at the University of Hawaii.

Here are a few highlights from our conversation:

The definition of context I’m using is this: to better understand something by taking into account the things around it. Context computing is taking a new piece of data that arrives in the enterprise as a puzzle piece, finding other pieces of data that had been previously seen, and seeing how it fits. Instead of algorithms staring at individual puzzle pieces, you end up with whole chunks of the puzzle, and it’s much easier to make a high-quality prediction.

The purpose of G2 is to be able to take structured and unstructured data from batch or streaming sources. Think of it as new observations across a virtually unlimited number of data points. You could think of the Internet of Things feeding it, or transactional systems, or social data, or mobile data. It’s about weaving all those puzzle pieces together, then using the puzzle pieces as they land to figure out what’s important and what isn’t, and using the system to help focus people’s attention.
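The "puzzle piece" description can be loosely illustrated with incremental entity resolution: each new record is linked to earlier records that share an identifying value, and a later record can retroactively join two pieces previously thought unrelated. This is a minimal sketch, not how G2 works (its internals aren't described here); the `ContextAccumulator` class, the exact-match rule on `email` and `phone`, and the union-find structure are all hypothetical choices for illustration.

```python
from collections import defaultdict

class ContextAccumulator:
    """Hypothetical toy: link records that share an exact identifying value."""

    def __init__(self, keys=("email", "phone")):
        self.keys = keys
        self.parent = {}                # union-find parent pointers over record ids
        self.index = defaultdict(list)  # (key, value) -> ids of records seen with it

    def _find(self, x):
        # Follow parent pointers to the entity root, halving the path as we go.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def _union(self, a, b):
        ra, rb = self._find(a), self._find(b)
        if ra != rb:
            self.parent[rb] = ra

    def add(self, record_id, record):
        """Fit a new record against everything seen so far; return its entity root."""
        self.parent[record_id] = record_id
        for key in self.keys:
            value = record.get(key)
            if value is None:
                continue
            for other in self.index[(key, value)]:
                self._union(record_id, other)
            self.index[(key, value)].append(record_id)
        return self._find(record_id)

    def entities(self):
        """Current groupings of record ids, sorted by smallest id."""
        groups = defaultdict(set)
        for rid in self.parent:
            groups[self._find(rid)].add(rid)
        return sorted(groups.values(), key=min)

if __name__ == "__main__":
    acc = ContextAccumulator()
    acc.add("r1", {"email": "a@example.com"})
    acc.add("r2", {"phone": "555-0100"})
    print(acc.entities())  # two separate pieces so far
    acc.add("r3", {"email": "a@example.com", "phone": "555-0100"})
    print(acc.entities())  # r3 bridges r1 and r2 into one entity
```

The design point the sketch tries to capture is that the answer can change as data lands: before `r3` arrives there are two entities, and afterward there is one.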

Kristian Hammond on truly democratizing data and the value of AI in the enterprise

The O'Reilly Radar Podcast: Narrative Science's foray into proprietary business data and bridging the data gap.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s episode, O’Reilly’s Mac Slocum chats with Kristian Hammond, Narrative Science’s chief scientist. Hammond talks about Natural Language Generation, Narrative Science’s shift into the world of business data, and evolving beyond the dashboard.

Here are a few highlights:

We’re not telling people what the data are; we’re telling people what has happened in the world through a view of that data. I don’t care what the numbers are; I care about who my best salespeople are and where my logistical bottlenecks are. Quill can do that analysis and then tell you (not make you fight with it, but just tell you), and tell you in a way that is understandable and includes an explanation of why it believes this to be the case. Our focus is a little bit in media, but almost entirely in proprietary business data, and right now we focus in particular on financial services.

You can’t make good on that promise [of what big data was supposed to do] unless you communicate it in the right way. People don’t understand charts; they don’t understand graphs; they don’t understand lines on a page. They just don’t. We can’t be angry at them for being human. Instead we should actually have the machine do what it needs to do in order to fill that gap between what it knows and what people need to know.
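Hammond's idea of telling people what happened, rather than handing them a chart, can be illustrated very loosely with template-based data-to-text generation. This is not how Quill works (its internals aren't described here); `summarize_sales` and its `{salesperson: revenue}` input format are hypothetical, and real natural language generation systems do far more analysis before choosing what to say.

```python
def summarize_sales(sales):
    """Turn a hypothetical {salesperson: revenue} dict into a short English summary."""
    if not sales:
        return "No sales were recorded."
    # Rank salespeople by revenue, highest first.
    ranked = sorted(sales.items(), key=lambda kv: kv[1], reverse=True)
    total = sum(sales.values())
    top_name, top_amount = ranked[0]
    share = top_amount / total if total else 0.0
    text = (f"{top_name} was the top salesperson with ${top_amount:,.0f}, "
            f"{share:.0%} of the ${total:,.0f} total.")
    if len(ranked) > 1:
        # Explain the ranking by stating the gap to second place.
        runner_name, runner_amount = ranked[1]
        text += f" {runner_name} was second, ${top_amount - runner_amount:,.0f} behind."
    return text

if __name__ == "__main__":
    print(summarize_sales({"Ana": 50_000, "Ben": 30_000, "Cy": 20_000}))
```

For the sample input, the function prints "Ana was the top salesperson with $50,000, 50% of the $100,000 total. Ben was second, $20,000 behind." The machine does the comparison and states the conclusion, instead of leaving the reader to extract it from a graph.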

Mike Kuniavsky on the tectonic shift of the IoT

The O'Reilly Radar Podcast: The Internet of Things ecosystem, predictive machine learning superpowers, and deep-seated love for appliances and furniture.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s episode of the Radar Podcast, O’Reilly’s Mary Treseler chats with Mike Kuniavsky, a principal scientist in the Innovation Services Group at PARC. Kuniavsky talks about designing for the Internet of Things ecosystem and why the most interesting thing about the IoT isn’t the “things” but the sensors. He also talks about his deep-seated love for appliances and furniture, and how intelligence will affect those industries.

Here are some highlights from their conversation:

Wearables as a class is really weird. It describes where the thing is, not what it is. It’s like referring to kitchenables. ‘Oh, I’m making a kitchenable.’ What does that mean? What does it do for you?

There’s this slippery slope between service design and UX design. I think UX design is more digital and service design allows itself to include things like a poster that’s on a wall in a lobby, or a little card that gets mailed to people, or a human being that they can talk to. … Service design takes a slightly broader view, whereas UX design is — and I think usefully — still focused largely on the digital aspect of it.