"web operations" entries

Signals from the 2015 O’Reilly Velocity Conference in Santa Clara

Key insights from DevOps, Web operations, and performance.

People from across the Web operations and performance worlds are coming together this week for the 2015 O’Reilly Velocity Conference in Santa Clara. Below, we’ve assembled notable keynotes, interviews, and insights from the event.

Think like a villain

Laura Bell outlines a three-step approach to securing organizations — by putting yourself in the bad guy’s shoes (without committing actual crime, she stresses):

- Think like a villain and be objective: identify why and how someone would attack your company; what is the core value they’d come to steal?

- Create a safe place to create a little chaos: don’t do it live, but find a safe place without restriction and without fear to break things, to practice creative chaos.

- Play like you’ve never read the rule book: Not everyone plays by the same rules as you, so to protect yourself and your company, you have to think more like the person willing to break the rules.

Ghosts in the machines

The secret to successful infrastructure automation is people.

“The trouble with automation is that it often gives us what we don’t need at the cost of what we do.” —Nicholas Carr, The Glass Cage: Automation and Us

Virtualization and cloud hosting platforms have pervasively decoupled infrastructure from its underlying hardware over the past decade. This has led to a massive shift towards what many are calling dynamic infrastructure, wherein infrastructure and the tools and services used to manage it are treated as code, allowing operations teams to adopt software approaches that have dramatically changed how they operate. But with automation comes a great deal of fear, uncertainty and doubt.

Common (mis)perceptions of automation tend to pop up at the extreme ends: It will either liberate your people to never have to worry about mundane tasks and details, running intelligently in the background, or it will make SysAdmins irrelevant and eventually replace all IT jobs (and beyond). Of course, the truth is very much somewhere in between, and relies on a fundamental rethinking of the relationship between humans and automation.

Read more…

Introducing “A Field Guide to the Distributed Development Stack”

Tools to develop massively distributed applications.

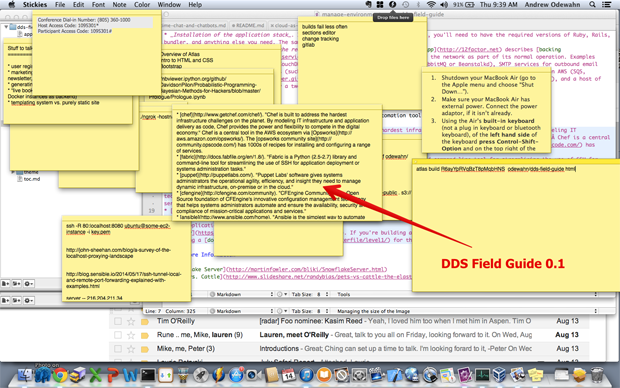

Editor’s Note: At the Velocity Conference in Barcelona we launched “A Field Guide to the Distributed Development Stack.” Early response has been encouraging, with reactions ranging from “If I only had this two years ago” to “I want to give a copy of this to everyone on my team.” Below, Andrew Odewahn explains how the Guide came to be and where it goes from here.

As we developed Atlas, O’Reilly’s next-generation publishing tool, it seemed like every day we were finding interesting new tools in the DevOps space, so I started a “Sticky” for the most interesting-looking tools to explore.

At first, this worked fine. I was content to simply keep a list, where my only ordering criterion was “Huh, that looks cool. Someday when I have time, I’ll take a look at that,” in the same way you might buy an exercise DVD and then only occasionally pull it out and think “Huh, someday I’ll get to that.” But, as anyone who has watched DevOps for any length of time can tell you, it’s a space bursting with interesting and exciting new tools, so my list and guilt quickly got out of hand.

Signals from Velocity Europe 2014

From design processes to postmortem complexity, here are key insights from Velocity Europe 2014.

Practitioners and experts from the web operations and performance worlds came together in Barcelona, Spain for Velocity Europe 2014. We’ve gathered highlights and insights from the event below.

Managing performance is like herding cats

Aaron Rudger, senior product marketing manager at Keynote Systems, says bridging the communication gap between IT and the marketing and business sectors is a bit like herding cats. Successful communication requires a narrative that discusses performance in the context of key business metrics, such as user engagement, abandonment, impression count, and revenue.

Beyond the stack

The tools in the Distributed Developer's Stack make development manageable in a highly distributed environment.

The shape of software development has changed radically in the last two decades. We’ve seen many changes: the Internet, the web, virtualization, and cloud computing. All of these changes point toward a fundamental new reality: all computing has become distributed computing. The age of standalone applications has disappeared, and applications that run on a single computer are almost inconceivable. Distributed is the default; and whether an application is running on Amazon Web Services (AWS), on a private cloud, or even on a desktop or a mobile phone, it depends on the behavior of other systems and services that aren’t under the developer’s control.

In the past few years, a new toolset has grown up to support the development of massively distributed applications. We call this new toolset the Distributed Developer’s Stack (DDS). It is orthogonal to the more traditional world of servers, frameworks, and operating systems; it isn’t a replacement for the aged LAMP stack, but a set of tools to make development manageable in a highly distributed environment.

The DDS is more of a meta-stack than a “stack” in the traditional sense. It’s not prescriptive; we don’t care whether you use AWS or OpenStack, whether you use Git or Mercurial. We do care that you develop for the cloud, and that you use a distributed version control system. The DDS is about the requirements for working effectively in the second decade of the 21st century. The specific tools have evolved, and will continue to evolve, and we expect you to evolve, too. Read more…

Everything is distributed

How do we manage systems that are too large to understand, too complex to control, and that fail in unpredictable ways?

“What is surprising is not that there are so many accidents. It is that there are so few. The thing that amazes you is not that your system goes down sometimes, it’s that it is up at all.”—Richard Cook

In September 2007, Jean Bookout, 76, was driving her Toyota Camry down an unfamiliar road in Oklahoma, with her friend Barbara Schwarz seated next to her on the passenger side. Suddenly, the Camry began to accelerate on its own. Bookout tried hitting the brakes and applying the emergency brake, but the car continued to accelerate. The car eventually collided with an embankment, injuring Bookout and killing Schwarz. In a subsequent legal case, lawyers for Toyota pointed to the most common of culprits in these types of accidents: human error. “Sometimes people make mistakes while driving their cars,” one of the lawyers claimed. Bookout was older, the road was unfamiliar, these tragic things happen. Read more…

What Is the Risk That Amazon Will Go Down (Again)?

Velocity 2013 Speaker Series

Why should we bother at all with notions such as risk and safety in web operations? Do web operations face risk? Do web operations manage risk? Do web operations produce risk? Last Christmas Eve, Amazon had an AWS outage affecting a variety of actors, including Netflix, which was a service included in many of the gifts shared on that very day. The event has introduced the notion of risk into the discourse of web operations, so this may be a good time for some reflective thoughts on the very nature of risk in this domain.

What is risk? The question is a classic one, and the answer is tightly coupled to how one views the nature of the incident occurring as a result of the risk.

One approach to assessing the risk of Amazon going down is probabilistic: start by laying out the entire space of potential scenarios leading to Amazon going down, calculate the probability of each, and multiply each scenario’s probability by its estimated severity (likely in terms of the costs connected to the specific scenario depending on the time of the event). The scenarios can then be plotted in a risk matrix showing their weighted ranking (to prioritize future risk mitigation measures) or added up into a collective sum of the risks (to judge whether the risk of Amazon going down is below a certain acceptance criterion).
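To make the arithmetic of this probabilistic approach concrete, here is a minimal Python sketch. The scenario names, probabilities, costs, and acceptance criterion are all invented for illustration; none of them come from Amazon or from any real risk assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    probability: float  # hypothetical chance of the scenario occurring in a year
    severity: float     # hypothetical cost if it does occur


def weighted_risk(scenario):
    """Risk of one scenario: its probability multiplied by its severity."""
    return scenario.probability * scenario.severity


def rank_scenarios(scenarios):
    """Order scenarios from highest to lowest weighted risk (the risk-matrix ranking)."""
    return sorted(scenarios, key=weighted_risk, reverse=True)


def total_risk(scenarios):
    """Collective sum of the weighted risks of all scenarios."""
    return sum(weighted_risk(s) for s in scenarios)


def is_acceptable(scenarios, acceptance_criterion):
    """True when the collective risk is below the acceptance criterion."""
    return total_risk(scenarios) < acceptance_criterion


# Illustrative values only.
SCENARIOS = [
    Scenario("power failure", 0.25, 400_000.0),
    Scenario("misconfigured load balancer", 0.5, 100_000.0),
    Scenario("data center fire", 0.125, 2_000_000.0),
]

if __name__ == "__main__":
    for s in rank_scenarios(SCENARIOS):
        print(s.name, weighted_risk(s))
    print("total:", total_risk(SCENARIOS))
```

Note that the rare but expensive scenario tops the ranking here, which is the kind of prioritization a risk matrix is meant to surface. The sketch only covers the scenarios someone thought to list, a limitation that matters for the rest of the argument.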

This first way of answering the question of what the risk is for Amazon to go down is intimately linked with a perception of risk as energy to be kept contained (Haddon, 1980). This view originates from the more recent growth of process industries, in which clearly graspable energies (fuel rods at nuclear plants, the fossil fuels at refineries, the kinetic energy of an aircraft) are to be kept contained and safely separated from a vulnerable target such as human beings. The next question of importance here becomes how to avoid an uncontrolled release of the contained energy. There are basically two strategies for mitigating the risk of an uncontrolled release of energy: barriers and redundancy (and the two combined: redundancy of barriers). Physically graspable energies can be contained through the use of multiple barriers (called “defenses in depth”) and potentially several barriers of the same kind (redundancy), for instance several emergency-cooling systems for a nuclear plant.
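The appeal of redundant barriers under this model can be shown with a small calculation. The sketch below assumes, purely for illustration, that barriers fail independently of one another; the failure probabilities are hypothetical, and the independence assumption is a simplification that real systems may not satisfy.

```python
import math


def breach_probability(barrier_failure_probabilities):
    """Chance that every barrier fails at once, ASSUMING independent failures.

    With no barriers at all, the result is 1: nothing contains the energy.
    """
    return math.prod(barrier_failure_probabilities)


if __name__ == "__main__":
    # One hypothetical emergency-cooling system that fails 1 time in 4.
    print(breach_probability([0.25]))
    # Adding a second, identical system (redundancy) under the same assumption.
    print(breach_probability([0.25, 0.25]))
```

Under these assumptions each added barrier multiplies the breach probability down, which is why the model favors stacking barriers.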

Using this metaphor, the risk of Amazon going down is mitigated by building a system of redundant barriers (several server centers, backup, active fire extinguishing, etc.). This might seem like a tidy solution, but here we run into two problems with this probabilistic approach to risk: the view of the human operating the system and the increased complexity that comes as a result of introducing more and more barriers.

Controlling risk by analyzing the complete space of possible (and graspable) scenarios basically does not distinguish between safety and reliability. From this view, a system is safe when it is reliable, and the reliability of each barrier can be calculated. However, there is one system component that is more difficult to grasp in terms of reliability than any other: the human. Inevitably, proponents of the energy/barrier model of risk end up explaining incidents (typically accidents) in terms of unreliable human beings not guaranteeing the safety (reliability) of the inherently safe (risk controlled by reliable barriers) system. I think this problem, which has its own entire literature connected to it, is too big to outline in further detail in this blog post, but let me point you towards a few references: Dekker, 2005; Dekker, 2006; Woods, Dekker, Cook, Johannesen & Sarter, 2009. The only issue is that these (and most other citations in this post) are all academic tomes, so for those who would prefer a shorter summary available online, I can refer you to this report. I can also reassure you that I will get back to this issue in my keynote speech at the Velocity conference next month. To put the critique briefly: the contemporary literature questions the view of humans as the unreliable component of inherently safe systems, and instead advocates a view of humans as the only ones guaranteeing safety in inherently complex and risky environments.

Read more…

Velocity Profile: Nicole Sullivan

Web ops and performance questions with Nicole Sullivan, architect at Stubbornella.

Nicole Sullivan discusses her favorite CSS tools and who she follows in the web ops & performance world.

Steve Souders on Web Performance Optimization

Why "even faster" matters in the web performance and optimization world.

Steve Souders on the state of web performance, optimization and velocity.