“You say you want a revolution,

Well, you know,

We all want to change the world.” — The Beatles

I loved Google engineer Steve Yegge’s rant about A) how Google doesn’t grok building and executing platforms, and B) how his ex-employer, Amazon, does.

First off, it bucks conventional wisdom. How could Google, the high priest of the cloud and the parent of Android, analytics and AdWords/AdSense, not be a standard-setter for platform creation?

Second, Amazon’s strategy seems to be to embrace “open” Android and use it to build a platform that’s proprietary to Amazon. That’s a heck of a story to watch unfold in the months ahead, even more so knowing that Amazon has serious platform mojo.

But mostly, I loved the piece because it underscores the granular truth about just how hard it is to execute a coherent platform strategy in the real world.

Put another way, Yegge’s rant, and what it suggests about Google’s and Amazon’s platform readiness, provides the best insider’s point of reference for appreciating how Apple has played chess to everyone’s checkers in the post-PC platform wars.

Case in point: What company other than Apple could have executed something even remotely as rich and well-integrated as the simultaneous release of iOS 5, iCloud and iPhone 4S, the last of which sold four million units in its first weekend of availability?

Let me answer that for you: No one.

Post-PC: Putting humans into the center of the computing equation

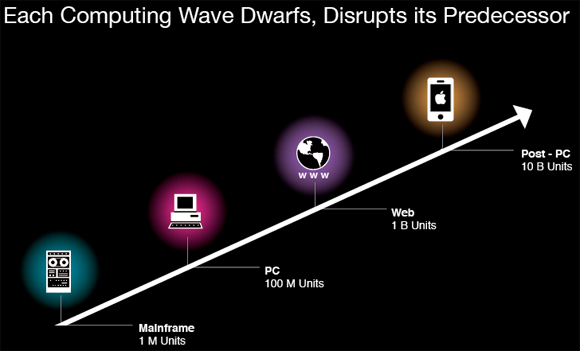

There is a truism that each wave of computing not only disrupts, but dwarfs its predecessor.

The mainframe was dwarfed by the PC, which in turn has been subordinated by the web. But now, a new kind of device is taking over. It’s mobile, lightweight, simple to use, connected, has a long battery life and is a digital machine for running native apps, browsing the web, playing games and all kinds of media, taking photos and communicating.

Given its multiplicity of capabilities, it’s not hard to imagine a future where post-PC devices dot every nook and cranny of the planet (an estimated 10 billion devices by 2020, according to Morgan Stanley).

But an analysis of evolving computing models suggests a second, less obvious moral of the story. Namely, when you solve the right core problems central to enabling the emergent wave (as opposed to just bolting on more stuff), all sorts of lifecycle advantages come your way.

In the PC era, for example, the core problems were centered on creating homogeneity to get to scale and to give developers a singular platform to program around, something that the Wintel hardware-software duopoly addressed with bull’s-eye accuracy. As a result, Microsoft and Intel captured the lion’s share of the industry’s profits.

By contrast, the wonderful thing about the way that the web emerged is that HTML initially made it so simple to “write once, run anywhere” that any new idea — brilliant or otherwise — could rapidly go from napkin to launch to global presence. The revolution was completely decentralized, and suddenly, web-based applications were absorbing more and more of the PC’s reason for being.

Making all of this new content discoverable via search and monetizable (usually via advertising) thus became the core problem where the lion’s share of profits flowed, and Google became the icon of the web.

The downside of this is that because the premise of the web is about abstracting out hardware and OS specificity, browsers are prone to crashing, slowdowns and sub-optimal performance. Very little about the web screams out “great design” or “magical user experience.”

Enter Apple. It brought back a fundamental appreciation of the goodness of “native” experiences built around deeply integrated hardware, software and service platforms.

Equally important, Apple’s emphasis on outcomes over attributes led it to marry design, technology and liberal arts in ways that brought humans into the center of the computing equation, such that for many, an iPhone, iPod Touch or iPad is the most “personal” computer they have ever owned.

Apple’s success in this regard is best appreciated by how it took a touch-based interface model and made it seamless and invisible across different device types and interaction methods. Touch facilitated the emotional bond that users have with their iPhones, iPads and the like. Touch is one of the human senses, after all.

Thus, it’s little surprise that the lion’s share of profits in the post-PC computing space is flowing to the company that is delivering the best, most human-centric user experience: Apple.

Now, Apple is opening a second formal interface into iOS through Siri, a voice-based helper system that is enmeshed in the land of artificial intelligence and automated agents. This was noted by Daring Fireball’s John Gruber in an excellent analysis of the iPhone 4S:

… Siri is indicative of an AI-focused ambition that Apple hasn’t shown since before Steve Jobs returned to the company. Prior to Siri, iOS struck me being designed to make it easy for us to do things. Siri is designed to do things for us.

Once again, Apple is looking to one of the human senses (this time, hearing) to provide a window for users into computing. While many look at Siri as a concept that’s bound to fail, if Apple gets Siri right, it could become even more transformational than touch, particularly as Siri’s dictionary, grammar and contextual understanding grow.

Taken together, a new picture of the evolution of computing starts to emerge. An industry that was once defined by the singular goal of achieving power (the mainframe era) morphed over time into the noble ambition of achieving ubiquity via the “PC on every desktop” era. It then evolved into the ideal of universality via the universal access model of the web, which in turn was aided by lots of free, ad-supported sites and services. Now, human-centricity is emerging as the raison d’être for computing, and it seems clear that the inmates will never run the asylum again. That may quite possibly be the greatest legacy of Steve Jobs.

Do technology revolutions drive economic revolutions?

In these difficult economic times, it is fair to ask whether the rise of post-PC computing is destined to be a catalyst for economic revival. After all, we’ve seen the Internet disrupt industry after industry with a brutal efficiency that has arguably wiped out more jobs than it has created.

Before answering that, though, let me note that while the seminal revolutions always appear in retrospect to occur in one magical moment, in truth, they play out as a series of compounding innovations, punctuated by a handful of catalytic, game-changing events.

For example, it may seem that the Industrial Revolution occurred spontaneously, but the truth is that for the revolution to realize its destiny, multiple concurrent innovations had to occur in manufacturing, energy utilization, information exchange and machine tools. And all of this was aided by significant public infrastructure development. It took continuous, measurable improvements in the products, markets, suppliers and sales channels participating in the embryonic wave before things sufficiently coalesced to transform society, launch new industries, create jobs, and rain serious material wealth on the economy.

It’s often a painful, messy process going from infancy to maturation, and it may take still more time for this latest wave to play out in our society. But I fully believe that we are approaching what VC John Doerr refers to as the “third wave” in technology:

We are at the beginning of a third wave in technology (the prior two were the commercialization of the microprocessor, followed 15 years later by the advent of the web), which is this convergence of mobile and social technologies made possible by the cloud. We will see the creation of multiple multi-billion-dollar businesses, and equally important, tens maybe hundreds of thousands of smaller companies.

For many folks, the revolution can’t come soon enough. But it is coming.

Quantifying the post-PC “standard bearers”

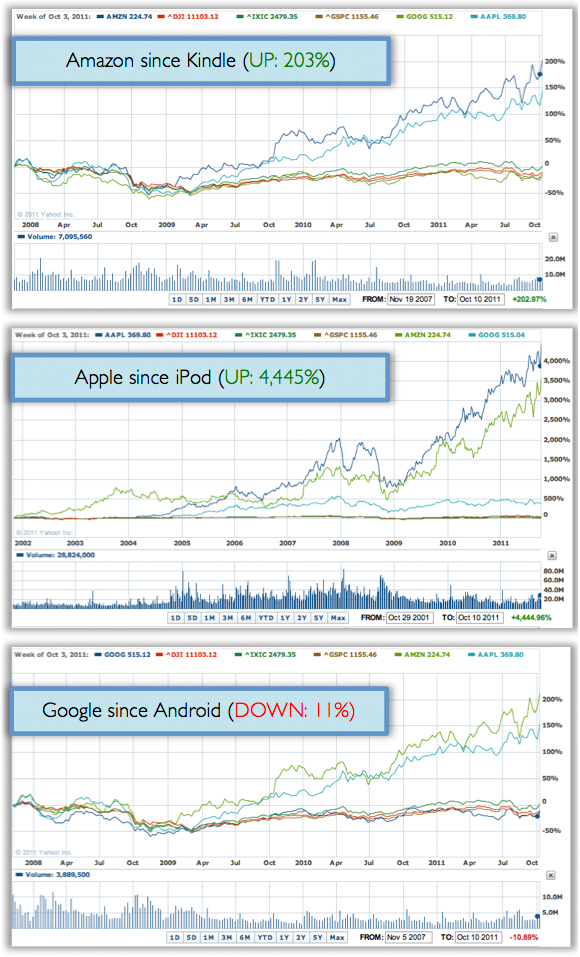

A couple of years back, I wrote an article called “Built-to-Thrive — The Standard Bearers,” where I argued that Apple was the gold standard company (i.e., the measuring stick by which all others are judged), Google was the silver and Amazon was the bronze.

The only re-thinking I have with respect to that medal stand is that Amazon and Google have now flipped places.

Most fundamentally, this exemplifies:

- How well Apple has succeeded in actually solving the core problems of its constituency base through an integrated, human-centered platform.

- How Amazon has gained religion about the importance of platform practice.

- How, as Yegge noted, Google doesn’t always “eat its own dog food.”

If you doubt this, check out the adjacent charts, which spotlight the relative stock performance of Apple, Amazon and Google after each company’s strategic foray into post-PC computing: namely, iPod, Kindle and Android, respectively.

This is one of those cases where the numbers may surprise, but they don’t lie.
