My first child was born just about nine months ago. From the hospital window on that memorable day, I could see that it was surprisingly sunny for a Berkeley autumn afternoon. At the time, I’d slept only about three of the previous 38 hours. My mind was making up for the missing haze that usually fills the Berkeley sky. Despite my cloudy state, I can easily recall the moments I spent that first afternoon lying with my newborn son. In those minutes, he cleared my mind better than the sun had cleared the Berkeley skies.

While my wife slept and recovered, I talked to my boy, welcoming him into this strange world and his newfound existence. I told him how excited I was for him to learn about it all: the sky, planets, stars, galaxies, animals, happiness, sadness, laughter. As I talked, I realized how many concepts I understood that he lacked. For every new thing I mentioned, there were 10 more that he would need to learn just to understand that one.

Of course, he need not know specific facts to appreciate the sun’s warmth, but to understand what the sun is, he must first learn the pyramid of knowledge that encapsulates our understanding of it: He must learn to distinguish self from other; he must learn about time, scale, distance, and proportion, light and energy, motion, vision, sensation, and so on.

I mentioned time. Ultimately, I regressed to talking about language, mathematics, history, ancient Egypt, and the Pyramids. It was the verbal equivalent of “wiki walking,” wherein I go to Wikipedia to look up an innocuous fact, such as the density of gold, and find myself reading about Mesopotamian religious practices an hour later.

It struck me then how incredible human culture, science, and technology truly are. For billions of years, life was restricted to a nearly memoryless existence, at most relying upon brief changes in chemical gradients to move closer to nutrient sources or farther from toxins.

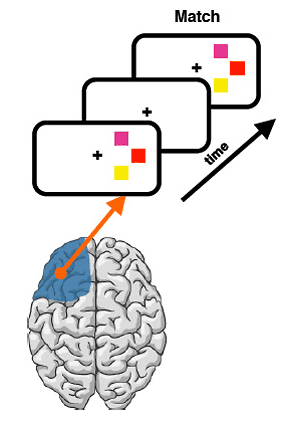

With time, these basic chemo- and photo-sensory apparatuses evolved; creatures with longer memories, perhaps long enough to remember where food sources were richest, possessed an evolutionary advantage. Eventually, the time scales on which memory operates grew longer; short-term memory became long-term memory, and brains evolved the ability to maintain a memory across an entire biological lifetime. (In fact, how the brain coordinates such memories is a core question of my neuroscientific research.)

However, memory did not stop there. Language permitted interpersonal communication, and primates finally overcame the memory limitations of a single lifespan. Writing and culture gave memory greater permanence by freeing knowledge from having to pass by word of mouth, which improved the fidelity of memory and minimized the costs of the “telephone game effect.”

We are now in the digital age, where we are freed from the confines of needing to remember a phone number or other arbitrary facts. While I’d like to think that we’re using this “extra storage” for useful purposes, sadly I can tell you more about the minutiae of the Marvel Universe and “Star Wars” canon than will ever be useful (short of an alien invasion in which our survival as a species is predicated on my ability to tell you that Nightcrawler doesn’t, strictly speaking, teleport, but rather travels through another dimension, and when he reappears in our dimension the “BAMF” sound results from sulfuric gases entering our dimension upon his return).

But I wiki-walk digress.

So what does all of this extra memory gain us?

Accelerated innovation.

As a scientist, my (hopefully) novel research is built upon the unfathomable number of failures and successes of those who came before me. The common refrain is that we scientists stand on the shoulders of giants. It is for this reason that I’ve previously argued that research funding is so critical, even for apparently “frivolous” projects. I’ve got a Google Doc noting impressive breakthroughs that emerged from research that, on the surface, has no “practical” value:

- Research on black holes helped create Wi-Fi

- Optometry saved lives on 9/11 via architecture

- Growing bacteria in dirty petri dishes led to penicillin

- Studying monkey social behaviors and eating habits led to insights into HIV

Although you can’t legislate innovation or democratize a breakthrough, you can encourage a system that maximizes the probability that a breakthrough can occur. This is what science should be doing and this is, to a certain extent, what Silicon Valley is already doing.

The more data, information, software, tools, and knowledge available, the more we as a society can build upon previous work. (That said, even though I’m a huge proponent of more data, the most transformational theory in biology came about from solid critical thinking, logic, and sparse data collection.)

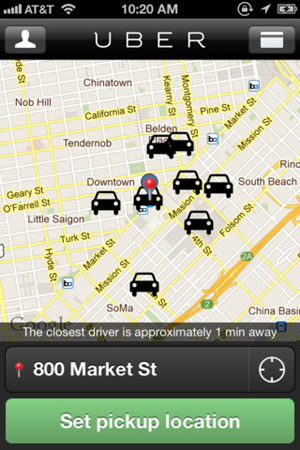

Of course, I’m biased, but I’m going to talk about two projects in which I’m involved: one business and one scientific. The first is Uber, an on-demand car service that allows users to request a private car via their smartphone or SMS. Uber is built using a variety of open source software and tools such as Python, MySQL, Node.js, and others. These systems helped make Uber possible.

As a non-engineer, I find it staggering to think of the complexity of the systems that make Uber work: GPS, accurate mapping tools, a reliable cellular/SMS system, an automated dispatching system, and so on. But we as a culture become so quickly accustomed to certain advances that, should our system ever experience a service disruption, Louis C.K. would almost certainly be proven prophetic about the response.

The other project in which I’m involved is brainSCANr. My wife and I recently published a paper on this, but the basic idea is that we mined the text of more than three million peer-reviewed neuroscience research articles to find associations between topics and search for potentially missing links (which we called “semi-automated hypothesis generation”).
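For readers who want a feel for what “finding associations between topics” means computationally, here is a minimal sketch in Python. It is not the brainSCANr code: the toy corpus, the term names, and the association score (articles mentioning both terms divided by articles mentioning the rarer term) are assumptions chosen for illustration. The “missing link” step flags pairs of terms that never appear together but are each strongly tied to a shared bridge term.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy corpus: each "article" is reduced to the set of topic terms it mentions.
articles = [
    {"dopamine", "striatum"},
    {"dopamine", "striatum", "reward"},
    {"striatum", "habit"},
    {"dopamine", "reward"},
    {"habit", "striatum"},
]


def cooccurrence(articles):
    """Count how many articles mention each term and each unordered pair of terms."""
    term_counts = Counter()
    pair_counts = Counter()
    for terms in articles:
        term_counts.update(terms)
        pair_counts.update(frozenset(p) for p in combinations(sorted(terms), 2))
    return term_counts, pair_counts


def association(a, b, term_counts, pair_counts):
    """Hypothetical score: shared articles divided by articles mentioning the rarer term."""
    shared = pair_counts[frozenset((a, b))]
    rarer = min(term_counts[a], term_counts[b])
    return shared / rarer if rarer else 0.0


def missing_links(term_counts, pair_counts, threshold=0.5):
    """Return (a, c, bridges) for pairs never mentioned together that share strong bridge terms."""
    terms = sorted(term_counts)
    candidates = []
    for a, c in combinations(terms, 2):
        if pair_counts[frozenset((a, c))]:
            continue  # already studied together, so not a missing link
        bridges = [
            b for b in terms
            if b not in (a, c)
            and association(a, b, term_counts, pair_counts) >= threshold
            and association(c, b, term_counts, pair_counts) >= threshold
        ]
        if bridges:
            candidates.append((a, c, bridges))
    return candidates
```

On this toy corpus, `missing_links(*cooccurrence(articles))` returns `[("dopamine", "habit", ["striatum"]), ("habit", "reward", ["striatum"])]`: neither pair ever co-occurs, but both members of each pair are strongly tied to “striatum.” Normalizing by the rarer term is one way to keep a niche topic from being swamped by a very common one; other normalizations would rank candidates differently.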

We built the first version of the site in a week, using nothing but open data and tools. The National Library of Medicine, part of the National Institutes of Health, provides an API to search all of these manuscripts in its massive database of more than 20 million papers. We used Python to process the associations, the JavaScript InfoVis Toolkit to plot the data, and Google App Engine to host it all. I’m positive that when the NIH funded the creation of PubMed and its API, they didn’t have this kind of project in mind.
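As a rough illustration of the PubMed side, the sketch below builds a query against NCBI’s E-utilities ESearch endpoint and asks only for the number of matching records. The helper names and example terms are hypothetical, and the response shape assumed by `parse_count` (a JSON object whose `esearchresult` field holds the count as a string) should be checked against NCBI’s current E-utilities documentation. Counting papers for each term and for each AND-ed pair of terms is enough to compute a simple co-occurrence score.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def esearch_url(term):
    """Build an ESearch URL asking PubMed only for the count of records matching `term`."""
    params = {"db": "pubmed", "term": term, "rettype": "count", "retmode": "json"}
    return f"{ESEARCH}?{urlencode(params)}"


def parse_count(body):
    """Extract the hit count from an ESearch JSON response (assumed shape; count is a string)."""
    return int(json.loads(body)["esearchresult"]["count"])


def pubmed_count(term):
    """Live network call. NCBI asks clients without an API key to stay at or under
    roughly three requests per second, so batch jobs should pause between calls."""
    with urlopen(esearch_url(term)) as resp:
        return parse_count(resp.read().decode("utf-8"))
```

For example, `esearch_url("dopamine AND striatum")` produces a URL ending in `term=dopamine+AND+striatum&rettype=count&retmode=json`. Calling `pubmed_count` on the same string performs a real request, so its result depends on the state of the database when you run it.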

That’s the great thing about making more tools available; it’s arrogant to think that we can anticipate the best ways to make use of our own creations. My hope is that brainSCANr is the weakest incarnation of this kind of scientific text mining, and that bigger and better things will come of it.

Twenty years ago, these projects would have been practically impossible; the labor required to build them would have been prohibitive. Now they can be built by a handful of people (or a guy and his pregnant wife) in a week.

Just as research into black holes can lead to a breakthrough in wireless communication, so too can seemingly trivial software technologies open amazing and unpredictable frontiers. Who would have guessed that what began as a simple online bookstore would grow into Amazon Web Services, a tool that is playing an increasingly important role in innovation and in scientific computing tasks such as genetic sequencing?

So, before you scoff at the “pointlessness” of social networks or the wastefulness of “another web service,” remember that we don’t always do the research that will lead to the best immediate applications or build the company that is immediately useful or profitable. Nor can we always anticipate how our products will be used. It’s easy to mock Twitter because you don’t care to hear about who ate what for lunch, but I guarantee that the people whose lives were saved after the Haiti earthquake, or who helped spark the Arab Spring, are happy Twitter exists.

While we might have to justify ourselves to granting agencies, or venture capitalists, or our shareholders in order to do the work we want to do, sometimes the “real” reason we spend so much of our time working is the same reason people climb mountains: because it’s awesome that we can. That said, it’s nice to know that what we’re building now will be improved upon by our children in ways we can’t even conceive.

I can’t wait to have this conversation with my son when — after learning how to talk, of course — he’s had a chance to build on the frivolities of my generation.
