"data scientists" entries

How an enterprise begins its big data journey

An ETL offload solution addresses the challenges of data overload, rising costs, and the skills gap.

As the amount of data continues to double in size every two years, organizations are struggling more than ever before to manage, ingest, store, process, transform, and analyze massive data sets. It has become clear that getting started on the road to using data successfully can be a difficult task, especially with a growing number of new data sources, demands for fresher data, and the need for increased processing capacity. In order to advance operational efficiencies and drive business growth, however, organizations must address and overcome these challenges.

In recent years, many organizations have heavily invested in the development of enterprise data warehouses (EDW) to serve as the central data system for reporting, extract/transform/load (ETL) processes, and ways to take in data (data ingestion) from diverse databases and other sources both inside and outside the enterprise. Yet, as the volume, velocity, and variety of data continue to increase, already expensive and cumbersome EDWs are becoming overloaded with data. Furthermore, traditional ETL tools are unable to handle all the data being generated, creating bottlenecks in the EDW that result in major processing burdens.

As a result of this overload, organizations are now turning to open source tools like Hadoop as cost-effective solutions to offloading data warehouse processing functions from the EDW. While Hadoop can help organizations lower costs and increase efficiency by being used as a complement to data warehouse activities, most businesses still lack the skill sets required to deploy Hadoop. Read more…

Understanding neural function and virtual reality

The O'Reilly Data Show Podcast: Poppy Crum explains that what matters is efficiency in identifying and emphasizing relevant data.

Like many data scientists, I’m excited about advances in large-scale machine learning, particularly recent success stories in computer vision and speech recognition. But I’m also cognizant of the fact that press coverage tends to inflate what current systems can do, and their similarities to how the brain works.

During the latest episode of the O’Reilly Data Show Podcast, I had a chance to speak with Poppy Crum, a neuroscientist who gave a well-received keynote at Strata + Hadoop World in San Jose. She leads a research group at Dolby Labs and teaches a popular course at Stanford on Neuroplasticity in Musical Gaming. I wanted to get her take on AI and virtual reality systems, and hear about her experience building a team of researchers from diverse disciplines.

Understanding neural function

While it can sometimes be nice to mimic nature, in the case of the brain, machine learning researchers recognize that understanding and identifying the essential neural processes is much more critical. A related example cited by machine learning researchers is flight: wing flapping and feathers aren’t critical, but an understanding of physics and aerodynamics is essential.

Crum and other neuroscience researchers express the same sentiment. She points out that a more meaningful goal should be to “extract and integrate relevant neural processing strategies when applicable, but also identify where there may be opportunities to be more efficient.”

The goal in technology shouldn’t be to build algorithms that mimic neural function. Rather, it’s to understand neural function. … The brain is basically, in many cases, a Rube Goldberg machine. We’ve got this limited set of evolutionary building blocks that we are able to use to get to a sort of very complex end state. We need to be able to extract when that’s relevant and integrate relevant neural processing strategies when it’s applicable. We also want to be able to identify that there are opportunities to be more efficient and more relevant. I think of it as table manners. You have to know all the rules before you can break them. That’s the big difference between being really cool or being a complete heathen. The same thing kind of exists in this area. How we get to the end state, we may be able to compromise, but we absolutely need to be thinking about what matters in neural function for perception. From my world, where we can’t compromise is on the output. I really feel like we need a lot more work in this area. Read more…

How real-time analytics integrates with our connected world

The O'Reilly Podcast: Scott Jarr on how real-time analytics applications can unlock value and automate decision-making.

In this special-edition O’Reilly Podcast, O’Reilly’s Ben Lorica and VoltDB’s co-founder Scott Jarr discuss how VoltDB’s hybrid transactional and analytic system allows for real-time analytics and personalization of data across various industries.

Scaling transaction processing without losing the relational database

MIT’s Mike Stonebraker (VoltDB’s co-founder) wanted to scale traditional OLTP (online transaction processing) without losing performance. The project evolved and was eventually commercialized as VoltDB around the time NoSQL systems introduced a paradigm shift to non-relational databases. Jarr describes how Stonebraker’s approach didn’t assume a relational database was a core issue:

To give you an old story, but it’s a good story, they took a traditional style OLTP database and they ran it in memory. What they found was that it was doing less than 10% of its effective workload in processing transactions. The rest was dealing with overhead in various forms. He said, ‘Without getting rid of any of the things that we know [are] involved in the database world — consistency, SQL, ACID transactions, relational structures, high-level query languages — let’s keep all that, but let’s see if we can make this thing go faster.’

When those [NoSQL] systems were coming out, and they were coming out very strong, it was around the same time we were coming out with VoltDB. People were asking questions, ‘Well you’re consistent and they’re not.’ Or, ‘You’re relational and they’re not.’ I think that really lost the true meaning of what the differences were … [let’s] not get mired in the details … let’s look at the workloads that people are trying to accomplish.

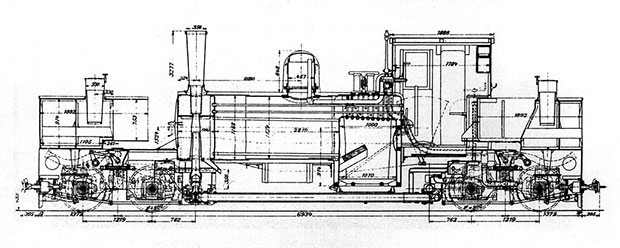

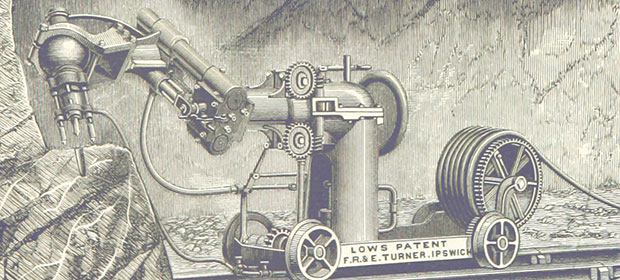

How trains are becoming data driven

Railways are at the intersection of Internet and industry.

Trains and public transport are, for many of us, a vital part of our daily lives. Large cities are particularly dependent on an efficient public transport system, and if disruption occurs, it usually affects many passengers while spreading across the transport network. But our requirements as passengers are growing and maturing. Safety is paramount, but we also care about timeliness, comfort, Internet access, and other amenities. With strong competition for regional and long-distance trains, providing an attractive service has become critical for many rail operators today.

The railway industry is an old industry. For the last 150 years, this industry was built around mechanical systems maintained throughout a lifetime of 30 years, mostly through reactive or preventive maintenance. But this is not enough anymore to deliver the type of service we all want and expect to experience.

Deriving insight from the data of trains

Over the last few years, the rail industry has been transforming itself, embracing IT, digitalization, big data, and the related changes in business models. This change is driven by railway operating companies that demand higher vehicle and infrastructure availability and, increasingly, want to transfer their operational risk to suppliers. In parallel, the thought leaders among maintenance providers have embraced the technology opportunities to radically improve their offerings and help their customers deliver better value. Read more…

Big data, interactive access: How Apache Drill makes it easy

True SQL queries? Yes. Parquet and other complex data structures? Yes. Drill 1.1 is full of surprises.

Register for the free webcast “Easy, real-time access to data with Apache Drill,” which will be held Thursday, July 30, 2015, at 10 a.m. PT. This panel discussion will explore the major role SQL-on-Hadoop technologies play in organizations.

Big data techniques are becoming mainstream in an increasing number of businesses, but how do people get self-service, interactive access to their big data? And how do they do this without having to train their SQL-literate employees to be advanced developers?

One solution is to take advantage of the rapidly maturing open source, open community software tool known as Apache Drill. Drill is not the first SQL-on-Hadoop tool. It is, however, a new, sophisticated, and highly scalable SQL query engine that has been built from the ground up to be appropriate for use even in production settings. Drill extends query capabilities to a variety of new data sources and formats without the requirement for IT intervention that might be expected from a SQL query engine. In short, Drill allows self-exploration of data by providing flexibility along with performance.

As capabilities in the big data world have progressed, our understanding of what is needed for high-performance, enterprise-grade architectures has also increased. A need for a SQL solution for the Hadoop and NoSQL space was recognized fairly early, and it’s not surprising that to meet an urgent need, some of the first tools approached the problem with SQL-like syntax and made compromises that led to limitations in the data sources and formats they could handle well. Read more…

Big data, small cluster

Finding new ways to shrink disk space for storing partitionable data.

Register for the free webcast, “Extending Cassandra with Doradus OLAP for High Performance Analytics,” which will be held July 29 at 9 a.m. PT.

Engineers at Dell were developing customer apps when they found that the query response times their customers were demanding — something on the order of seconds (in other words, the need to scan millions of objects/second) — required a new type of query engine. This led them on a four-year journey to create Doradus, one of Dell Software Group’s first open-source projects.

Doradus is a server framework that runs on top of Cassandra. To build Doradus, the team borrowed from several well-accepted paradigms. They used traditional OLAP techniques to allow data to be arranged into static, multidimensional cubes. They leveraged the vertical orientation and efficient compression of columnar databases. And, from the NoSQL world, they employed sharding. The result: a storage and query engine called Doradus OLAP that stores data at up to 1M objects/second/node, providing nearly real-time data warehousing. This architecture also allows for extreme compression of the data, sometimes producing up to a 99% reduction in space usage.

This extremely dense storage means that data that once took multiple nodes can now be stored on a single node, allowing for fast queries without the expense of a large cluster. Because Doradus is built on top of Cassandra, the option to scale out is still there. This allows for sharding and replication, and also takes advantage of Cassandra’s failover features. Read more…
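
To make the combination of sharding and columnar compression more concrete, here is a minimal Python sketch. It is not Doradus code and does not reflect its actual storage format, which the excerpt does not describe. The records, the field names (`day`, `status`), and the use of run-length encoding as the compression step are all assumptions chosen for illustration.

```python
from collections import defaultdict
from itertools import groupby

# Hypothetical event records; the field names are illustrative assumptions.
events = [
    {"day": "2015-07-01", "status": "ok"},
    {"day": "2015-07-01", "status": "ok"},
    {"day": "2015-07-01", "status": "ok"},
    {"day": "2015-07-01", "status": "error"},
    {"day": "2015-07-02", "status": "ok"},
    {"day": "2015-07-02", "status": "ok"},
]

def shard_by(records, key):
    """Partition records into shards keyed by the value of one field."""
    shards = defaultdict(list)
    for record in records:
        shards[record[key]].append(record)
    return dict(shards)

def to_columns(records):
    """Turn a list of row dicts into one list of values per field."""
    columns = defaultdict(list)
    for record in records:
        for field, value in record.items():
            columns[field].append(value)
    return dict(columns)

def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, sum(1 for _ in run)) for value, run in groupby(values)]

def run_length_decode(pairs):
    """Expand (value, count) pairs back into the original list."""
    decoded = []
    for value, count in pairs:
        decoded.extend([value] * count)
    return decoded

shards = shard_by(events, "day")
columns = to_columns(shards["2015-07-01"])
encoded_status = run_length_encode(columns["status"])
```

Storing each field as its own column puts similar values next to each other, which is why even a simple scheme such as run-length encoding can shrink repetitive data considerably. A query that touches only one shard and one column also reads far less data than a scan over full rows.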

Data has a shape

Using topology to uncover the shape of your data: An interview with Gurjeet Singh.

Get notified when our free report, “Future of Machine Intelligence: Perspectives from Leading Practitioners,” is available for download. The following interview is one of many that will be included in the report.

As part of our ongoing series of interviews surveying the frontiers of machine intelligence, I recently interviewed Gurjeet Singh. Singh is CEO and co-founder of Ayasdi, a company that leverages machine intelligence software to automate and accelerate discovery of data insights. Author of numerous patents and publications in top mathematics and computer science journals, Singh has developed key mathematical and machine learning algorithms for topological data analysis.

Key Takeaways

- The field of topology studies the mapping of one space into another through continuous deformations.

- Machine learning algorithms produce functional mappings from an input space to an output space and lend themselves to be understood using the formalisms of topology.

- A topological approach allows you to study data sets without assuming a shape beforehand and to combine various machine learning techniques while maintaining guarantees about the underlying shape of the data.

David Beyer: Let’s get started by talking about your background and how you got to where you are today.

Gurjeet Singh: I am a mathematician and a computer scientist, originally from India. I got my start in the field at Texas Instruments, building integrated software and performing digital design. While at TI, I got to work on a project using clusters of specialized chips called Digital Signal Processors (DSPs) to solve computationally hard math problems.

As an engineer by training, I had a visceral fear of advanced math. I didn’t want to be found out as a fake, so I enrolled in the Computational Math program at Stanford. There, I was able to apply some of my DSP work to solving partial differential equations and demonstrate that a fluid dynamics researcher need not buy a supercomputer anymore; they could just employ a cluster of DSPs to run the system. I then spent some time in mechanical engineering building similar GPU-based partial differential equation solvers for mechanical systems. Finally, I worked in Andrew Ng’s lab at Stanford, building a quadruped robot and programming it to learn to walk by itself. Read more…

6 reasons why I like KeystoneML

The O'Reilly Data Show Podcast: Ben Recht on optimization, compressed sensing, and large-scale machine learning pipelines.

As we put the finishing touches on what promises to be another outstanding Hardcore Data Science Day at Strata + Hadoop World in New York, I sat down with my co-organizer Ben Recht for the latest episode of the O’Reilly Data Show Podcast. Recht is a UC Berkeley faculty member and member of AMPLab, and his research spans many areas of interest to data scientists including optimization, compressed sensing, statistics, and machine learning.

At the 2014 Strata + Hadoop World in NYC, Recht gave an overview of a nascent AMPLab research initiative into machine learning pipelines. The research team behind the project recently released an alpha version of a new software framework called KeystoneML, which gives developers a chance to test out some of the ideas that Recht outlined in his talk last year. We devoted a portion of this Data Show episode to machine learning pipelines in general, and a discussion of KeystoneML in particular.
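
For readers new to the idea, a machine learning pipeline can be thought of as a chain of small stages, each feeding its output to the next. The sketch below shows that idea in plain Python. It does not use KeystoneML or mirror its API, and the stage names (`tokenize`, `remove_short_tokens`, `count_tokens`) and the helper `make_pipeline` are hypothetical, chosen only for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical stages for illustration; this is not KeystoneML's API.
def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()

def remove_short_tokens(tokens: List[str]) -> List[str]:
    """Keep only tokens longer than two characters."""
    return [token for token in tokens if len(token) > 2]

def count_tokens(tokens: List[str]) -> Dict[str, int]:
    """Build a simple bag-of-words count."""
    counts: Dict[str, int] = {}
    for token in tokens:
        counts[token] = counts.get(token, 0) + 1
    return counts

def make_pipeline(*stages: Callable) -> Callable:
    """Chain stages so that the output of each one feeds the next."""
    def run(value):
        for stage in stages:
            value = stage(value)
        return value
    return run

featurize = make_pipeline(tokenize, remove_short_tokens, count_tokens)
features = featurize("The cat saw the other cat")
```

Because each stage is an ordinary function, stages can be swapped or reordered without touching the rest of the chain, which is the property that makes pipelines convenient for experimentation.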

Since its release in May, I’ve had a chance to play around with KeystoneML and while it’s quite new, there are several things I already like about it:

KeystoneML opens up new data types

Most data scientists don’t normally play around with images or audio files. KeystoneML ships with easy to use sample pipelines for computer vision and speech. As more data loaders get created, KeystoneML will enable data scientists to leverage many more new data types and tackle new problems. Read more…

The key to agile data science: experimentation

A real-world example of how a short delivery cycle fosters creativity.

I lead a research team of data scientists responsible for discovering insights that lead to market and competitive intelligence for our company, Computer Sciences Corporation (CSC). We are a busy group. We get questions from all different areas of the company and it’s important to be agile.

The nature of data science is experimental. You don’t know the answer to the question asked of you — or even if an answer exists. You don’t know how long it will take to produce a result or how much data you need. The easiest approach is to just come up with an idea and work on it until you have something. But for those of us with deadlines and expectations, that approach doesn’t fly. Companies that issue you regular paychecks usually want insight into your progress.

This is where being agile matters. An agile data scientist works in small iterations, pivots based on results, and learns along the way. Being agile doesn’t guarantee that an idea will succeed, but it does decrease the amount of time it takes to spot a dead end. Agile data science lets you deliver results on a regular basis and it keeps stakeholders engaged.

The key to agile data science is delivering data products in defined time boxes — say, two- to three-week sprints. Short delivery cycles force us to be creative and break our research into small chunks that can be tested using minimum viable experiments. We deliver something tangible after almost every sprint for our stakeholders to review and give us feedback. Our stakeholders get better visibility into our work, and we learn early on if we are on track.

This approach might sound obvious, but it isn’t always natural for the team. We have to get used to working on just enough to meet stakeholders’ needs and resist the urge to make solutions perfect before moving on. After we make something work in one sprint, we make it better in the next only if we can find a really good reason to do so. Read more…

The truth about MapReduce performance on SSDs

Cost-per-performance is approaching parity with HDDs.

Karthik Kambatla co-authored this post.

It is well-known that solid-state drives (SSDs) are fast and expensive. But exactly how much faster — and more expensive — are they than the hard disk drives (HDDs) they’re supposed to replace? And does anything change for big data?

I work on the performance engineering team at Cloudera, a data management vendor. It is my job to understand performance implications across customers and across evolving technology trends. The convergence of SSDs and big data does have the potential to broadly impact future data center architectures. When one of our hardware partners loaned us a number of SSDs with the mandate to “find something interesting,” we jumped on the opportunity. This post shares our findings.

As a starting point, we decided to focus on MapReduce. We chose MapReduce because it enjoys wide deployment across many industry verticals — even as other big data frameworks such as SQL-on-Hadoop, free text search, machine learning, and NoSQL gain prominence.

We considered two scenarios: first, when setting up a new cluster, we explored whether SSDs or HDDs of equal aggregate bandwidth are superior; second, we explored how cluster operators should configure SSDs when upgrading an HDD-only cluster. Read more…