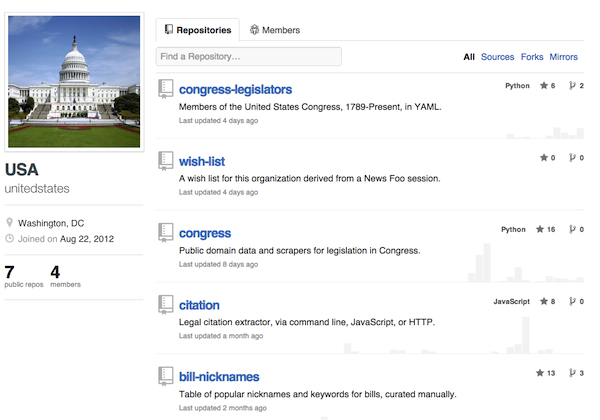

When Congress launched Congress.gov in beta, they didn’t open the data. This fall, a trio of open government developers took it upon themselves to do what custodians of the U.S. Code and laws in the Library of Congress could have done years ago: publish data and scrapers for legislation in Congress from THOMAS.gov in the public domain. The data at github.com/unitedstates is published using an “unlicense” and updated nightly. Credit for releasing this data to the public goes to Sunlight Foundation developer Eric Mill, GovTrack.us founder Josh Tauberer and New York Times developer Derek Willis.

“It would be fantastic if the relevant bodies published this data themselves and made these datasets and scrapers unnecessary,” said Mill, in an email interview. “It would increase the information’s accuracy and timeliness, and probably its breadth. It would certainly save us a lot of work! Until that time, I hope that our approach to this data, based on the joint experience of developers who have each worked with it for years, can model to government what developers who aim to serve the public are actually looking for online.”

If the People’s House is going to become a platform for the people, it will need to release its data to the people. If Congressional leaders want THOMAS.gov to be a platform for members of Congress, legislative staff, civic developers and media, the Library of Congress will need to release structured legislative data. THOMAS is also not updated in real-time, which means that there will continue to be a lag between a bill’s introduction and the nation’s ability to read the bill before a vote.

Until that happens, however, this combination of scraping and open source data publishing offers a way forward for releasing Congressional data to the public, wrote Willis on his personal blog:

Two years ago, there was a round of blog posts touched off by Clay Johnson that asked, “Why shouldn’t there be a GitHub for data?” My own view at the time was that availability of the data wasn’t as much an issue as smart usage and documentation of it: ‘We need to import, prune, massage, convert. It’s how we learn.’

Turns out that GitHub actually makes this easier, and I’ve had a conversion of sorts to the idea of putting data in version control systems that make it easier to view, download and report issues with data … I’m excited to see this repository grow to include not only other congressional information from THOMAS and the new Congress.gov site, but also related data from other sources. That this is already happening only shows me that for common government data this is a great way to go.

In the future, legislation data could be used to show iterations of laws and improve the ability of communities at OpenCongress, POPVOX or CrunchGov to discover and discuss proposals. As Congress incorporates more tablets on the floor during debates, such data could also be used to update legislative dashboards.

The choice to use Github as a platform for government data and scraper code is another significant milestone in a breakout year for Github’s use in government. In January, the British government committed GOV.UK code to Github. NASA, after contributing its first code in January, added 11 code repositories this year. In August, the White House committed code to Github. In September, the Open Gov Foundation open sourced the MADISON crowdsourced legislation platform.

The choice to use Github for this scraper and legislative data, however, presents a new and interesting iteration in the site’s open source story.

“Github is a great fit for this because it’s neutral ground and it’s a welcoming environment for other potential contributors,” wrote Sunlight Labs director Tom Lee, in an email. “Sunlight expects to invest substantial resources in maintaining and improving this codebase, but it’s not ours: we think the data made available by this code belongs to every American. Consequently the project needed to embrace a form that ensures that it will continue to exist, and be free of encumbrances, in a way that’s not dependent on any one organization’s fortunes.”

Mill, an open government developer at Sunlight Labs, shared more perspective in the rest of our email interview, below.

Is this based on the GovTrack.us scraper?

Eric Mill: All three of us have contributed at least one code change to our new THOMAS scraper; the majority of the code was written by me. Some of the code has been taken or adapted from Josh’s work.

The scraper that currently actively populates the information on GovTrack is an older Perl-based scraper. None of that code was used directly in this project. Josh had undertaken an incomplete, experimental rewrite of these scrapers in Python about a year ago (code), but my understanding is it never got to the point of replacing GovTrack’s original Perl scripts.

We used the code from this rewrite in our new scraper, and it was extremely helpful in two ways: providing a roadmap of how THOMAS’ URLs and sitemap work, and parsing meaning out of the text of official actions.

Parsing the meaning out of action text is, I would say, about half the value and work of the project. When you look at a page on GovTrack or OpenCongress and see the timeline of a bill’s life — “Passed House,” “Signed by the President,” etc. — that information is only obtainable by analyzing the order and nature of the sentences of the official actions that THOMAS lists. Sentences are finicky, inconsistent things, and extracting meaning from them is tricky work. Just scraping them out of THOMAS.gov’s HTML is only half the battle. Josh has experience at doing this for GovTrack. The code in which this experience was encapsulated drastically reduced how long it took to create this.
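To illustrate the kind of work Mill describes, here is a minimal Python sketch of turning action sentences into timeline events. It is not the project's code: the patterns, the labels, and the sample sentences are all invented for illustration, and a real scraper would need far more rules to cope with the inconsistencies he mentions.

```python
import re

# Hypothetical rules: each pairs a pattern with a status label.
# Neither the patterns nor the labels come from the unitedstates scraper.
RULES = [
    (re.compile(r"^referred to\b", re.IGNORECASE), "referred"),
    (re.compile(r"\bpassed house\b", re.IGNORECASE), "passed_house"),
    (re.compile(r"\bpassed senate\b", re.IGNORECASE), "passed_senate"),
    (re.compile(r"\bsigned by (?:the )?president\b", re.IGNORECASE), "signed"),
]


def classify_action(text):
    """Return the label of the first rule that matches, or None."""
    for pattern, label in RULES:
        if pattern.search(text):
            return label
    return None


def build_timeline(actions):
    """Keep only the actions that carry a recognized status, in order.

    `actions` is a list of (date_string, action_text) pairs.
    """
    timeline = []
    for day, text in actions:
        label = classify_action(text)
        if label is not None:
            timeline.append((day, label))
    return timeline


# Invented sample data in the general style of official action text.
SAMPLE_ACTIONS = [
    ("2012-01-10", "Referred to the House Committee on the Judiciary."),
    ("2012-02-01", "Sponsor introductory remarks on measure."),
    ("2012-03-05", "Passed House by voice vote."),
    ("2012-04-20", "Passed Senate without amendment."),
    ("2012-05-02", "Signed by the President."),
]

if __name__ == "__main__":
    for day, label in build_timeline(SAMPLE_ACTIONS):
        print(day, label)
```

The order of the rules matters, since the first match wins. That is one reason this kind of parsing is harder than scraping the sentences themselves.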

How long did this take to build?

Eric Mill: Creating the whole scraper, and the accompanying dataset, was about 4 weeks of work on my part. About half of that time was spent actually scraping — reverse engineering THOMAS’ HTML — and the other half was spent creating the necessary framework, documentation, and general level of rigor for this to be a project that the community can invest in and rely on.

There will certainly be more work to come. THOMAS is shutting down in a year, to be replaced by Congress.gov. As Congress.gov grows to have the same level of data as THOMAS, we’ll gradually transition the scraper to use Congress.gov as its data source.

Was this data online before? What’s new?

Eric Mill: All of the data in this project has existed in an open way at GovTrack.us, which has provided bulk data downloads for years. The Sunlight Foundation and OpenCongress have both created applications based on this data, as have many other people and organizations.

This project was undertaken as a collaboration because Josh and I believed that the data was fundamental enough that it should exist in a public, owner-less commons, and that the code to generate it should be in the same place.

There are other benefits, too. Although the source code to GovTrack’s scrapers has been available, it depends on being embedded in GovTrack’s system, and the use of a database server. It was also written in Perl, a language less widely used today, and produced only XML. This new Python scraper has no other dependencies, runs without a database, and generates both JSON and XML. It can be easily extended to output other data formats.
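As a rough sketch of what producing both formats from one in-memory record can look like, the following Python uses only the standard library. The field names and the sample bill are hypothetical and do not reflect the project's actual schema.

```python
import json
import xml.etree.ElementTree as ET


def to_json(bill):
    """Serialize a flat bill record as pretty-printed JSON."""
    return json.dumps(bill, indent=2, sort_keys=True)


def to_xml(bill):
    """Serialize the same record as XML, one child element per field.

    Assumes every key is a valid XML element name.
    """
    root = ET.Element("bill")
    for key, value in bill.items():
        child = ET.SubElement(root, key)
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")


# Hypothetical record; the keys are illustrative only.
EXAMPLE_BILL = {
    "bill_id": "hr1234-112",
    "title": "An example bill",
    "status": "REFERRED",
}

if __name__ == "__main__":
    print(to_json(EXAMPLE_BILL))
    print(to_xml(EXAMPLE_BILL))
```

Because both functions take the same dictionary, adding another output format means writing one more serializer rather than changing the scraping logic.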

Finally, everyone who worked on the project has had experience in dealing with legislative information. We were able to use that to make various improvements to how the data is structured and presented that make it easier for developers to use the data quickly and connect it to other data sources.

Searches for bills in Scout use data collected directly from this scraper. What else are people doing with the data?

Eric Mill: Right now, I only know for a fact that the Sunlight Foundation is using the data. GovTrack recently sent an email to its developer list announcing that in the near future, its existing dataset would be deprecated in favor of this new one, so the data should be used in GovTrack before long.

Pleasantly, I’ve found nearly nothing new by switching from GovTrack’s original dataset to this one. GovTrack’s data has always had a high level of quality. So far, the new dataset looks to be as good.

Is it common to host open data on Github?

Eric Mill: Not really. Github’s not designed for large-scale data hosting. This is an experiment to see whether this is a useful place to host it. The primary benefit is that no single person or organization (besides Github) is paying for download bandwidth.

The data is published as a convenience, for people to quickly download for analysis or curiosity. I expect that any person or project that intends to integrate the data into their work on an ongoing basis will do so by using the scraper, not downloading the data repeatedly from Github. It’s not our intent that anyone make their project dependent on the Github download links.

Laudably, Josh Tauberer donated his legislator dataset and converted it to YAML. What’s YAML?

Eric Mill: YAML is a lightweight data format intended to be easy for humans to both read and write. This dataset, unlike the one scraped from THOMAS, is maintained mostly through manual effort. Therefore, the data itself needs to be in source control, it needs to not be scary to look at and it needs to be obvious how to fix or improve it.

What’s in this legislator dataset? What can be done with it?

Eric Mill: The legislator dataset contains information about members of Congress from 1789 to the present day. It is a wealth of vital data for anyone doing any sort of application or analysis of members of Congress. This includes a breakdown of their name, a crosswalk of identifiers on other services, and social media accounts. Crucially, it also includes a member of Congress’ change in party, chamber, and name over time.

For example, it’s a pretty necessary companion to the dataset that our scraper gathers from THOMAS. THOMAS tells you the name of the person who sponsored this bill in 2003, and gives you a THOMAS-specific ID number. But it doesn’t tell you what that person’s party was at the time, or if the person is still a member of the same chamber now as they were in 2003 (or whether they’re in office at all). So if you want to say “how many Republicans sponsored bills in 2003,” or if you’d like to draw in information from outside sources, such as campaign finance information, you will need a dataset like the one that’s been publicly donated here.
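The lookup Mill describes can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the record layout, the ID, and the member are invented, and the real dataset is stored as YAML with its own structure.

```python
from datetime import date

# Invented legislator records keyed by a made-up THOMAS-style ID.
# Each term records the chamber and party that applied during that span.
LEGISLATORS = {
    "00001": {
        "name": "Example Member",
        "terms": [
            {"start": date(2001, 1, 3), "end": date(2005, 1, 3),
             "chamber": "house", "party": "Republican"},
            {"start": date(2005, 1, 3), "end": date(2011, 1, 3),
             "chamber": "senate", "party": "Independent"},
        ],
    },
}


def term_on(legislator, day):
    """Return the term covering `day`, or None if out of office."""
    for term in legislator["terms"]:
        if term["start"] <= day < term["end"]:
            return term
    return None


def party_on(thomas_id, day):
    """Return the member's party on `day`, or None if unknown."""
    legislator = LEGISLATORS.get(thomas_id)
    if legislator is None:
        return None
    term = term_on(legislator, day)
    return term["party"] if term else None


if __name__ == "__main__":
    # A bill sponsored in mid-2003 resolves to the party held at that time.
    print(party_on("00001", date(2003, 6, 1)))
```

Counting "Republicans who sponsored bills in 2003" then becomes a matter of calling `party_on` with each sponsor's ID and the bill's introduction date.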

Sunlight’s API on members of Congress is easily the most prominent API, widely used by people and organizations to build systems that involve legislators. That API’s data is a tiny subset of this new one.

You moved a legal citation extractor and a U.S. Code parser into this code. What do they do here?

Eric Mill: The legal citation extractor, called “Citation,” plucks references to the US Code (and other things) out of text. Just about any system that deals with legal documents benefits from discovering links between those documents. For example, I use this project to power US Code searches on Scout, so that the site returns results that cite some piece of the law, regardless of how that citation is formatted. There’s no text-based search, simple or advanced, that would bring back results matching a variety of formats or matching subsections — something dedicated to the arcane craft of citation formats is required.
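To show why plain text search falls short, here is a small Python sketch that normalizes two common ways of citing the US Code into one structure. It is not the Citation tool and does not use its API; the patterns are simplified assumptions that cover only the formats in the sample sentence.

```python
import re

# Matches forms like "5 U.S.C. 552(a)(1)" and "5 USC § 552".
TITLE_FIRST = re.compile(
    r"\b(?P<title>\d+)\s+U\.?S\.?C\.?\s*(?:§+\s*)?"
    r"(?P<section>\d+[A-Za-z]*)"
    r"(?P<subsections>(?:\([0-9A-Za-z]+\))*)"
)

# Matches forms like "section 1983 of title 42".
SECTION_FIRST = re.compile(
    r"\b[Ss]ection\s+(?P<section>\d+[A-Za-z]*)"
    r"(?P<subsections>(?:\([0-9A-Za-z]+\))*)"
    r"\s+of\s+title\s+(?P<title>\d+)"
)

PATTERNS = [TITLE_FIRST, SECTION_FIRST]


def extract_citations(text):
    """Return US Code citations found in `text`, in order of appearance."""
    found = []
    for pattern in PATTERNS:
        for match in pattern.finditer(text):
            found.append({
                "title": match.group("title"),
                "section": match.group("section"),
                # "(a)(1)" becomes ["a", "1"].
                "subsections": re.findall(
                    r"\(([0-9A-Za-z]+)\)", match.group("subsections")
                ),
                "start": match.start(),
            })
    found.sort(key=lambda citation: citation["start"])
    return found


if __name__ == "__main__":
    sample = "See 5 U.S.C. 552(a)(1) and section 1983 of title 42."
    for citation in extract_citations(sample):
        print(citation["title"], citation["section"], citation["subsections"])
```

Once every format maps to the same title, section, and subsection fields, a search for one section can return documents that cite it in any of the recognized styles.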

The citation extractor is built to be easy for others to invest in. It’s a stand-alone tool that can be used through the command line, HTTP, or directly through JavaScript. This makes it suitable for the front-end or back-end, and easy to integrate into a project written in any language. It’s very far from complete, but even now it’s already proven extremely useful at creating powerful features for us that weren’t possible before.

The parser for the U.S. Code itself is a dataset, written by my colleague Thom Neale. The U.S. Code is published by the government in various formats, but none of them are suitable for easy reuse. The Office of Law Revision Counsel, which publishes the U.S. Code, is planning on producing a dedicated XML version of the US Code, but they only began the procurement process recently. It could be quite some time before it appears.

Thom’s work parses the “locator code” form of the data, which is a binary format designed for telling GPO’s typesetting machines how to print documents. It is very specialized and very complicated. This parser is still in an early stage and not in use in production anywhere yet. When it’s ready, it’ll produce reliable JSON files containing the law of the United States in a sensible, reusable form.

Does Github’s organization structure make a data commons possible?

Eric Mill: Github deliberately aligns its interests with the open source community, so it is possible to host all of our code and data there for free. Github offers unlimited public repositories, collaborators, bandwidth, and disk space to organizations and users at no charge. They do this while being an extremely successful, profitable business.

On Github, there are two types of accounts: users and organizations. Organizations are independent entities, but no one has to log in as an organization or share a password. Instead, at least one user will be marked as the “owner” of an organization. Ownership can easily change hands or be distributed amongst various users. This means that Josh, Derek, and I can all have equal ownership of the “unitedstates” repositories and data. Any of us can extend that ownership to anyone we want in a simple, secure way, without password sharing.

Github as a company has established both a space and a culture that values the commons. All software development work, from hobbyist to non-profit to corporation, from web to mobile to enterprise, benefits from a foundation of open source code. Github is the best living example of this truth, so it’s not surprising to me that it was the best fit for our work.

Why is this important to the public?

Eric Mill: The work and artifacts of our government should be available in bulk, for easy download, in accessible formats, and without license restrictions. This is a principle that may sound important and obvious to every technologist out there, but it’s rarely the case in practice. When it is, the bag is usually mixed. Not every member of the public will be able or want to interact directly with our data or scrapers. That’s fine. Developers are the force multipliers of public information. Every citizen can benefit somehow from what a developer can build with government information.
