Alex Howard

Towards a more open world

5 minutes on how technology is changing the way we learn, live, and govern.

Last September, I gave a 5-minute Ignite talk at the tenth Ignite DC. The video just became available. My talk, embedded below, focused on what I’ve been writing about here at Radar for the past three years: open government, journalism, media, mobile technology and more.

The 20 slides that I used for the Ignite talk were a condensed version of a much longer presentation I’d created for a talk on open data and journalism in Moldova, which I’ve also embedded below.

Linking open data to augmented intelligence and the economy

Nigel Shadbolt on AI, ODI, and how personal, open data could empower consumers in the 21st century.

After years of steady growth, open data is now entering public discourse, particularly in the public sector. If President Barack Obama decides to put the White House’s long-awaited new open data mandate before the nation this spring, it will finally enter the mainstream.

As more governments, businesses, media organizations and institutions adopt open data initiatives, interest in the evidence for releasing data, and in the outcomes of doing so, is growing too. High hopes abound in many sectors, from development to energy to health to safety to transportation.

“Today, the digital revolution fueled by open data is starting to do for the modern world of agriculture what the industrial revolution did for agricultural productivity over the past century,” said Secretary of Agriculture Tom Vilsack, speaking at the G-8 Open Data for Agriculture Conference.

As other countries consider releasing their public sector information onto the Internet as machine-readable data, they’ll need to study and learn from years of effort at data.gov.uk in the United Kingdom, data.gov in the United States, and Kenya’s open data initiative in Africa.

One of the crucial sources of analysis for the success or failure of open data efforts will necessarily be research institutions and academics. That’s precisely why research from the Open Data Institute and Professor Nigel Shadbolt (@Nigel_Shadbolt) will matter in the months and years ahead.

In the following interview, Professor Shadbolt and I discuss what lies ahead. His responses were lightly edited for content and clarity.

White House Science Fair praises future scientists and makers

If we want kids to aspire to become scientists and technologists, we should celebrate academic achievement the way we celebrate athletics and celebrity.

There are few ways to better judge a nation’s character than to look at how its children are educated. What values do their parents, teachers and mentors demonstrate? What accomplishments are celebrated? In a world where championship sports teams are idolized and superstar athletes are feted by the media, it was gratifying to see science, students and teachers get their moment in the sun at the White House last week.

“…one of the things that I’m concerned about is that, as a culture, we’re great consumers of technology, but we’re not always properly respecting the people who are in the labs and behind the scenes creating the stuff that we now take for granted,” said President Barack Obama, “and we’ve got to give the millions of Americans who work in science and technology not only the kind of respect they deserve but also new ways to engage young people.”

President Barack Obama talks with Evan Jackson, 10, Alec Jackson, 8, and Caleb Robinson, 8, from McDonough, Ga., at the 2013 White House Science Fair in the State Dining Room. (Official White House Photo by Chuck Kennedy)

An increasingly fierce global competition for talent and natural resources has put a premium on developing scientists and engineers in the nation’s schools. On that count, the President announced a plan last week to promote careers in the sciences and expand federal and private-sector initiatives to encourage students to study STEM (science, technology, engineering and math) subjects.

“America has always been about discovery, and invention, and engineering, and science and evidence,” said the President, last week. “That’s who we are. That’s in our DNA. That’s how this country became the greatest economic power in the history of the world. That’s how we’re able to provide so many contributions to people all around the world with our scientific and medical and technological discoveries.”

Finding and telling data-driven stories in billions of tweets

Twitter has hired Guardian data editor Simon Rogers as its first data editor.

Twitter has hired its first data editor. Simon Rogers, one of the leading practitioners of data journalism in the world, will join Twitter in May. He will be moving his family from London to San Francisco and applying his skills to telling data-driven stories using tweets. James Ball will replace him as the Guardian’s new data editor.

As a data editor, will Rogers keep editing and producing something that we’ll recognize as journalism? Will his work at Twitter be different from what Google Think or Facebook Stories delivers? Different in terms of how he tells stories with data? Or is the difference that Twitter has a lot more revenue coming in, or that it sees data-driven storytelling as core to driving more business? (Rogers wouldn’t comment on those points.)

Sprinting toward the future of Jamaica

Open data is fundamental to democratic governance and development, say Jamaican officials and academics.

Creating the conditions for startups to form is now a policy imperative for governments around the world, as Julian Jay Robinson, minister of state in Jamaica’s Ministry of Science, Technology, Energy and Mining, reminded the attendees at the “Developing the Caribbean” conference last week in Kingston, Jamaica.

Robinson said Jamaica is working on deploying wireless broadband access, securing networks and stimulating tech entrepreneurship around the island, a set of priorities that would sound just as current in Washington, Paris, Hong Kong or Bangalore. He also described open access and open data as fundamental parts of democratic governance, explicitly aligning the release of public data with economic development and anti-corruption efforts. Robinson also pledged to help ensure that Jamaica’s open data efforts would be successful, offering members of civil society a key ally within government.

The interest in building technical ability and capacity around the Caribbean was sparked by efforts elsewhere in the world, particularly Kenya’s open government data initiative. That’s what led the organizers to invite Paul Kukubo to speak about Kenya’s experience, which Robinson noted might be more relevant to Jamaica than that of the global north.

Predictive analytics and data sharing raise civil liberties concerns

Expanded rules for data sharing in the U.S. government will need more oversight as predictive algorithms are applied.

Last winter, around the same time as a huge row in Congress over the Cyber Intelligence Sharing and Protection Act (CISPA), U.S. Attorney General Eric Holder quietly signed off on expanded rules for government data sharing. The rules allowed the National Counterterrorism Center (NCTC), housed within the Office of the Director of National Intelligence, to analyze regulatory data collected in the course of government business for patterns relevant to domestic terrorist threats.

Julia Angwin, who reported the story for the Wall Street Journal, highlighted the key tension: the rules allow the NCTC to “examine the government files of U.S. citizens for possible criminal behavior, even if there is no reason to suspect them.”

On the one hand, this is a natural application of big data: search existing government records collected about citizens for suspicious patterns of behavior. The action can be justified for counterterrorism purposes: advanced persistent threats are real. (When national security is invoked, privacy concerns are often given short shrift.) The failure to “connect the dots” using existing data across government on Christmas Day 2009 (remember the so-called “underwear bomber”?) added impetus to getting more data into the NCTC’s hands. It’s possible that the data retention period was extended to five years because the agency didn’t have the capabilities it needed. Data mining existing records offers unprecedented opportunities to detect terrorism plots before they happen.

On the other hand, the changes at the NCTC that were authorized back in March 2012 represent a massive data grab with far-reaching consequences. The changes received little public discussion before the WSJ broke the story, and they seem to substantially override the purpose of the Federal Privacy Act that Congress passed in 1974. The rules were extended without public debate because of what amounts to a legal loophole: post the proposed changes to the Federal Register and, voilà, it’s done. In practice, this looks like an end run around the Federal Privacy Act.

Sensoring the news

Sensor journalism will augment our ability to understand the world and hold governments accountable.

When I went to the 2013 SXSW Interactive Festival to host a conversation with NPR’s Javaun Moradi about sensors, society and the media, I thought we would be talking about the future of data journalism. By the time I left the event, I’d learned that sensor journalism had already arrived and was being put to use. Today, inexpensive, easy-to-use open source hardware is making it easier for media outlets to create data themselves.

“Interest in sensor data has grown dramatically over the last year,” said Moradi. “Groups are experimenting in the areas of environmental monitoring, journalism, human rights activism, and civic accountability.” His post on what sensor networks mean for journalism sparked our collaboration after we connected in December 2011 about how data was being used in the media.

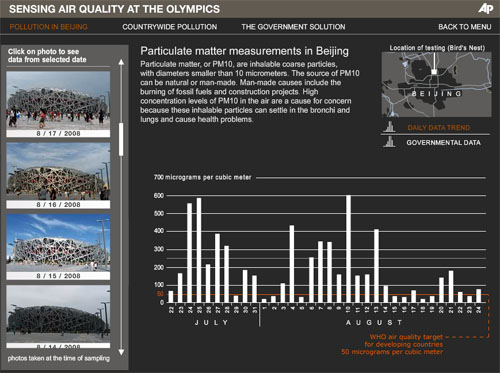

Associated Press visualization of Beijing air quality.

At a SXSW panel on “sensoring the news,” Sarah Williams, an assistant professor at MIT, described how the Spatial Information Design Lab at Columbia University had partnered with the Associated Press to independently measure air quality in Beijing.

Before the 2008 Olympics, coaches of the Olympic teams had expressed serious concern about the impact of air pollution on the athletes. That, in turn, put pressure on the Chinese government to take substantive steps to improve conditions. While the Chinese government released an air quality index, explained Williams, it didn’t explain what went into the index, nor did it provide the raw data.

The Beijing Air Tracks project arose from the need to determine what conditions on the ground really were. AP reporters carried sensors connected to their cellphones to detect particulate and carbon monoxide levels, enabling them to report air quality back in real time as they moved around the Olympic venues and the city.

The City of Chicago wants you to fork its data on GitHub

Chicago CIO Brett Goldstein is experimenting with social coding for a different kind of civic engagement.

GitHub has been gaining new prominence as the use of open source software in government grows.

Earlier this month, I included a few thoughts from Chicago’s chief information officer, Brett Goldstein, about the city’s use of GitHub in a piece exploring GitHub’s role in government.

While Goldstein says that Chicago’s open data portal will remain the primary means through which the city releases public sector data, publishing open data on GitHub is an experiment worth watching to see whether it affects reuse of the data or collaboration around it.

In a followup email, Goldstein, who also serves as Chicago’s chief data officer, shared more about why the city is on GitHub and what they’re learning. Our discussion follows.

GitHub gains new prominence as the use of open source within governments grows

The collaborative coding site hired a "government bureaucat."

When it comes to government IT in 2013, GitHub may have surpassed Twitter and Facebook as the most interesting social network.

GitHub’s profile has been rising recently, from a Wired article about open source in government to its high-profile use by the White House and within the Consumer Financial Protection Bureau. This March, after the first White House hackathon in February, the administration’s digital team posted its new API standards on GitHub. Beyond the U.S., governments in the United Kingdom, Canada, Argentina and Finland also have code on the platform.

“We’re reaching a tipping point where we’re seeing more collaboration not only within government agencies, but also between different agencies, and between the government and the public,” said GitHub head of communications Liz Clinkenbeard, when I asked her for comment.

If followers can sponsor updates on Facebook, social advertising has a new horizon

The frequency of sponsored posts looks set to grow.

This week, I found that one of my Facebook updates received significantly more attention than others I’ve posted. In part, that was because it was a share of an important New York Times story about the first time a baby was cured of HIV. But I discovered something that went beyond the story itself: someone who was not my friend had paid to sponsor the post.

According to Facebook, the promoted post had 27 times as many views because it was sponsored this way, with 96% of the views coming through the sponsored version.

When I started to investigate what had happened, I learned that I’d missed some relevant news last month. Facebook had announced that users would be able to promote the posts of friends. My situation, however, was clearly different: Christine Harris, the sponsor of my post, is not my friend.

When I followed up with Elisabeth Diana, Facebook’s advertising communications manager, she said this was part of the cross-promote feature that Facebook rolled out. If a reporter posts a public update to his followers on Facebook, Diana explained to me in an email, that update can be promoted and “boosted” to the reporter’s friends.

While I couldn’t find Harris on Facebook, Diana said with “some certainty” that she was my follower, “in order to have seen your content.” Harris definitely isn’t my friend, and while she may well be one of my followers, I have no way to search my followers to determine whether that’s so.