"artificial intelligence" entries

Is 2016 the year you let robots manage your money?

The O’Reilly Data Show podcast: Vasant Dhar on the race to build “big data machines” in financial investing.

Subscribe to the O’Reilly Data Show Podcast to explore the opportunities and techniques driving big data and data science.

In this episode of the O’Reilly Data Show, I sat down with Vasant Dhar, a professor at the Stern School of Business and Center for Data Science at NYU, founder of SCT Capital Management, and editor-in-chief of the Big Data Journal (full disclosure: I’m a member of the editorial board). We talked about the early days of AI and data mining, and recent applications of data science to financial investing and other domains.

Dhar’s first steps in applying machine learning to finance

I joke with people, I say, ‘When I first started looking at finance, the only thing I knew was that prices go up and down.’ It was only when I actually went to Morgan Stanley and took time off from academia that I learned about finance and financial markets. … What I really did in that initial experiment is I took all the trades, I appended them with information about the state of the market at the time, and then I cranked it through a genetic algorithm and a tree induction algorithm. … When I took it to the meeting, it generated a lot of really interesting discussion. … Of course, it took several months before we actually finally found the reasons for why I was observing what I was observing.

Kristian Hammond on truly democratizing data and the value of AI in the enterprise

The O'Reilly Radar Podcast: Narrative Science's foray into proprietary business data and bridging the data gap.

Subscribe to the O’Reilly Radar Podcast to track the technologies and people that will shape our world in the years to come.

In this week’s episode, O’Reilly’s Mac Slocum chats with Kristian Hammond, Narrative Science’s chief scientist. Hammond talks about Natural Language Generation, Narrative Science’s shift into the world of business data, and evolving beyond the dashboard.

Here are a few highlights:

We’re not telling people what the data are; we’re telling people what has happened in the world through a view of that data. I don’t care what the numbers are; I care about who are my best salespeople, where are my logistical bottlenecks. Quill can do that analysis and then tell you — not make you fight with it, but just tell you — and tell you in a way that is understandable and includes an explanation about why it believes this to be the case. Our focus is entirely, a little bit in media, but almost entirely in proprietary business data, and in particular we really focus on financial services right now.

You can’t make good on that promise [of what big data was supposed to do] unless you communicate it in the right way. People don’t understand charts; they don’t understand graphs; they don’t understand lines on a page. They just don’t. We can’t be angry at them for being human. Instead we should actually have the machine do what it needs to do in order to fill that gap between what it knows and what people need to know.

A little AI in lots of things: Our most promising future with tech

The O'Reilly Radar Podcast: David Rose on enchanting objects, avoiding cognitive overload, and our future relationship with tech.

In this episode of the Radar Podcast, I chat with David Rose, an entrepreneur, MIT Media Lab instructor, and author of Enchanted Objects. We talk about which objects we should enchant, and how to avoid being overwhelmed by object communication and cognitive overload.

Rose also weighs in on the AI debate and outlines the four potential ways he sees the Internet of Things shaking out.

Here are a few snippets from our conversation:

I’ve been a fan of magic and of studying the tropes that magicians have used to control the emotional arc of a trick, for example, and I think enchantment, for me, sort of sets a high bar for designers to consider not just the mechanism of what’s happening, but also consider how people are engaged — and are people delighted? What’s their emotional reaction to whatever the new connectivity or new sensor or new display is that’s in one of these objects?

Those phenomena of using light and pattern and texture are all pre-attentive, meaning your brain processes them in parallel; it’s non-distracting; it happens in less than 250 milliseconds. That’s the design space for the best types of interactions with objects because they don’t tend to overwhelm you, and you don’t perceive them as being a cognitive load because they aren’t a cognitive load.

The race to build “big data machines” in financial investing

Robot wealth managers and approaches will grow and offer alternative ways of investing.

Get the O’Reilly Money Newsletter for news and insights about finance and technology.

Editor’s note: This post was originally published in Big Data at Mary Ann Liebert, Inc., Publishers, in Volume 3, Issue 2, on June 18, 2015, under the title “Should You Trust Your Money to a Robot?” It is republished here with permission.

Financial markets emanate massive amounts of data from which machines can, in principle, learn to invest with minimal initial guidance from humans. I contrast human and machine strengths and weaknesses in making investment decisions. The analysis reveals areas in the investment landscape where machines are already very active and those where machines are likely to make significant inroads in the next few years.

Computers are making more and more decisions for us, and increasingly so in areas that require human judgment. Driverless cars, which seemed like science fiction until recently, are expected to become common in the next 10 years. There is a palpable increase in machine intelligence across the touchpoints of our lives, driven by the proliferation of data feeding into intelligent algorithms capable of learning useful patterns and acting on them. We are living through one of the greatest revolutions in our lifestyles, in which computers are increasingly engaged in our lives and decision-making, to a degree that it has become second nature. Recommendations on Amazon or auto-suggestions on Google are now so routine, we find it strange to encounter interfaces that don’t anticipate what we want. The intelligence revolution is well under way, with or without our conscious approval or consent. We are entering the era of intelligence as a service, with access to building blocks for building powerful new applications. Read more…

Building intelligent machines

To understand deep learning, let’s start simple.

Use code DATA50 to get 50% off of the new early release of “Fundamentals of Deep Learning: Designing Next-Generation Artificial Intelligence Algorithms.” Editor’s note: This is an excerpt of “Fundamentals of Deep Learning,” by Nikhil Buduma.

The brain is the most incredible organ in the human body. It dictates the way we perceive every sight, sound, smell, taste, and touch. It enables us to store memories, experience emotions, and even dream. Without it, we would be primitive organisms, incapable of anything other than the simplest of reflexes. The brain is, inherently, what makes us intelligent.

The infant brain only weighs a single pound, but somehow, it solves problems that even our biggest, most powerful supercomputers find impossible. Within a matter of days after birth, infants can recognize the faces of their parents, discern discrete objects from their backgrounds, and even tell apart voices. Within a year, they’ve already developed an intuition for natural physics, can track objects even when they become partially or completely blocked, and can associate sounds with specific meanings. And by early childhood, they have a sophisticated understanding of grammar and thousands of words in their vocabularies.

For decades, we’ve dreamed of building intelligent machines with brains like ours: robotic assistants to clean our homes, cars that drive themselves, microscopes that automatically detect diseases. But building these artificially intelligent machines requires us to solve some of the most complex computational problems we have ever grappled with, problems that our brains can already solve in a matter of microseconds. To tackle these problems, we’ll have to develop a radically different way of programming a computer using techniques largely developed over the past decade. This is an extremely active field of artificial computer intelligence often referred to as deep learning. Read more…

Artificial intelligence?

AI scares us because it could be as inhuman as humans.

Elon Musk started a trend. Ever since he warned us about artificial intelligence, all sorts of people have been jumping on the bandwagon, including Stephen Hawking and Bill Gates.

Although I believe we’ve entered the age of postmodern computing, when we don’t trust our software, and write software that doesn’t trust us, I’m not particularly concerned about AI. AI will be built in an era of distrust, and that’s good. But there are some bigger issues here that have nothing to do with distrust.

What do we mean by “artificial intelligence”? We like to point to the Turing test; but the Turing test includes an all-important Easter Egg: when someone asks Turing’s hypothetical computer to do some arithmetic, the answer it returns is incorrect. An AI might be a cold calculating engine, but if it’s going to imitate human intelligence, it has to make mistakes. Not only can it make mistakes, it can (indeed, must) be deceptive, misleading, evasive, and arrogant if the situation calls for it.

That’s a problem in itself. Turing’s test doesn’t really get us anywhere. It holds up a mirror: if a machine looks like us (including mistakes and misdirections), we can call it artificially intelligent. That begs the question of what “intelligence” is. We still don’t really know. Is it the ability to perform well on Jeopardy? Is it the ability to win chess matches? These accomplishments help us to define what intelligence isn’t: it’s certainly not the ability to win at chess or Jeopardy, or even to recognize faces or make recommendations. But they don’t help us to determine what intelligence actually is. And if we don’t know what constitutes human intelligence, why are we even talking about artificial intelligence? Read more…

Our future sits at the intersection of artificial intelligence and blockchain

The O'Reilly Radar Podcast: Steve Omohundro on AI, cryptocurrencies, and ensuring a safe future for humanity.

I met up with Possibility Research president Steve Omohundro at our Bitcoin & the Blockchain Radar Summit to talk about an interesting intersection: artificial intelligence (AI) and blockchain/cryptocurrency technologies. This Radar Podcast episode features our discussion about the role cryptocurrency and blockchain technologies will play in the future of AI, Omohundro’s Self Aware Systems project that aims to ensure intelligent technologies are beneficial for humanity, and his work on the Pebble cryptocurrency.

Synthesizing AI and crypto-technologies

Bitcoin piqued Omohundro’s interest from the very start, but his excitement built as he started realizing the disruptive potential of the technology beyond currency — especially the potential for smart contracts. He began seeing ways the technology will intersect with artificial intelligence, the area of focus for much of his work:

I’m very excited about what’s happening with the cryptocurrencies, particularly Ethereum. I would say Ethereum is the most advanced of the smart contracting ideas, and there’s just a flurry of insights, and people are coming up every week with, ‘Oh we could use it to do this.’ We could have totally autonomous corporations running on the blockchain that copy what Uber does, but much more cheaply. It’s like, ‘Whoa what would that do?’

I think we’re in a period of exploration and excitement in that field, and it’s going to merge with the AI systems because programs running on the blockchain have to connect to the real world. You need to have sensors and actuators that are intelligent, have knowledge about the world, in order to integrate them with the smart contracts on the blockchain. I see a synthesis of AI and cryptocurrencies and crypto-technologies and smart contracts. I see them all coming together in the next couple of years.

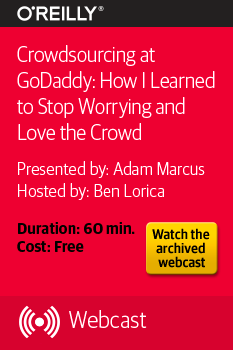

Exploring methods in active learning

Tips on how to build effective human-machine hybrids, from crowdsourcing expert Adam Marcus.

In a recent O’Reilly webcast, “Crowdsourcing at GoDaddy: How I Learned to Stop Worrying and Love the Crowd,” Adam Marcus explains how to mitigate common challenges of managing crowd workers, how to make the most of human-in-the-loop machine learning, and how to establish effective and mutually rewarding relationships with workers. Marcus is the director of data on the Locu team at GoDaddy, where the “Get Found” service provides businesses with a central platform for managing their online presence and content.

In the webcast, Marcus uses practical examples from his experience at GoDaddy to reveal helpful methods for how to:

- Offset the inevitability of wrong answers from the crowd

- Develop and train workers through a peer-review system

- Build a hierarchy of trusted workers

- Make crowd work inspiring and enable upward mobility

What to do when humans get it wrong

It turns out there is a simple way to offset human error: redundantly ask people the same questions. Marcus explains that when you ask five different people the same question, there are some creative ways to combine their responses, and use a majority vote. Read more…
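The redundancy idea can be sketched in a few lines of Python. This is only an illustration of plain majority voting, not Marcus's or GoDaddy's actual pipeline; the function name `majority_vote`, the tie handling, and the sample answers are all assumptions made for the example.

```python
from collections import Counter


def majority_vote(answers):
    """Combine redundant crowd answers to one question.

    Returns (winner, agreement), where agreement is the share of
    workers who gave the most common answer. Returns (None, agreement)
    when two or more answers tie for first place. Hypothetical helper,
    not taken from the webcast.
    """
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(answers).most_common()
    top_answer, top_count = counts[0]
    agreement = top_count / len(answers)
    # A tie for first place means the crowd has not settled the question,
    # so the caller may want to ask more workers.
    if len(counts) > 1 and counts[1][1] == top_count:
        return None, agreement
    return top_answer, agreement


# Five workers answer the same (made-up) question about a business.
responses = ["open", "open", "closed", "open", "closed"]
winner, agreement = majority_vote(responses)
print(winner, agreement)
```

Returning the agreement share alongside the winner is a design choice for the sketch: a low share is a cheap signal that a question may need more workers or a trusted reviewer.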

Beyond AI: artificial compassion

If what we are trying to build is artificial minds, intelligence might be the smaller, easier part.

When we talk about artificial intelligence, we often make an unexamined assumption: that intelligence, understood as rational thought, is the same thing as mind. We use metaphors like “the brain’s operating system” or “thinking machines,” without always noticing their implicit bias.

But if what we are trying to build is artificial minds, we need only look at a map of the brain to see that in the domain we’re tackling, intelligence might be the smaller, easier part.

Maybe that’s why we started with it.

After all, the rational part of our brain is a relatively recent add-on. Setting aside unconscious processes, most of our gray matter is devoted not to thinking, but to feeling.

There was a time when we deprecated this larger part of the mind, as something we should either ignore or, if it got unruly, control.

But now we understand that, as troublesome as they may sometimes be, emotions are essential to being fully conscious. For one thing, as neurologist Antonio Damasio has demonstrated, we need them in order to make decisions. A certain kind of brain damage leaves the intellect unharmed, but removes the emotions. People with this affliction tend to analyze options endlessly, never settling on a final choice. Read more…

Artificial intelligence: summoning the demon

We need to understand that our own intelligence is competition for our artificial, not-quite intelligences.

A few days ago, Elon Musk likened artificial intelligence (AI) to “summoning the demon.” As I’m sure you know, there are many stories in which someone summons a demon. As Musk said, they rarely turn out well.

There’s no question that Musk is an astute student of technology. But his reaction is misplaced. There are certainly reasons for concern, but they’re not Musk’s.

The problem with AI right now is that its achievements are greatly over-hyped. That’s not to say those achievements aren’t real, but they don’t mean what people think they mean. Researchers in deep learning are happy if they can recognize human faces with 80% accuracy. (I’m skeptical about claims that deep learning systems can reach 97.5% accuracy; I suspect that the problem has been constrained in some way that makes it much easier. For example, asking “is there a face in this picture?” or “where is the face in this picture?” is much different from asking “what is in this picture?”) That’s a hard problem, a really hard problem. But humans recognize faces with nearly 100% accuracy. For a deep learning system, that’s an almost inconceivable goal. And 100% accuracy is orders of magnitude harder than 80% accuracy, or even 97.5%. Read more…