As I noted in a previous post, model building is just one component of the analytic lifecycle. Many analytic projects result in models that get deployed in production environments. Moreover, companies are beginning to treat analytics as mission-critical software and have real-time dashboards to track model performance.

Once a model is deemed to be underperforming or misbehaving, diagnostic tools are needed to help determine appropriate fixes. It could well be that the model itself needs to be revisited and updated, but there are instances when the underlying data sources (1) and data pipelines are what need to be fixed. Beyond the formal systems put in place specifically for monitoring analytic products, tools for reproducing data science workflows can come in handy.

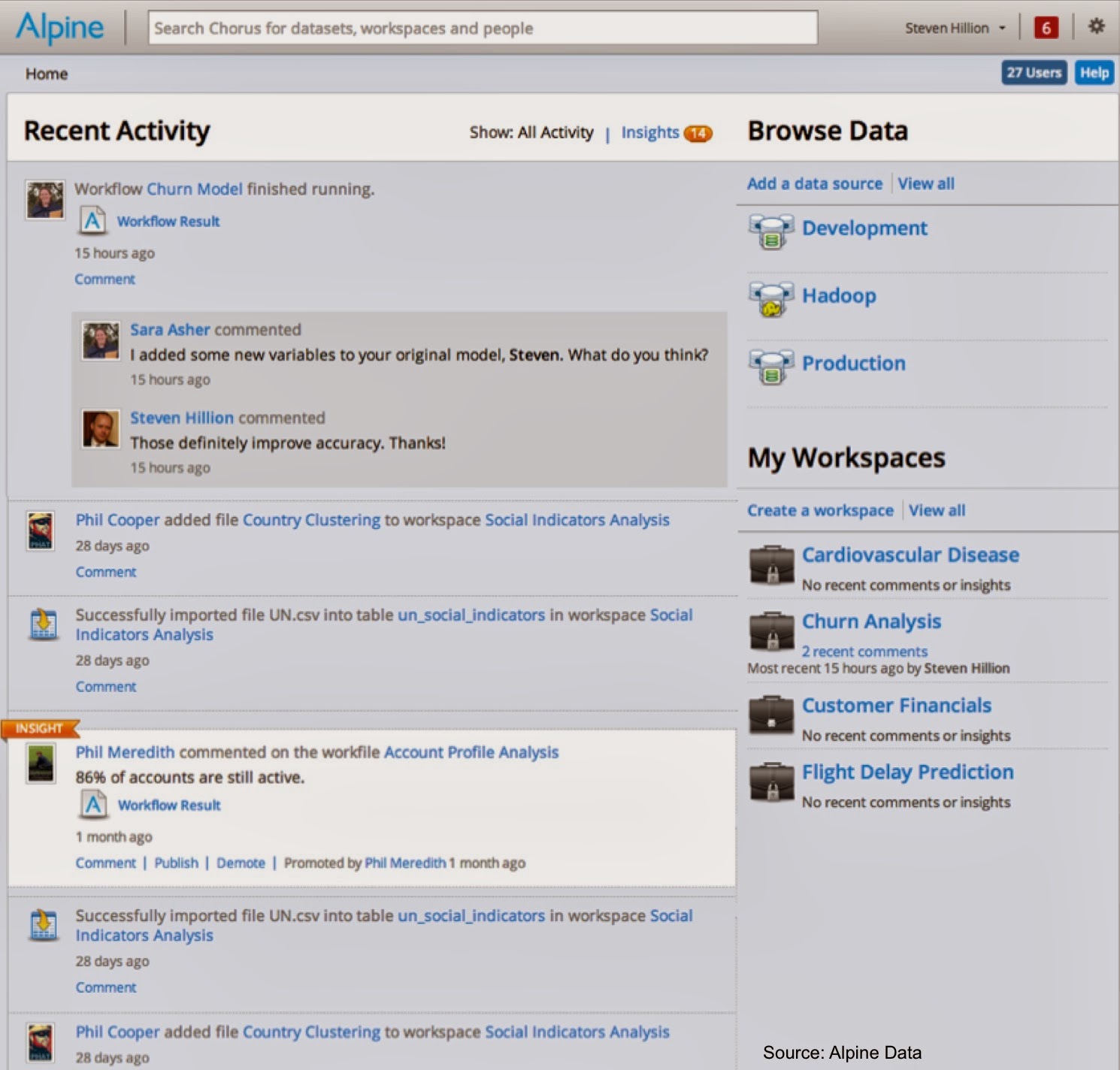

Version control systems are useful, but appeal primarily to developers. The recent wave of data products comes with collaboration features that target a broader user base. Properly instrumented, collaboration tools are also useful for reproducing and debugging complex data analysis projects. As an example, Alpine Data records all the actions taken while working on a data project: a screen displays all recent “actions and changes,” and team members can choose to leave comments or questions.

If you’re a tool builder charged with baking in collaboration, consider how best to expose activity logs as well. Properly crafted “audit trails” can be very useful for uncovering and fixing problems that arise once a model gets deployed in production.
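To make the idea of an exposed activity log concrete, here is a minimal sketch in Python. It is not how Alpine Data or any particular product implements this; the `Action` and `AuditTrail` names, the fields, and the sample file and user names are all hypothetical, chosen only to illustrate an append-only record of who did what and when, which can later be filtered or exported.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


def _utc_now() -> str:
    """Return the current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class Action:
    """One entry in the activity log (hypothetical schema)."""
    user: str
    kind: str                      # e.g. "load_data", "train_model", "comment"
    details: Dict[str, Any]
    timestamp: str = field(default_factory=_utc_now)


class AuditTrail:
    """Append-only log of actions taken on a data project."""

    def __init__(self) -> None:
        self._actions: List[Action] = []

    def record(self, user: str, kind: str, **details: Any) -> Action:
        """Append a new action and return it."""
        action = Action(user=user, kind=kind, details=details)
        self._actions.append(action)
        return action

    def recent(self, n: int = 10) -> List[Action]:
        """Return up to the last n actions, newest first."""
        return list(reversed(self._actions[-n:]))

    def by_kind(self, kind: str) -> List[Action]:
        """Return all actions of a given kind, oldest first."""
        return [a for a in self._actions if a.kind == kind]

    def to_jsonl(self) -> str:
        """Export the log as JSON Lines, one action per line."""
        return "\n".join(json.dumps(asdict(a), sort_keys=True) for a in self._actions)


# Hypothetical usage: three team actions on one project.
trail = AuditTrail()
trail.record("alice", "load_data", source="sales.csv")
trail.record("bob", "train_model", algorithm="logistic_regression",
             features=["price", "region"])
trail.record("alice", "comment", text="Why was region one-hot encoded?")

for action in trail.recent():
    print(action.timestamp, action.user, action.kind, action.details)
```

When a deployed model starts misbehaving, a log like this lets someone ask which data source was loaded and which training step ran, without having to reconstruct the project history from memory. A real system would also need durable storage and access control, which this sketch leaves out.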

(1) Models can be on the receiving end of bad data or the victim of attacks from adversaries.