Subscribe to the O’Reilly Data Show Podcast to explore the opportunities and techniques driving big data and data science.

As tools for advanced analytics become more accessible, data scientists’ roles will evolve. Most media stories emphasize a need for expertise in algorithms and quantitative techniques (machine learning, statistics, probability), yet expertise in advanced algorithms is just one aspect of industrial data science.

During the latest episode of the O’Reilly Data Show podcast, I sat down with Alice Zheng, one of Strata + Hadoop World’s most popular speakers. She has a gift for explaining complex topics to a broad audience, through presentations and in writing. We talked about her background, techniques for evaluating machine learning models, how much math data scientists need to know, and the art of interacting with business users.

Making machine learning accessible

People who work at getting analytics adopted and deployed learn early on the importance of working with domain/business experts. As excited as I am about the growing number of tools that open up analytics to business users, the interplay between data experts (data scientists, data engineers) and domain experts remains important. In fact, human-in-the-loop systems are being used in many critical data pipelines. Zheng recounts her experience working with business analysts:

It’s not enough to tell someone, “This is done by boosted decision trees, and that’s the best classification algorithm, so just trust me, it works.” As a builder of these applications, you need to understand what the algorithm is doing in order to make it better. As a user who ultimately consumes the results, it can be really frustrating to not understand how they were produced. When we worked with analysts in Windows or in Bing, we were analyzing computer system logs. That’s very difficult for a human being to understand. We definitely had to work with the experts who understood the semantics of the logs in order to make progress. They had to understand what the machine learning algorithms were doing in order to provide useful feedback.

…

It really comes back to this big divide, this bottleneck, between the domain expert and the machine learning expert. I saw that as the most challenging problem facing us when we try to really make machine learning widely applied in the world. I saw both machine learning experts and domain experts as being difficult to scale up. There’s only a few of each kind of expert produced every year. I thought, how can I scale up machine learning expertise? I thought the best thing that I could do is to build software that doesn’t take a machine learning expert to use, so that the domain experts can use them to build their own applications. That’s what prompted me to do research in automating machine learning while at MSR [Microsoft Research].

Feature engineering over algorithms

It’s a common adage among experienced data scientists that “good features allow a simple model to beat a complex model.” One of the major reasons many find deep learning so compelling is that it provides a mechanism for learning a hierarchy of representations across layers. In fact, some machine learning veterans, including Zheng, regard deep learning as mainly about feature representations.

But deep learning isn’t suitable for every problem or domain (particularly when model explainability is important). In many settings, feature selection and feature engineering remain at the heart of analytic projects. Zheng explains this using machine-generated log data as an example:

There’s structure in it, but it’s kind of a different form. … It’s spit out by machines and programs. There’s structure, but that structure is difficult to understand for humans. … So, you can’t just throw all of it into an algorithm and expect the algorithm to be able to make sense of it. You really have to process the features, do a lot of pre-processing, and first do things like extract out the frequent sequences, maybe, or figure out what’s the right way to represent IP addresses, for instance. Maybe you don’t want to represent latency by the actual latency number, which could have a very skewed distribution, with lots and lots of large numbers. You might want to assign them into bins or something. There are a lot of things that you need to do to get the data into a format that’s friendly to the model, and then you want to choose the right model. Maybe after you choose the model, you realize this model really is suitable for numeric data and not categorical data. Then you need to go back to the feature engineering part and figure out the best way to represent the data.

…

I hesitate to say anything critical because half of my friends are in machine learning, which is all about algorithms. I think we already have enough algorithms. It’s not that we don’t need more and better algorithms. I think a much, much bigger challenge is data itself, features, and feature engineering.
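To make two of Zheng’s examples concrete, here is a minimal sketch of binning a skewed latency value and coarsening an IP address into a categorical feature. The bin edges, the /24 prefix length, and the function names `latency_bin` and `subnet_feature` are illustrative assumptions, not something described in the interview; sensible choices depend on the data and the model.

```python
import bisect
import ipaddress

# Assumed bin edges in milliseconds (powers of ten); chosen only for illustration.
LATENCY_EDGES_MS = [10, 100, 1_000, 10_000]


def latency_bin(latency_ms: float) -> int:
    """Map a raw latency to a coarse bin index.

    Bin 0 covers [0, 10) ms, bin 1 covers [10, 100) ms, and so on;
    everything at or above 10,000 ms lands in the last bin (4).
    """
    return bisect.bisect_right(LATENCY_EDGES_MS, latency_ms)


def subnet_feature(ip: str, prefix_len: int = 24) -> str:
    """Represent an IPv4 address by its enclosing subnet (a categorical value).

    The /24 default is an assumption; other prefix lengths may suit other logs.
    """
    network = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
    return str(network)


if __name__ == "__main__":
    # A skewed set of latencies collapses into a handful of bins.
    for ms in [3, 42, 870, 250_000]:
        print(ms, "->", latency_bin(ms))
    print(subnet_feature("192.168.1.37"))
```

Binning trades precision for robustness: a few very large latencies no longer dominate the feature’s scale. If the chosen model turns out to prefer numeric inputs, the bin index (or a log transform of the raw value) is one option to revisit, in line with the back-and-forth between model choice and feature representation that Zheng describes.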

Evaluating machine learning models

Part of the on-the-job training of data scientists includes interfacing and communicating with business users, which means translating technical and often geeky metrics into terms those users understand. In her forthcoming O’Reilly report, Zheng provides a nice framework for evaluating machine learning models:

If we think of training the model as a part of it, then even after you’ve trained a model and evaluated it and found it to be good by some evaluation metric standards, when you deploy it, where it actually goes and faces users, then there’s a different set of metrics that would impact the users. You might measure: how long do users actually interact with this model? Does it actually make a difference in the length of time? Did they used to interact less and now they’re more engaged, or vice versa? That’s different from whatever evaluation metric that you used, like AUC or per class accuracy or precision and recall. … It’s probably not enough to just say this model has a .85 F1 score and expect someone who has not done any data science to understand what that means. How good are the results? What does it actually mean to the end users of the product?
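For readers less familiar with the offline metrics Zheng mentions, the following sketch computes precision, recall, F1, and per-class accuracy from a list of true and predicted labels. The function names and the toy labels are hypothetical and exist only for illustration; they are not taken from Zheng’s report.

```python
from collections import defaultdict


def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, F1) for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def per_class_accuracy(y_true, y_pred):
    """Return {class: fraction of that class's examples predicted correctly}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    return {label: correct[label] / total[label] for label in total}


if __name__ == "__main__":
    # Toy labels: 4 positives and 6 negatives (made up for illustration).
    y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
    print(precision_recall_f1(y_true, y_pred))
    print(per_class_accuracy(y_true, y_pred))
```

None of these numbers says anything about how users respond once the model is deployed. As Zheng points out, that question calls for a separate set of online measurements, such as how long users engage with the product.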

You can listen to our entire interview in the SoundCloud player above, or subscribe through Stitcher, SoundCloud, TuneIn, or iTunes.

Join Alice Zheng and the team from Dato for a one-day tutorial, Building and deploying large-scale machine learning applications, next month at Strata + Hadoop World NYC.

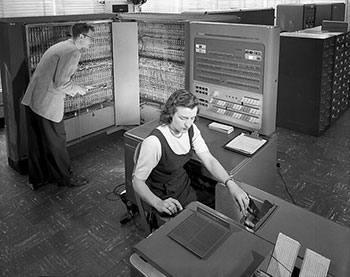

Public domain image on article and category pages via Wikimedia Commons.