Three best practices for building successful data pipelines

Reproducibility, consistency, and productionizability let data scientists focus on the science.

Building a good data pipeline can be technically tricky. As a data scientist who has worked at Foursquare and Google, I can honestly say that one of our biggest headaches was locking down our Extract, Transform, and Load (ETL) process.

At The Data Incubator, our team has trained more than 100 talented Ph.D. data science fellows who are now data scientists at a wide range of companies, including Capital One, the New York Times, AIG, and Palantir. We commonly hear from Data Incubator alumni and hiring managers that one of their biggest challenges is also implementing their own ETL pipelines.

Drawing on their experiences and my own, I’ve identified three key areas that are often overlooked in data pipelines. They involve making your analysis:

- Reproducible

- Consistent

- Productionizable

While these areas alone cannot guarantee good data science, getting these three technical aspects of your data pipeline right helps ensure that your data and research results are both reliable and useful to an organization. Read more…

From search to distributed computing to large-scale information extraction

The O'Reilly Data Show Podcast: Mike Cafarella on the early days of Hadoop/HBase and progress in structured data extraction.

Subscribe to the O’Reilly Data Show Podcast to explore the opportunities and techniques driving big data and data science.

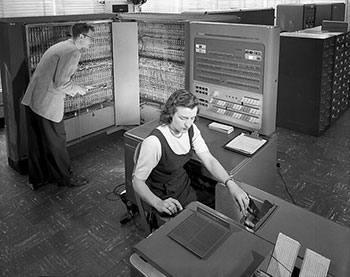

February 2016 marks the 10th anniversary of Hadoop, at a time when many IT organizations actively use Hadoop and/or one of the open source big data projects that originated after it and, in some cases, depend on it.

During the latest episode of the O’Reilly Data Show Podcast, I had an extended conversation with Mike Cafarella, assistant professor of computer science at the University of Michigan. Along with Strata + Hadoop World program chair Doug Cutting, Cafarella is the co-founder of both Hadoop and Nutch. In addition, Cafarella was the first contributor to HBase.

We talked about the origins of Nutch, Hadoop (HDFS, MapReduce), and HBase, as well as his decision to pursue an academic career and step away from these projects. Cafarella’s pioneering contributions to open source search and distributed systems fit neatly with his work in information extraction. We discussed a new startup he recently co-founded, ClearCutAnalytics, to commercialize a highly regarded academic project for structured data extraction (full disclosure: I’m an advisor to ClearCutAnalytics). As I noted in a previous post, information extraction (from a variety of data types and sources) is an exciting area that will lead to the discovery of new features (i.e., variables) that may end up improving many existing machine learning systems. Read more…

The music science trifecta

Digital content, the Internet, and data science have changed the music industry.

Download our new free report “Music Science: How Data and Digital Content are Changing Music,” by Alistair Croll, to learn more about music, data, and music science.

Today’s music industry is the product of three things: digital content, the Internet, and data science. This trifecta has altered how we find, consume, and share music. How we got here makes for an interesting history lesson, and a cautionary tale for incumbents that wait too long to embrace data.

When music labels first began releasing music on compact disc in the early 1980s, it was a windfall for them. Publishers raked in the money as music fans upgraded their entire collections to the new format. However, those companies failed to see the threat to which they were exposing themselves.

Until that point, piracy hadn’t been a concern because copies just weren’t as good as the originals. To make a mixtape using an audio cassette recorder, a fan had to hunch over the radio for hours, finger poised atop the record button — and then copy the tracks stolen from the airwaves onto a new cassette for that special someone. So, the labels didn’t think to build protection into the CD music format. Some companies, such as Sony, controlled both the devices and the music labels, giving them a false belief that they could limit the spread of content in that format.

One reason piracy seemed so far-fetched was that nobody thought of computers as music devices. Apple Computer even promised Apple Records that it would never enter the music industry — and when it finally did, it launched a protracted legal battle that even led coders in Cupertino to label one of the Mac sound effects “Sosumi” (pronounced “so sue me”) as a shot across Apple Records’ legal bow. Read more…

Showcasing the real-time processing revival

Tools and learning resources for building intelligent, real-time products.

Register for Strata + Hadoop World NYC, which will take place September 29 to October 1, 2015.

A few months ago, I noted the resurgence in interest in large-scale stream-processing tools and real-time applications. Interest remains strong, and if anything, I’ve noticed growth in the number of companies wanting to understand how they can leverage the growing number of tools and learning resources to build intelligent, real-time products.

This is something we’ve observed using many metrics, including product sales, the number of submissions to our conferences, and the traffic to Radar and newsletter articles.

As we looked at putting together the program for Strata + Hadoop World NYC, we were excited to see a large number of compelling proposals on these topics. To that end, I’m pleased to highlight a strong collection of sessions on real-time processing and applications coming up at the event. Read more…

Bridging the divide: Business users and machine learning experts

The O'Reilly Data Show Podcast: Alice Zheng on feature representations, model evaluation, and machine learning models.

As tools for advanced analytics become more accessible, data scientists’ roles will evolve. Most media stories emphasize a need for expertise in algorithms and quantitative techniques (machine learning, statistics, probability), and yet the reality is that expertise in advanced algorithms is just one aspect of industrial data science.

During the latest episode of the O’Reilly Data Show podcast, I sat down with Alice Zheng, one of Strata + Hadoop World’s most popular speakers. She has a gift for explaining complex topics to a broad audience, through presentations and in writing. We talked about her background, techniques for evaluating machine learning models, how much math data scientists need to know, and the art of interacting with business users.

Making machine learning accessible

People who work at getting analytics adopted and deployed learn early on the importance of working with domain/business experts. As excited as I am about the growing number of tools that open up analytics to business users, the interplay between data experts (data scientists, data engineers) and domain experts remains important. In fact, human-in-the-loop systems are being used in many critical data pipelines. Zheng recounts her experience working with business analysts:

It’s not enough to tell someone, “This is done by boosted decision trees, and that’s the best classification algorithm, so just trust me, it works.” As a builder of these applications, you need to understand what the algorithm is doing in order to make it better. As a user who ultimately consumes the results, it can be really frustrating to not understand how they were produced. When we worked with analysts in Windows or in Bing, we were analyzing computer system logs. That’s very difficult for a human being to understand. We definitely had to work with the experts who understood the semantics of the logs in order to make progress. They had to understand what the machine learning algorithms were doing in order to provide useful feedback. Read more…

ResourceMiner: Toppling the Tower of Babel in the lab

An open source project aims to crowdsource a common language for experimental design.

Contributing author: Tim Gardner

Editor’s note: This post originally appeared on PLOS Tech; it is republished here with permission.

From Gutenberg’s invention of the printing press to the Internet of today, technology has enabled faster communication, and faster communication has accelerated technology development. Today, we can zip photos from a mountaintop in Switzerland back home to San Francisco with hardly a thought, but that wasn’t so trivial just a decade ago. It’s not just selfies that are being sent; it’s also product designs, manufacturing instructions, and research plans — all of it enabled by invisible technical standards (e.g., TCP/IP) and language standards (e.g., English) that allow machines and people to communicate.

But in the laboratory sciences (life, chemical, material, and other disciplines), communication remains inhibited by practices more akin to the oral traditions of a blacksmith shop than the modern Internet. In a typical academic lab, the reference description of an experiment is the long-form narrative in the “Materials and Methods” section of a paper or a book. Similarly, industry researchers depend on basic text documents in the form of Standard Operating Procedures. In both cases, essential details of the materials and protocol for an experiment are often written somewhere in a long-forgotten, hard-to-interpret lab notebook (paper or electronic). Even more often, details are simply left to the experimenter to remember and to the “lab culture” to retain.

At the dawn of science, when a handful of researchers were working on fundamental questions, this may have been good enough. But nowadays this archaic method of protocol record keeping and sharing is so lacking that half of all biomedical studies are estimated to be irreproducible, wasting $28 billion of U.S. government funding each year. With more than $400 billion invested each year in biological and chemical research globally, the full cost of irreproducible research to the public and private sector worldwide could be staggeringly large. Read more…